We are excited to announce that eInfochips will be showcasing two cutting-edge demos at the upcoming NVIDIA GTC event! The first demo is JetCarrier96, a reference design for OEM customers looking to create a custom carrier board for the NVIDIA Jetson Edge AI and Robotics platform. The board features technologies commonly found in both advanced and simplified carrier board designs, allowing customers to choose the subsystems that best suit their needs. The second demo is the Arrow SmartRover AMR reference design. This platform simplifies the development of autonomous mobile robots (AMRs) by providing a ready-to-use solution for testing robot navigation, object detection, collision avoidance, and indoor scene mapping.

And that’s not all: we will also be hosting two webcasts at the event to dive deeper into the latest trends and insights in autonomous mobile robots and computer vision.

Key Customer Engagements

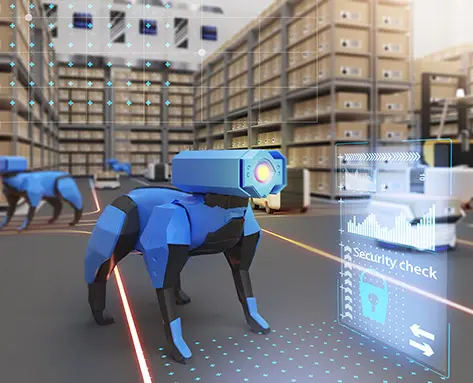

Overview – Companies are turning to autonomous mobile robots (AMRs) to streamline repetitive and physically demanding tasks. With the integration of artificial intelligence and machine learning, AMRs are becoming more intelligent and adaptive. At eInfochips, we have a Robotics Center of Excellence (CoE) staffed by experts in the relevant platforms and technologies. Our team has developed multiple AMR proofs of concept and complete solutions for our clients.

Key Highlights

- Trends and considerations for Robotics

- Key Technologies and Components

- Use cases and solutions

Overview – Computer vision is a field of artificial intelligence (AI) that enables computers to understand images and videos, derive meaningful information from them, and act on it. It trains machines to perform these functions using cameras, data, and algorithms, and it must do so in much less time than a human would need. Computer vision was long limited largely to 2D images, but advances in technology have extended it to 3D. Machines can now perceive depth and dimensions, which allows for more accurate and sophisticated analysis.

Key Highlights

- Trends and considerations for Computer Vision

- 2D vs. 3D Computer Vision

- Use cases and solutions
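
To make the 2D versus 3D distinction concrete, here is a minimal sketch of how a depth reading turns a 2D pixel into a 3D point. It assumes an ideal pinhole camera with no lens distortion. The function name `pixel_to_point` and the intrinsics (`fx`, `fy`, `cx`, `cy`) are hypothetical values chosen for illustration and are not taken from the webcast.

```python
from typing import Tuple


def pixel_to_point(u: float, v: float, depth_m: float,
                   fx: float, fy: float,
                   cx: float, cy: float) -> Tuple[float, float, float]:
    """Back-project pixel (u, v) with a depth reading into camera-frame XYZ (meters).

    Assumes an ideal pinhole model: fx, fy are focal lengths in pixels and
    (cx, cy) is the principal point. Lens distortion is ignored.
    """
    if depth_m <= 0:
        raise ValueError("depth must be positive")
    x = (u - cx) * depth_m / fx  # horizontal offset from the optical axis
    y = (v - cy) * depth_m / fy  # vertical offset from the optical axis
    return (x, y, depth_m)


# Hypothetical intrinsics for a 640x480 depth camera (illustrative only).
point = pixel_to_point(u=420, v=240, depth_m=2.0,
                       fx=500.0, fy=500.0, cx=320.0, cy=240.0)
print(point)
```

A 2D image alone gives only `(u, v)`; the depth value is what lets the pixel be placed in metric space, which is the extra information 3D computer vision works with.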

NVIDIA GTC Event 2023

WEBCAST 1

Presenters

Amit Gupta

Amit currently leads the Robotics Center of Excellence and is part of a solutions team based at eInfochips’s Noida, India location. He has over 23 years of experience in the semiconductor and electronics industry, handling stages from innovation to monetization. He has conceived complete products and solutions involving cross-functional teams (hardware, firmware, mechanical, cloud, app, and certifications) to achieve ROI for customers in edge AI, robotics, Industry 4.0, Internet of Things, smart utility, telehealth, and asset tracking.

Harsh Vardhan Singh

Harsh Vardhan Singh holds an M.S. in computer science from Georgia Institute of Technology with machine learning as the specialization (OMSCS). He’s a solutions research engineer who’s currently working on sensor fusion and the navigation stack within the ROS 2 environment for an autonomous mobile robot proof-of-concept. Harsh has around seven years of industrial experience, with an initial six years spent in the Research and Innovation Labs. He’s worked on multiple projects involving applications of computer vision, AI, deep learning, machine learning, reinforcement learning, and robotics.

Man Mohan Tripathi

Man Mohan is an engineering professional with a Master of Technology (M.Tech.) in artificial intelligence from the University of Hyderabad. He has more than 8 years of experience in deep learning, computer vision, and the Internet of Things (IoT). His current areas of interest are 3D computer vision, depth imaging, and deep neural networks. He works at eInfochips Inc. as a Solution Engineer.

Pallavi Pansare

Pallavi Pansare is a Solution Engineer at eInfochips with over 4 years of expertise in signal processing, image processing, computer vision, deep learning, and machine learning. She holds a master’s degree in signal processing and is currently pursuing her Ph.D. in artificial intelligence. She has worked on AI/ML projects related to image super-resolution and image segmentation, and is currently working on 3D object detection.

Related Blogs

Sensor Fusion – Part 1

This blog is the first part of a two-part series introducing the use of sensor fusion in Autonomous Mobile Robots (AMRs) that work with ROS 2 (Robot Operating System 2). In this part, we introduce sensor fusion, briefly cover a sensor fusion algorithm called the Extended Kalman Filter (EKF), and then walk through some of the experiments we did in simulation and on our AMR.

Sensor Fusion – Part 2

This blog is the second part of a two-part series introducing the use of sensor fusion in Autonomous Mobile Robots (AMRs) that work with ROS 2 (Robot Operating System 2). In the first part, we introduced sensor fusion, briefly covered a sensor fusion algorithm called the Extended Kalman Filter (EKF), and then walked through some of the experiments we did in simulation and on our AMR.
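
As a rough illustration of the kind of filtering this series covers, the sketch below fuses an odometry-style prediction with a position measurement using a scalar, linear Kalman filter. This is the simpler linear relative of the EKF, not the EKF itself and not the implementation used in the blogs. The class name `Kalman1D` and all noise values are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Kalman1D:
    """Scalar linear Kalman filter (illustrative; an EKF would linearize
    nonlinear motion and sensor models around the current estimate)."""
    x: float  # state estimate: position along a line, in meters
    p: float  # variance of the estimate
    q: float  # process noise variance added at each prediction
    r: float  # measurement noise variance

    def predict(self, delta: float) -> None:
        """Propagate the state with an odometry displacement."""
        self.x += delta
        self.p += self.q  # uncertainty grows while dead reckoning

    def update(self, z: float) -> float:
        """Correct the state with a position measurement; return the gain."""
        k = self.p / (self.p + self.r)  # Kalman gain, between 0 and 1
        self.x += k * (z - self.x)      # blend prediction and measurement
        self.p *= (1.0 - k)             # uncertainty shrinks after fusing
        return k


# Hypothetical numbers: odometry reports 1.0 m of travel, a second
# sensor then measures the position as 1.2 m.
kf = Kalman1D(x=0.0, p=1.0, q=0.1, r=0.5)
kf.predict(delta=1.0)
gain = kf.update(z=1.2)
print(kf.x, kf.p, gain)
```

The fused estimate lands between the odometry prediction and the measurement, weighted by how much each source is trusted, and the variance after the update is lower than either source alone would give.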

From Concept to Completion: Streamlining 3D Scene Creation for Robotics with Nvidia Isaac Sim and Blender

The article discusses the benefits and challenges of combining Blender and NVIDIA Isaac Sim to create realistic simulations for robotics applications. By integrating Blender’s 3D modeling and animation capabilities with NVIDIA Isaac Sim’s simulation capabilities, more detailed and accurate models can be developed for testing and refining algorithms. Additionally, synthetic data generated from NVIDIA Isaac Sim can be used to train deep perception models, which reduces the time and effort required to gather and annotate datasets.

Control your robot using micro-ROS and NVIDIA Jetson kit

ROS is an open-source framework that simplifies the development of complex robotic applications. It utilizes a distributed architecture that facilitates communication between various software components within a robot’s system.

AMR Navigation Using Isaac ROS VSLAM and Nvblox with Intel Realsense Camera

Autonomous mobile robot (AMR) navigation relies heavily on visual SLAM, which stands for visual simultaneous localization and mapping. This technique uses a camera to estimate the robot’s position while simultaneously creating a map of the environment. Visual SLAM is used in applications such as Mars exploration rovers, unmanned robots, endoscopy, and robotic vacuum cleaners.

Training and Deployment of DL Model Using Simulated Data

Simulated data is increasingly popular for training and deploying deep learning models. Advances in simulation technology enable generating realistic synthetic data for applications such as robotics, autonomous vehicles, and computer vision. Although simulated data is cost-effective and efficient, deploying models in the real world requires careful consideration of transferability and generalization.

Autonomous navigation of AMR using RTAB-Map and ToF Camera

Exploration is a crucial aspect of autonomous navigation in robotics. Simultaneous Localization and Mapping (SLAM) is a fundamental technique that enables robots to navigate and map unknown environments. Visual SLAM (VSLAM) is a specific type of SLAM that enables robots to map their surroundings and estimate their own position (odometry) in real time using visual input from cameras.