Introduction

The problem we want to solve is to accurately estimate the state of a dynamic system. A dynamic system is one whose state changes as time progresses, either through the natural evolution of the system or in response to some external input. Mathematically, such a system is represented by a set of differential equations that describe how its state propagates through time.

We call this the motion (or process) model of our system. Given observed measurements and, optionally, some input to the system, it is possible to estimate the system state at the next time step. This is called state estimation. An example of such a system is an Autonomous Mobile Robot (AMR) moving in a warehouse or a lab.

Here, the state of the AMR would be its position with respect to some fixed origin, its velocity, and its acceleration. These state variables can be measured directly or deduced mathematically from sensor readings. It is important to keep in mind, however, that every sensor has its own flaws. For example, a wheel encoder lets us count the number of rotations of a wheel. Using this information along with the AMR dynamics (the motion model), we can predict the new position of the AMR.
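As a minimal sketch of this kind of encoder-based prediction, the snippet below dead-reckons a pose from encoder tick increments. It assumes a differential-drive robot, which the article does not specify, and the function name `integrate_encoders` as well as the tick resolution, wheel radius, and wheel base values are hypothetical placeholders.

```python
import math

def integrate_encoders(x, y, yaw, ticks_left, ticks_right,
                       ticks_per_rev=4096, wheel_radius=0.033, wheel_base=0.160):
    """Dead-reckon a differential-drive pose from encoder tick increments.

    All default geometry values are illustrative assumptions.
    """
    # Distance rolled by each wheel since the last update.
    metres_per_tick = 2.0 * math.pi * wheel_radius / ticks_per_rev
    d_left = ticks_left * metres_per_tick
    d_right = ticks_right * metres_per_tick

    # Travel of the robot centre and change in heading.
    d_centre = 0.5 * (d_left + d_right)
    d_yaw = (d_right - d_left) / wheel_base

    # Midpoint integration of the planar motion model.
    x += d_centre * math.cos(yaw + 0.5 * d_yaw)
    y += d_centre * math.sin(yaw + 0.5 * d_yaw)

    # Keep the heading inside (-pi, pi].
    yaw = math.atan2(math.sin(yaw + d_yaw), math.cos(yaw + d_yaw))
    return x, y, yaw

# One full revolution of both wheels moves the robot straight ahead.
pose = integrate_encoders(0.0, 0.0, 0.0, ticks_left=4096, ticks_right=4096)
```

Because the pose is built purely by accumulating tick counts, any ticks that do not correspond to real ground travel are integrated into the estimate and never corrected.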

However, if the wheels slip, the wheel encoders report extra wheel revolutions, and our prediction of the AMR’s state becomes erroneous. Problems of this kind are further exacerbated by sensor noise and the physical environment. To compensate for the limitations of any single sensor, measurements from multiple sensors can be combined in a way that yields a better state estimate of the dynamic system. The process of combining data from multiple sensors to reduce uncertainty or improve accuracy in the state estimation of a dynamic system is called Sensor Fusion.
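To illustrate why combining sensors reduces uncertainty, here is a small sketch that fuses two independent Gaussian estimates of the same quantity by weighting each with the inverse of its variance. The function name `fuse` and the numeric values are hypothetical; they are not taken from the experiments in this article.

```python
def fuse(mean_a, var_a, mean_b, var_b):
    """Combine two independent Gaussian estimates of the same quantity."""
    # The less noisy estimate (smaller variance) receives the larger weight.
    weight_a = var_b / (var_a + var_b)
    fused_mean = weight_a * mean_a + (1.0 - weight_a) * mean_b
    # The fused variance is smaller than either input variance.
    fused_var = var_a * var_b / (var_a + var_b)
    return fused_mean, fused_var

# Hypothetical yaw estimates in radians: the first source is noisier than the second.
yaw, var = fuse(mean_a=0.50, var_a=0.04, mean_b=0.42, var_b=0.01)
```

The fused variance is always below the smaller of the two input variances, which is the sense in which fusion "reduces uncertainty".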

Extended Kalman Filter

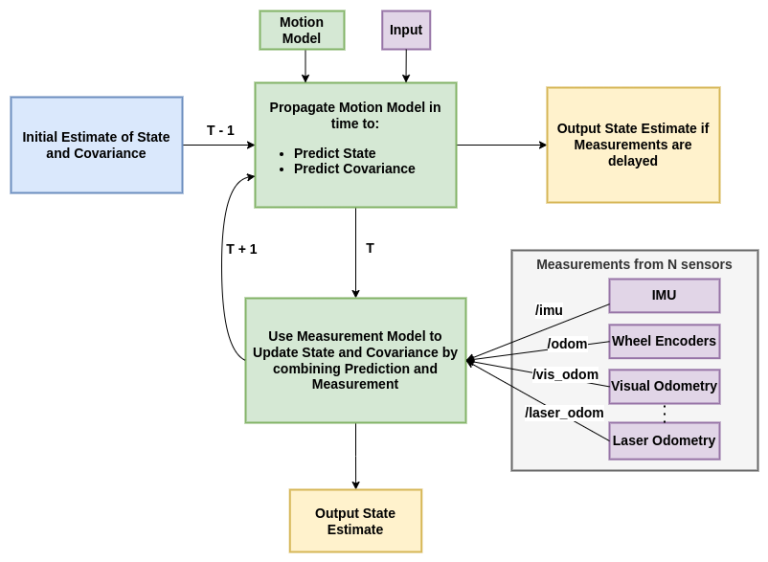

The Extended Kalman Filter (EKF) is a popular method for fusing data from multiple sensors. It is an extension of the well-known (linear) Kalman Filter algorithm, which is used for state estimation of linear dynamic systems. An underlying assumption in Kalman filters is that the state is described by a Gaussian distribution, and that the sensor noise and process noise are zero-mean, independent Gaussians. Figure 1 shows a block diagram of how sensor fusion can be achieved using a prediction and update cycle in Kalman Filters. Let us go through the steps to see how this works:

- We start with some initial state estimate, its corresponding covariance matrix, and a motion model of our system.

- Once we receive some input, we run our prediction step to compute our new state estimate and covariance by propagating our system forward in time using our motion model and the given input.

- When we receive measurements from different sensors, such as an Inertial Measurement Unit (IMU) and wheel encoders, we update our state estimate and covariance by combining the previous prediction with the new measurements.

- The prediction and update steps keep running for the duration of our application.
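The cycle described in the steps above can be sketched with a one-dimensional linear Kalman filter. This is a deliberately reduced illustration, assuming a scalar position state, a motion model of the form x' = x + u, and a sensor that measures position directly; the noise variances and readings are made-up values.

```python
def predict(mean, var, control, process_var):
    """Prediction step: propagate the estimate with the motion model x' = x + u."""
    # Uncertainty grows because the motion model is not perfect.
    return mean + control, var + process_var

def update(mean, var, measurement, measurement_var):
    """Update step: blend the prediction with a direct measurement of x."""
    gain = var / (var + measurement_var)           # Kalman gain, between 0 and 1
    new_mean = mean + gain * (measurement - mean)  # correct by the weighted residual
    new_var = (1.0 - gain) * var                   # uncertainty shrinks after a measurement
    return new_mean, new_var

mean, var = 0.0, 1.0               # initial state estimate and its variance
controls = [1.0, 1.0, 1.0]         # commanded displacement per step (m), hypothetical
measurements = [1.1, 1.9, 3.05]    # noisy position readings (m), hypothetical

for u, z in zip(controls, measurements):
    mean, var = predict(mean, var, u, process_var=0.05)
    mean, var = update(mean, var, z, measurement_var=0.1)
```

In the multi-dimensional case the scalars become a state vector and covariance matrix, but the structure of the loop stays the same.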

When working with a nonlinear system like an AMR, we need to make a few changes to the Kalman Filter algorithm. A Gaussian passed through a nonlinear function is generally no longer Gaussian, so to keep our state representation Gaussian after each prediction and update step, we need to linearize the motion and measurement models. This is done by using a first-order Taylor series expansion around the current estimate to approximate the nonlinear models as linear ones. This extension of the Kalman Filter is called the Extended Kalman Filter.
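A rough sketch of the linearized prediction step is given below. It assumes a planar robot with state (x, y, yaw) driven by a forward speed `v` and a yaw rate `omega`; this simplified model, the function name `ekf_predict`, and the noise values are illustrative assumptions and not the model used by any particular package. The update step linearizes the measurement model in the same way.

```python
import numpy as np

def ekf_predict(state, cov, v, omega, dt, process_noise):
    """EKF prediction for an assumed planar model with state [x, y, yaw]."""
    x, y, yaw = state

    # Nonlinear motion model f(state, input).
    predicted = np.array([
        x + v * dt * np.cos(yaw),
        y + v * dt * np.sin(yaw),
        yaw + omega * dt,
    ])

    # Jacobian of f with respect to the state, evaluated at the current
    # estimate. This is the first-order Taylor linearization.
    F = np.array([
        [1.0, 0.0, -v * dt * np.sin(yaw)],
        [0.0, 1.0,  v * dt * np.cos(yaw)],
        [0.0, 0.0,  1.0],
    ])

    # Propagate the covariance through the linearized model.
    predicted_cov = F @ cov @ F.T + process_noise
    return predicted, predicted_cov

state = np.array([0.0, 0.0, 0.0])
cov = 0.01 * np.eye(3)
Q = np.diag([1e-4, 1e-4, 1e-5])   # hypothetical process noise
state, cov = ekf_predict(state, cov, v=0.2, omega=0.1, dt=0.1, process_noise=Q)
```

The off-diagonal terms that appear in the covariance after prediction capture how heading uncertainty leaks into position uncertainty.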

Sensor Fusion in ROS

One of the most popular ROS packages for sensor fusion is robot_localization. It is a general-purpose state estimation package with implementations of the Extended Kalman Filter and of another Kalman Filter variant, the Unscented Kalman Filter. It has the flexibility to fuse many sensors, and it outputs a 15-dimensional state estimate. The state estimate is composed of position (x, y, z), orientation (roll, pitch, yaw), linear velocity, angular velocity, and linear acceleration. The motion model provided by this package is omni-directional. It can be used for both global and local state estimation, given relevant data. More details about the package can be found at: http://docs.ros.org/en/noetic/api/robot_localization/html/index.html
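In robot_localization, each sensor input is paired with a 15-element boolean vector that selects which state variables from that sensor are fused, in the order x, y, z, roll, pitch, yaw, followed by their velocities and then the linear accelerations. The sketch below builds such vectors by name. The helper `make_config`, the short field names, the topic names `/odom` and `/imu`, and the particular choice of fused variables are assumptions for illustration, not the configuration used in our experiments.

```python
# Short names for the 15 state variables, in the order the package expects.
STATE_FIELDS = [
    "x", "y", "z",
    "roll", "pitch", "yaw",
    "vx", "vy", "vz",
    "vroll", "vpitch", "vyaw",
    "ax", "ay", "az",
]

def make_config(fused_fields):
    """Return the 15-element boolean mask for the given state variable names."""
    unknown = set(fused_fields) - set(STATE_FIELDS)
    if unknown:
        raise ValueError(f"unknown state fields: {sorted(unknown)}")
    return [name in fused_fields for name in STATE_FIELDS]

# Hypothetical choice for a planar robot: velocities from wheel odometry,
# yaw rate and forward acceleration from the IMU.
ekf_params = {
    "frequency": 30.0,
    "two_d_mode": True,
    "odom0": "/odom",
    "odom0_config": make_config({"vx", "vy", "vyaw"}),
    "imu0": "/imu",
    "imu0_config": make_config({"vyaw", "ax"}),
}
```

A dictionary like this could be passed as node parameters from a launch file or written out as the equivalent YAML; which variables to fuse from each sensor depends on the robot and should be tuned per platform.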

Experiments in Gazebo

Gazebo is a ROS 2-enabled simulation environment that offers a lot of flexibility to test robotic systems. It lets us introduce sensor noise, try different friction coefficients, and reduce wheel encoder accuracy, so that we can replicate realistic scenarios that would affect the state estimation of an AMR in the real world.

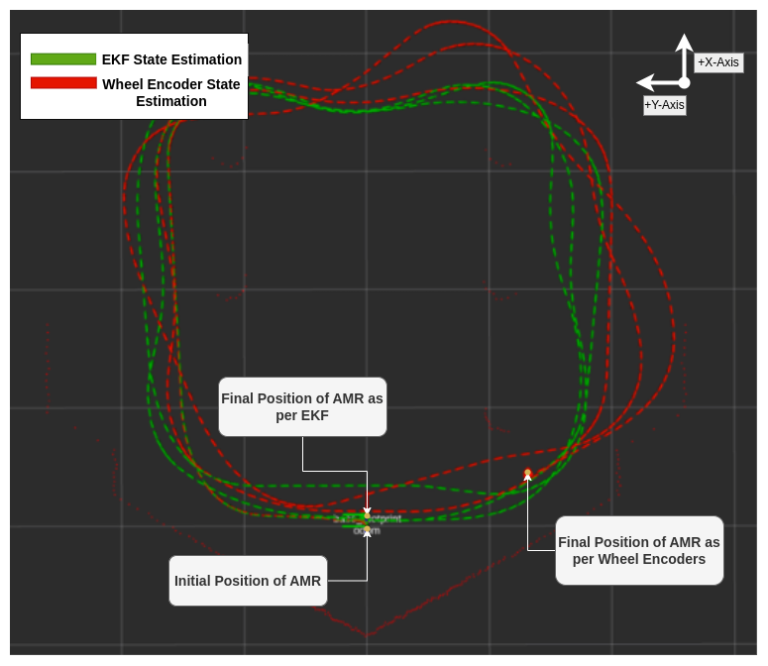

To quantify the performance of sensor fusion, we can run a loop closure test, which consists of driving the AMR along an arbitrary closed trajectory so that its initial and final locations are the same. We can then plot the state estimate produced by the EKF after fusing IMU and wheel encoder data and compare it with the state estimate obtained from the raw wheel encoders alone. Ideally, at the end of the run, the estimated state of our AMR should report x, y, and yaw as (0, 0, 0), respectively.
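One way to score such a test is to compare the first and last estimated poses, as sketched below. The function name `loop_closure_error` and the example poses are hypothetical. The yaw difference is wrapped so that headings on either side of the ±180 degree boundary are not reported as a large error.

```python
import math

def loop_closure_error(start_pose, end_pose):
    """Return (dx, dy, dyaw, distance) between two (x, y, yaw) poses.

    Positions are in metres and yaw is in radians.
    """
    dx = end_pose[0] - start_pose[0]
    dy = end_pose[1] - start_pose[1]

    # Wrap the heading difference into (-pi, pi].
    raw = end_pose[2] - start_pose[2]
    dyaw = math.atan2(math.sin(raw), math.cos(raw))

    return dx, dy, dyaw, math.hypot(dx, dy)

# Hypothetical estimate at the end of a closed loop that started at the origin.
dx, dy, dyaw, distance = loop_closure_error((0.0, 0.0, 0.0), (0.3, -0.4, 0.1))
```

A perfect estimator would return zero for all four values on a closed loop.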

We performed various experiments using the Turtlebot3 (TB3) packages. One such example is given in Figure 2. Here, we teleoperate TB3 around the Turtlebot3 world multiple times and finally bring it back to the starting position. As can be seen, the trajectory estimated by the EKF, shown in green, correctly indicates that the AMR is back near its initial position, whereas the estimate from the wheel encoders alone, shown in red, is off by around 1.4 meters. The raw wheel encoder data gives a final position error of 0.405m, -1.36m, and 29.72 degrees in x, y, and yaw, respectively. In contrast, the EKF estimate has errors of 0.103m, -0.0165m, and 0.0187 degrees in x, y, and yaw. The overall percentage improvement in Euclidean distance with respect to the initial position was about 92.64%, from 1.42m when using just wheel encoder data to 0.10m after using EKF.

Figure 2: Loop closure test in Gazebo using Turtlebot 3
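The distance figures quoted for this run can be reproduced from the reported x and y errors with a few lines of arithmetic. The helper name `improvement_percent` is ours; the input numbers are the ones stated above, and the result agrees with the reported improvement of about 92.64% to within rounding.

```python
import math

def improvement_percent(raw_xy_error, fused_xy_error):
    """Return (raw distance, fused distance, percentage improvement)."""
    raw = math.hypot(*raw_xy_error)
    fused = math.hypot(*fused_xy_error)
    return raw, fused, 100.0 * (raw - fused) / raw

# Final (x, y) errors in metres: wheel encoders only versus the EKF estimate.
raw, fused, gain = improvement_percent((0.405, -1.36), (0.103, -0.0165))
print(f"{raw:.2f} m -> {fused:.2f} m, {gain:.1f}% improvement")
# prints: 1.42 m -> 0.10 m, 92.6% improvement
```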

Experiments on AMR

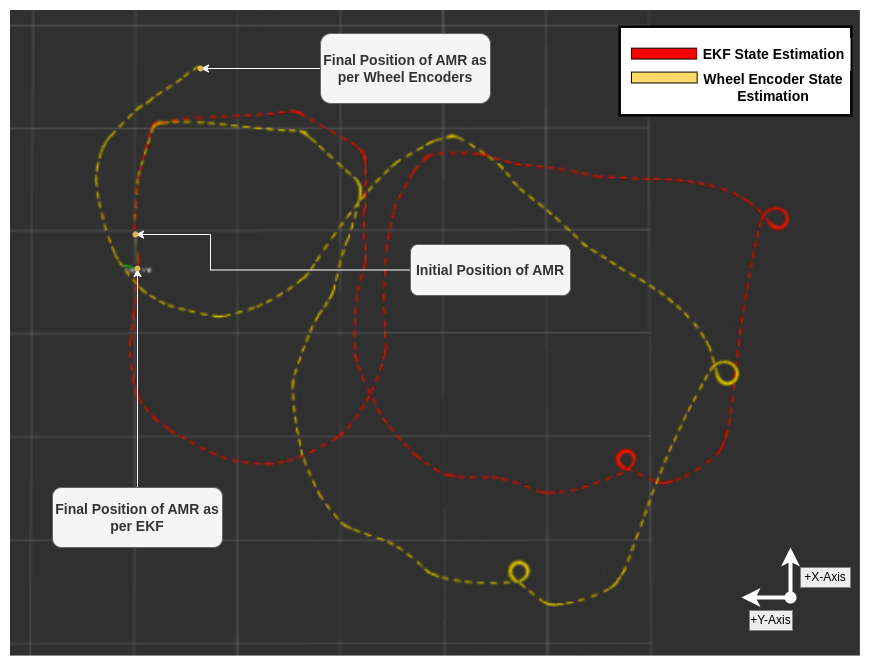

The experiments performed in Gazebo can also be extended to actual AMRs. We conducted these experiments on our office premises and were able to achieve reliable results by fusing our AMR’s IMU and wheel encoder data. An example run is given in Figure 3. When using only the wheel encoder data, the estimated state reported an error of 1.58m in the x-direction, -0.69m in the y-direction, and a yaw error of 55 degrees. The EKF estimate was better, with errors of -0.35m, -0.033m, and -5.9 degrees in x, y, and yaw, respectively. The overall percentage improvement in Euclidean distance with respect to the origin was approximately 78.9%, going from 1.699m to 0.35m after implementing the EKF.

Figure 3: Loop closure test on real AMR in office premises.

Conclusion

State estimation is a crucial step in providing a reliable odometry source to other modules of our AMR, such as navigation and mapping. Through our experiments, we have demonstrated that using sensor fusion techniques enables us to estimate the state of our AMR more accurately, both in simulation and in the real world. We employed Extended Kalman Filters, which are a widely used method for sensor fusion in non-linear systems. In Part 2 of this series, we will discuss how sensor fusion can enhance the navigation and mapping capabilities of our AMR.

Authors

Harsh Vardhan Singh

Solutions Engineer, eInfochips (An Arrow Company)

Harsh Vardhan Singh holds an M.S. in computer science from Georgia Institute of Technology with Machine Learning as the specialization. He is a Solutions Research Engineer who is currently working on Sensor Fusion and the Navigation Stack within the ROS 2 environment for an Autonomous Mobile Robot proof-of-concept. As of March 2023, Harsh has seven years of industrial experience, with an initial six years spent in Research and Innovation Labs. He has worked on multiple projects, such as Autonomous Forklifts for Pallet Picking, Fleet Management for Multi-Robot-Systems, Bin Picking, Automated Palletization, and Online Bin Packing, which involved the application of Computer Vision, AI, Deep Learning, Machine Learning, Reinforcement Learning, and Robotics.

Mayank Mukesh

Embedded Engineer, eInfochips (An Arrow Company)

Mayank Mukesh completed Electronics & Electrical Engineering in 2022 with Hons in Power electronics and specialization in Electric Vehicles. He is an Embedded Engineer working on various aspects of Autonomous Mobile Robots like control systems, navigation, and sensor fusion within the ROS 2 framework. He is also adept in programming languages like C, C++, and Python.

Anisha Thakkar

Embedded Engineer, eInfochips (An Arrow Company)

Anisha Thakkar has completed her B.Tech in Electronics and Communications and Minor Specialization in Computer Science Engineering from Nirma University, Ahmedabad in 2022. She is currently working in the Robotics department with a focus on ROS 2, VSLAM and AI-ML algorithms. She also has working experience on various projects related to Image Processing, Embedded Systems, RTOS and Machine Learning.