Deep Learning has emerged as one of the most exciting subfields of Machine Learning, and it is becoming an integral part of various industries. In simple terms, a Deep Learning model is a computational model with multiple layers (inspired by how the human brain functions) that learns to perform tasks such as classification, segmentation, and language translation from tabular data, images, text, audio, video, and other types of data.

Computational models are trained with the help of relevant training data. Deep Learning models require a large amount of training data and high computing power, but they are so powerful that on some tasks they can be more accurate than humans. For example, an AI-based automated visual evaluation outperformed experts in identifying cervical precancer.

Deep Learning models are of different kinds, out of which the three main types are as follows:

- Artificial Neural Networks (ANN)

- Convolutional Neural Networks (CNN)

- Recurrent Neural Networks (RNN)

So what is a Recurrent Neural Network?

Before we get to know Recurrent Neural Networks (RNN), we need to understand why they are needed. One of the biggest limitations of a traditional (feedforward) neural network is that it treats every input independently and keeps no record of the inputs it has already processed. This makes it hard to capture patterns and correlations that span several steps of a sequence. What is needed is a computational model that has a “memory” of what it has seen before.

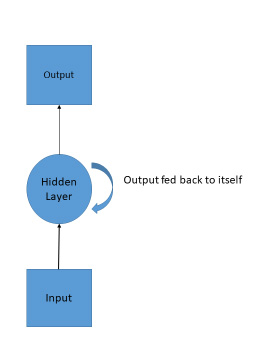

The main concept behind the Recurrent Neural Network (RNN) is that it uses sequential information: the output of the previous step is fed in along with the input of the current step. In short, the inputs in the series depend on each other to produce the final output, unlike traditional neural networks, where inputs and outputs are treated as independent of each other. For example, the sentence above has meaning because its words are arranged in a specific order; if we move the words out of that order, the meaning changes. The sequence matters.

In an RNN, if you have to predict the next word in a sentence (the output), you need to know the words that came before it. This is possible due to the “hidden state”, which carries information about the data already seen in the sequence. RNNs are suitable for sequential data such as audio (speech recognition), video, time series, and text.
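The hidden-state idea described above can be sketched in a few lines of plain Python. This is a minimal illustration, not a production implementation: the weight names (`W_xh`, `W_hh`, `b_h`), the `tanh` activation, the layer sizes, and the random untrained weights are all assumptions chosen for the example.

```python
import math
import random

def rnn_step(x, h_prev, W_xh, W_hh, b_h):
    """One recurrent step: h_t = tanh(W_xh @ x_t + W_hh @ h_prev + b_h)."""
    h_new = []
    for i in range(len(b_h)):
        total = b_h[i]
        total += sum(W_xh[i][j] * x[j] for j in range(len(x)))            # current input
        total += sum(W_hh[i][k] * h_prev[k] for k in range(len(h_prev)))  # previous state
        h_new.append(math.tanh(total))
    return h_new

def rnn_forward(inputs, W_xh, W_hh, b_h):
    """Apply the same step to every element, carrying the hidden state along."""
    h = [0.0] * len(b_h)  # initial hidden state: nothing seen yet
    states = []
    for x in inputs:
        h = rnn_step(x, h, W_xh, W_hh, b_h)
        states.append(h)
    return states

def random_matrix(rows, cols, rng, scale=0.5):
    """Hypothetical helper: small random weights (untrained)."""
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]

rng = random.Random(0)
input_size, hidden_size = 3, 4
W_xh = random_matrix(hidden_size, input_size, rng)
W_hh = random_matrix(hidden_size, hidden_size, rng)
b_h = [0.0] * hidden_size

# Three one-hot "words"; the same words in reverse order give a different final state.
sequence = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
states = rnn_forward(sequence, W_xh, W_hh, b_h)
reordered_states = rnn_forward(sequence[::-1], W_xh, W_hh, b_h)
print(states[-1])
print(reordered_states[-1])
```

Because each hidden state depends on the previous one, feeding the same inputs in a different order produces a different final state, which is how the network reflects that the sequence matters.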

What Can a Recurrent Neural Network Do?

RNNs have found great success in NLP. LSTMs (Long Short-Term Memory networks) are the most commonly used type of RNN and are considered better at capturing long-term dependencies. An LSTM has the same recurrent structure as a basic RNN but uses gates to compute its hidden state. However, we will not be delving into LSTMs for now. Let us discuss what RNNs can accomplish.

Speech Recognition: Speech recognition is becoming an integral part of human-machine interaction, and many consumer electronic devices now ship with built-in voice assistants. The key is to develop a model that is lightweight and still achieves high accuracy. A good way to start applying Deep Learning to speech recognition is with the Google Speech Recognition dataset. The input is a sequence of acoustic signals from a sound wave, and from it the model predicts a sequence of phonetic segments together with their probabilities.

Language Models and Text Generation: Given a sequence of words, we want to predict the probability of each possible next word based on the previous words. A language model measures how likely a sentence is, which is an important input to Machine Translation. In other words, the model is fed a sequence of words (the input) and produces a sequence of predicted words (the output). When you type on your phone and see three suggested words, those suggestions are the product of a language model. A side effect of being able to predict the next word is that we get a generative model, which allows us to generate new text by sampling from the output probabilities.
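To make the last step concrete, here is a small sketch of turning a model's output scores into next-word suggestions and a sampled word. The vocabulary and the scores are made-up placeholders standing in for the output of a trained language model, and the helper names are hypothetical.

```python
import math
import random

def softmax(scores):
    """Turn arbitrary scores into probabilities that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def top_k(vocab, probs, k=3):
    """Return the k most probable words, like keyboard suggestions."""
    ranked = sorted(zip(vocab, probs), key=lambda pair: pair[1], reverse=True)
    return [word for word, _ in ranked[:k]]

def sample_word(vocab, probs, rng):
    """Draw one word at random, weighted by its probability."""
    return rng.choices(vocab, weights=probs, k=1)[0]

# Assumed example: scores a model might assign to the word following "how are".
vocab = ["you", "the", "going", "today", "fine"]
scores = [2.0, 0.5, 1.0, 0.1, 1.5]

probs = softmax(scores)
suggestions = top_k(vocab, probs, k=3)
rng = random.Random(0)
sampled = sample_word(vocab, probs, rng)

print(suggestions)  # ['you', 'fine', 'going']
print(sampled)
```

Picking the top three words gives fixed suggestions, while sampling occasionally picks less likely words, which is what lets a generative model produce varied text.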

Machine Translation: This is similar to language modeling in that the input is a sequence of words in the source language. The difference is that the output is a sequence of words in the target language, the language we want to translate into. Output generation starts only after the model has received the complete input (all words in sequence), because an accurate translation may depend on information from the whole input sentence.

Video Processing: When you have a series of sequential images, such as a video, classification can be done with an RNN. However, many practitioners use a combination of a CNN and an RNN. In that case, you can use a CNN that has been pre-trained on a classification task such as ImageNet. You feed each frame through the CNN, and the output of its last layer becomes the input to the corresponding time step of the RNN. You can then train the entire network with the loss function defined on the RNN's output. RNNs can also be used to perform video captioning.
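The data flow of the CNN-plus-RNN combination can be sketched as follows. This is only a structural illustration of the forward pass: `stand_in_cnn_features` is a hypothetical placeholder that averages pixel rows instead of running a real pre-trained CNN, the weights are random and untrained, and the training step with a loss on the RNN output is omitted.

```python
import math
import random

def stand_in_cnn_features(frame):
    """Placeholder for a pre-trained CNN's last layer: one number per pixel row."""
    return [sum(row) / len(row) for row in frame]

def rnn_step(x, h_prev, W_xh, W_hh):
    """h_t = tanh(W_xh @ x_t + W_hh @ h_prev), written with plain lists."""
    return [
        math.tanh(
            sum(w * v for w, v in zip(W_xh[i], x))
            + sum(w * v for w, v in zip(W_hh[i], h_prev))
        )
        for i in range(len(W_hh))
    ]

def classify_video(frames, W_xh, W_hh, W_out):
    """Feed per-frame features to the RNN, then score classes from the final state."""
    h = [0.0] * len(W_hh)
    for frame in frames:
        h = rnn_step(stand_in_cnn_features(frame), h, W_xh, W_hh)
    scores = [sum(w * v for w, v in zip(row, h)) for row in W_out]
    return scores.index(max(scores)), scores

rng = random.Random(1)
feature_size, hidden_size, num_classes = 4, 3, 2

def rand_matrix(rows, cols):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

W_xh = rand_matrix(hidden_size, feature_size)
W_hh = rand_matrix(hidden_size, hidden_size)
W_out = rand_matrix(num_classes, hidden_size)

# A toy "video": 5 frames, each a 4x4 grid of pixel intensities.
video = [[[rng.random() for _ in range(4)] for _ in range(4)] for _ in range(5)]
label, scores = classify_video(video, W_xh, W_hh, W_out)
print(label, scores)
```

With untrained weights the predicted label is meaningless; the point is only that one feature vector per frame enters the RNN at each time step and the classification is read from the final hidden state.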

Advantages of RNN

- RNNs are successful at time-series prediction because they can remember the data they have already been fed. However, an RNN can be difficult to train.

- It can process inputs of any length.

- Even when the input size increases, the model does not increase in size.

- Weights are shared across time steps.
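The last three points can be seen in a deliberately tiny sketch: a scalar RNN with three parameters that processes a 2-step and a 50-step sequence with exactly the same weights. The parameter values and the `tanh` activation are arbitrary assumptions for illustration.

```python
import math

def run_rnn(sequence, w_x, w_h, b):
    """Scalar RNN: h_t = tanh(w_x * x_t + w_h * h_prev + b), same weights every step."""
    h = 0.0
    for x in sequence:
        h = math.tanh(w_x * x + w_h * h + b)
    return h

# Assumed, untrained parameter values.
params = {"w_x": 0.8, "w_h": 0.5, "b": 0.1}

short_result = run_rnn([1.0, 0.5], **params)                    # 2 time steps
long_result = run_rnn([0.1 * i for i in range(50)], **params)   # 50 time steps
num_params = len(params)

print(num_params)  # 3, regardless of sequence length
print(short_result, long_result)
```

The loop runs once per input element, so longer inputs cost more computation, but the number of parameters stays fixed because every step reuses the same weights.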


In the last few years, the machines around us have become more intelligent, allowing businesses to disrupt the market. eInfochips, with its vast experience and expertise in AI and ML services, can help businesses across the world build highly complex intelligent products. To know more about our AI and ML capabilities, get in touch with us today.