What is real-time video stitching?

Real-time video stitching is the process of merging multiple video streams into a seamless panoramic or wide-angle view with very low latency. It has gained prominence across many domains because it delivers a more complete view of a scene than any single camera can. It builds on image stitching but is more demanding: frames from all cameras must be synchronized, stitching artifacts must be compensated from frame to frame, and each frame must be processed within a tight time budget.

Where is Video Stitching Used?

Virtual and Mixed Reality (VR/MR)

- Gaming, virtual storytelling in tourist destinations and theme parks

Surveillance and Security

- Smart city applications like traffic monitoring

- Big spaces such as train stations, shopping malls, airports, and stadiums

- Disaster response, rescue operations, and border security

Broadcasting

- Sports coaching

- Better viewing experience for live streaming of concerts, sports, weddings, and conferences

Automotive

- Advanced Driver-Assistance Systems (ADAS) for 360° views

- Improved parking and navigation assistance

Conventional Video Stitching Techniques: Overview and Challenges

| Technique | Description | Challenges |
|---|---|---|
| Feature Matching | Detects and matches key features with algorithms such as SIFT or SURF, typically using RANSAC to reject outlier matches. | Struggles with dynamic scenes and low-texture areas. |
| Calibration-based | Aligns frames using parameters obtained from known calibration patterns. | Requires a precise setup; not ideal for moving camera rigs. |
| Projection-based | Maps frame pixels onto a geometric surface (e.g., a plane, cylinder, or sphere). | Introduces distortions. |
| Blending-based | Merges overlapping regions by blending pixels. | May cause ghosting or blur. |
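The blending-based approach in its simplest form, linear feathering (a position-dependent alpha blend), can be sketched as follows. This is a minimal one-dimensional illustration on a single grayscale row, assuming the two rows are already aligned and the overlap width is known; the function names are hypothetical, and real pipelines blend 2-D warped frames, often with multi-band (Laplacian) blending instead.

```python
from typing import List, Sequence


def feather_weights(overlap_width: int) -> List[float]:
    """Weights for the LEFT image across the overlap, ramping linearly from 1.0 to 0.0.

    The right image receives the complementary weight (1 - w) at each pixel.
    """
    if overlap_width == 1:
        return [0.5]  # a single shared pixel is simply averaged
    return [1.0 - i / (overlap_width - 1) for i in range(overlap_width)]


def feather_blend_row(left_row: Sequence[float], right_row: Sequence[float],
                      overlap_width: int) -> List[float]:
    """Join two aligned grayscale rows whose last/first `overlap_width` pixels overlap."""
    if not 0 < overlap_width <= min(len(left_row), len(right_row)):
        raise ValueError("overlap_width must be between 1 and the shorter row length")
    weights = feather_weights(overlap_width)
    left_overlap = left_row[len(left_row) - overlap_width:]
    right_overlap = right_row[:overlap_width]
    blended = [w * a + (1.0 - w) * b for w, a, b in zip(weights, left_overlap, right_overlap)]
    # Non-overlapping parts are copied unchanged on either side of the blended seam.
    return list(left_row[:len(left_row) - overlap_width]) + blended + list(right_row[overlap_width:])


# Usage: two flat rows of different brightness with a 3-pixel overlap.
print(feather_blend_row([10, 10, 10, 10], [20, 20, 20, 20], 3))
# [10, 10.0, 15.0, 20.0, 20]
```

Because the weights change gradually, the brightness step at the seam disappears, but any misalignment inside the overlap is averaged rather than corrected, which is why blending alone can leave the ghosting or blur listed in the table.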

How Does a Typical Video Stitching Workflow Work?

- Data Acquisition - Capture video from multiple cameras.

- Preprocessing - Synchronize the streams in time and identify overlapping image pairs.

- Feature Matching - Detect and match key points between overlapping frames.

- Image Alignment - Estimate homography transformations from the matches and warp the frames into a common coordinate system.

- Blending - Merge the warped frames and smooth the seams.
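The Image Alignment step comes down to how a 3x3 homography maps a pixel: the point is lifted to homogeneous coordinates, multiplied by H, and divided by the third component. The plain-Python sketch below assumes H has already been estimated (for example with RANSAC over the matched key points); the helper names and the example matrix are hypothetical, and libraries such as OpenCV warp whole frames rather than individual points.

```python
from typing import List, Sequence, Tuple

Matrix3x3 = Sequence[Sequence[float]]


def apply_homography(h: Matrix3x3, x: float, y: float) -> Tuple[float, float]:
    """Map pixel (x, y) through a 3x3 homography H using homogeneous coordinates."""
    # [x', y', w]^T = H [x, y, 1]^T
    xh = h[0][0] * x + h[0][1] * y + h[0][2]
    yh = h[1][0] * x + h[1][1] * y + h[1][2]
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    if abs(w) < 1e-12:
        raise ValueError("point maps to infinity under this homography")
    # Perspective divide: back from homogeneous to image coordinates.
    return xh / w, yh / w


def warped_corners(h: Matrix3x3, width: int, height: int) -> List[Tuple[float, float]]:
    """Project a frame's four corners; their bounding box sizes the panorama canvas."""
    corners = [(0, 0), (width - 1, 0), (width - 1, height - 1), (0, height - 1)]
    return [apply_homography(h, cx, cy) for cx, cy in corners]


# Usage: a pure translation of 100 px to the right (hypothetical H).
H_shift = [[1.0, 0.0, 100.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
print(warped_corners(H_shift, 640, 480))
# [(100.0, 0.0), (739.0, 0.0), (739.0, 479.0), (100.0, 479.0)]
```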

An OpenCV-based stitching example is available in the OpenCV sample stitching source code. For real-time performance, these steps need to be accelerated on a GPU, for example using OpenCL.

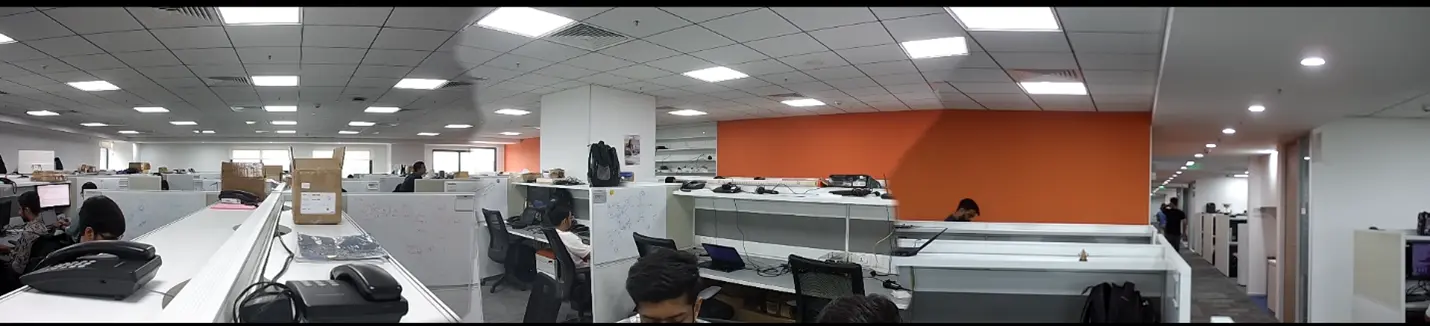

The image above shows the results of multi-image stitching (based on dynamic seamline stitching) implemented using the CVFlow SDK on the Ambarella CV2 chestnut development platform. Some shadow artifacts are visible on the ceiling in this output.

What Challenges Arise in Video Stitching Applications, and How Can You Fix Them?

| Challenge | Solution |
|---|---|
| Stream Synchronization | Use timestamps or strobe/sync signals. |
| Image Alignment | Apply homography transformations. |
| Stitching Artifacts | Use alpha or Laplacian blending, motion compensation, and foreground detection. Calibrate cameras and stabilize video. |
| Latency | Use hardware acceleration (e.g., OpenCL, CUDA) for faster processing. |
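Timestamp-based synchronization can be sketched as a nearest-timestamp match: for every frame of a reference camera, pick the frame from each other camera whose capture timestamp is closest, and drop the set if any stream has no frame within a tolerance. This sketch assumes all timestamps come from a common clock (for example, one disciplined by a hardware sync signal) and are sorted; the function names and the tolerance value are hypothetical.

```python
from bisect import bisect_left
from typing import List, Optional, Sequence


def nearest_frame(timestamps_ms: Sequence[float], target_ms: float,
                  tolerance_ms: float) -> Optional[int]:
    """Index of the frame closest to target_ms, or None if it is further than tolerance_ms.

    timestamps_ms must be sorted in ascending order.
    """
    if not timestamps_ms:
        return None
    i = bisect_left(timestamps_ms, target_ms)
    # The nearest timestamp is either at the insertion point or just before it.
    candidates = [j for j in (i - 1, i) if 0 <= j < len(timestamps_ms)]
    best = min(candidates, key=lambda j: abs(timestamps_ms[j] - target_ms))
    if abs(timestamps_ms[best] - target_ms) > tolerance_ms:
        return None
    return best


def match_streams(reference_ms: Sequence[float], others_ms: Sequence[Sequence[float]],
                  tolerance_ms: float) -> List[List[int]]:
    """For each reference frame, pick one frame index from every other stream.

    Reference frames lacking a close-enough partner in any stream are dropped.
    """
    groups = []
    for ref_idx, t in enumerate(reference_ms):
        picks = [nearest_frame(ts, t, tolerance_ms) for ts in others_ms]
        if all(p is not None for p in picks):
            groups.append([ref_idx] + picks)
    return groups


# Usage: a reference camera at ~30 FPS and a second camera with jittery timestamps.
print(match_streams([0.0, 33.3, 66.7, 100.0], [[1.0, 35.0, 70.0, 140.0]], tolerance_ms=5.0))
# [[0, 0], [1, 1], [2, 2]]
```

Keeping the tolerance below half the reference frame period (under about 16.7 ms at 30 FPS) guarantees that no frame from another stream is paired with two different reference frames.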

Current Limitations and AI Integration

Most current systems rely on pre-calibrated camera parameters and conventional algorithms, which often lose accuracy in dynamic scenarios such as ADAS and surveillance, where objects move and lighting changes. Integrating AI-based methods can improve robustness in these conditions.

How Does AI Enhance Video Stitching?

AI models can adapt to complex scenarios like moving objects, lighting changes, and parallax effects. However, they require large datasets and high-performing embedded hardware.

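Commonly used AI models in video stitching applications include:

- CNNs (convolutional neural networks) – feature extraction and alignment

- GANs (generative adversarial networks) – blending and artifact removal

- RNNs (recurrent neural networks) – maintaining temporal consistency and compensating artifacts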

Popular Datasets

- Stanford 360 Video Dataset, Deep360 Dataset, SUN360 Dataset

Retraining standard models on custom, application-specific datasets may be needed to improve the accuracy of AI-based stitching. If the system is connected to the cloud, online or incremental learning can further refine the models over time, and with them the stitching quality.

Deploying AI on the Edge

Deploying AI on embedded platforms requires optimization considerations like:

- Model Quantization: reduces model size and inference time, usually with minimal loss of accuracy

- Edge AI Frameworks: effective use of inference runtimes such as TensorRT, TensorFlow Lite (TFLite), and ONNX Runtime

- Hardware and Software Component Selection: lighter models and SoCs with dedicated neural or vector processing units

- Hardware Acceleration: offloading compute-heavy stages to GPUs, DSPs, or NPUs
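Model quantization in its simplest form maps 32-bit floating-point weights to 8-bit integers with a single scale factor, cutting weight storage by 4x. The sketch below shows symmetric per-tensor int8 quantization only to illustrate the arithmetic; frameworks such as TensorRT or TFLite also calibrate activation ranges and may use per-channel scales and zero points. The function names and sample weights are hypothetical.

```python
from typing import List, Sequence

INT8_LIMIT = 127  # symmetric range [-127, 127]; -128 is left unused


def symmetric_int8_scale(weights: Sequence[float]) -> float:
    """Per-tensor scale chosen so the largest |weight| maps to +/-127."""
    max_abs = max(abs(w) for w in weights)
    return max_abs / INT8_LIMIT if max_abs > 0 else 1.0


def quantize(weights: Sequence[float], scale: float) -> List[int]:
    """float -> int8: divide by the scale, round to nearest, clamp to the int8 range."""
    return [max(-INT8_LIMIT, min(INT8_LIMIT, round(w / scale))) for w in weights]


def dequantize(q: Sequence[int], scale: float) -> List[float]:
    """int8 -> approximate float, e.g. for measuring quantization error."""
    return [v * scale for v in q]


weights = [-0.82, -0.1, 0.0, 0.33, 1.27]
scale = symmetric_int8_scale(weights)
q = quantize(weights, scale)
restored = dequantize(q, scale)
max_err = max(abs(a - b) for a, b in zip(weights, restored))
print(q, f"max error {max_err:.4f} (bound: scale/2 = {scale / 2:.4f})")
```

Round-to-nearest keeps the per-weight error within half the scale, which is the source of the "minimal degradation in accuracy" when weight ranges are well behaved; outlier weights inflate the scale and the error for every other weight.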

Choosing the right System-on-Chip (SoC) depends on resolution, power budget, form factor, and processing needs.

| SoC | Tools/Frameworks |
|---|---|
| NVIDIA Jetson Nano/Xavier/Orin | CUDA, VisionWorks, DeepStream |
| Qualcomm QCS Series | OpenCL, OpenGL ES, Hexagon SDK, GStreamer |
| TI AM62A/67A/68A | OpenGL ES 3.x, OpenCL, Vulkan API |
| Ambarella CV22/72/5S | CVFlow SDK |

Novatek, Amlogic, and Rockchip SoCs can also drive such an application.
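Before comparing SoCs, a back-of-the-envelope estimate makes "processing needs" concrete: the per-frame time budget and the raw input rate the chip must ingest. The sketch assumes YUV 4:2:0 camera frames (1.5 bytes per pixel) and counts camera input only, not intermediate buffers or the stitched output; the function name and the four-camera 1080p rig are hypothetical.

```python
from typing import Dict


def stitching_budget(num_cameras: int, width: int, height: int, fps: float,
                     bytes_per_pixel: float = 1.5) -> Dict[str, float]:
    """Rough input load for a real-time stitcher.

    bytes_per_pixel=1.5 assumes YUV 4:2:0 (e.g. NV12) camera frames.
    """
    frame_budget_ms = 1000.0 / fps  # each pipeline stage must finish within this to sustain the frame rate
    pixels_per_s = num_cameras * width * height * fps
    return {
        "frame_budget_ms": frame_budget_ms,
        "input_megapixels_per_s": pixels_per_s / 1e6,
        "input_megabytes_per_s": pixels_per_s * bytes_per_pixel / 1e6,
    }


# Hypothetical rig: four 1080p cameras at 30 FPS.
print(stitching_budget(4, 1920, 1080, 30))
```

For this rig the budget is about 33.3 ms per frame and about 249 megapixels (roughly 373 MB) of camera input per second, which already rules out CPU-only processing on most embedded parts and points toward SoCs with GPU, DSP, or NPU acceleration.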

Performance metrics to consider for video stitching on an edge device:

- Latency (ms/frame) – Time taken to process and stitch each frame

- Throughput (FPS) – Number of stitched frames output per second

- Memory Usage – RAM and VRAM consumption per stitching operation

- CPU/GPU/NPU Utilization – Load on processing units

- Stitching Quality Metrics:

  - SSIM (Structural Similarity Index)

  - PSNR (Peak Signal-to-Noise Ratio)

  - Temporal Consistency Score

  - Artifact rate (ghosting, blurring, seams)
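PSNR and SSIM are simple to compute once a reference image is available. The sketch below works on flattened grayscale pixel lists: PSNR follows its standard definition, 10 log10(MAX² / MSE), while `global_ssim` is a simplification that treats the whole image as one window, whereas standard SSIM averages the same formula over local (often Gaussian-weighted) windows. The function names and pixel values are hypothetical.

```python
import math
from typing import Sequence


def psnr(reference: Sequence[float], test: Sequence[float], max_value: float = 255.0) -> float:
    """PSNR in dB: 10 * log10(MAX^2 / MSE); infinite for identical images."""
    if not reference or len(reference) != len(test):
        raise ValueError("images must be non-empty and the same size")
    mse = sum((r - t) ** 2 for r, t in zip(reference, test)) / len(reference)
    return math.inf if mse == 0 else 10.0 * math.log10(max_value ** 2 / mse)


def global_ssim(x: Sequence[float], y: Sequence[float], max_value: float = 255.0) -> float:
    """SSIM computed over the whole image as a single window (simplified)."""
    if not x or len(x) != len(y):
        raise ValueError("images must be non-empty and the same size")
    n = len(x)
    c1 = (0.01 * max_value) ** 2  # stabilizing constants from the SSIM definition
    c2 = (0.03 * max_value) ** 2
    mx, my = sum(x) / n, sum(y) / n
    vx = sum((a - mx) ** 2 for a in x) / n
    vy = sum((b - my) ** 2 for b in y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y)) / n
    return ((2 * mx * my + c1) * (2 * cov + c2)) / ((mx ** 2 + my ** 2 + c1) * (vx + vy + c2))


ref = [52, 55, 61, 66, 70, 61, 64, 73]
stitched = [54, 55, 60, 69, 70, 58, 64, 75]  # hypothetical stitched output
print(f"PSNR {psnr(ref, stitched):.2f} dB, SSIM {global_ssim(ref, stitched):.4f}")
```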

Methods to evaluate stitching performance

OpenCV

- Use cv::getTickCount() and cv::getTickFrequency() to measure processing time

- Evaluate image quality using cv::PSNR() and, for SSIM, cv::quality::QualitySSIM from the opencv_contrib quality module
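The cv::getTickCount()/cv::getTickFrequency() timing pattern has a direct Python analogue in the standard library's `time.perf_counter()`. The sketch below reports mean and worst-case per-frame latency plus throughput; `profile_stitcher` and `dummy_stitch` are hypothetical placeholders for whatever pipeline is under test, and the warm-up count is an assumption.

```python
import math
import time
from typing import Any, Callable, Dict, Sequence


def profile_stitcher(stitch: Callable[[Any], Any], frames: Sequence[Any],
                     warmup: int = 5) -> Dict[str, float]:
    """Report mean/worst per-frame latency (ms) and sequential throughput (FPS)."""
    if not frames:
        raise ValueError("need at least one frame to profile")
    for frame in frames[:warmup]:
        stitch(frame)  # warm-up: exclude one-time costs (allocations, caches, kernel compilation)
    latencies_ms = []
    start = time.perf_counter()
    for frame in frames:
        t0 = time.perf_counter()
        stitch(frame)
        latencies_ms.append((time.perf_counter() - t0) * 1000.0)
    elapsed_s = time.perf_counter() - start
    return {
        "mean_ms": sum(latencies_ms) / len(latencies_ms),
        "max_ms": max(latencies_ms),
        "fps": len(frames) / elapsed_s if elapsed_s > 0 else math.inf,
    }


def dummy_stitch(frame_set):
    # Hypothetical stand-in for a real stitcher: just sums the pixel values of each camera frame.
    return sum(sum(img) for img in frame_set)


frames = [[[i] * 10_000, [i + 1] * 10_000] for i in range(50)]
print(profile_stitcher(dummy_stitch, frames))
```

Because this loop runs frames one after another, FPS is roughly 1000 / mean latency; a pipelined implementation that overlaps capture, warping, and blending can reach a higher throughput than its per-frame latency alone suggests, so both numbers are worth reporting.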

GStreamer with Profiling Plugins

- Useful for pipeline-based stitching applications

- Tracing tools such as GstShark can help analyze latency and buffer handling

If AI models are used for video stitching, TensorRT can report inference time and memory usage.

Conclusion

Video stitching is an evolving technology with the potential to enhance safety and power immersive experiences across industries. While conventional methods provide a solid foundation, they struggle in dynamic environments. AI-driven approaches offer a powerful upgrade that improves accuracy and reduces artifacts.

To deploy these solutions effectively in embedded systems, it is crucial to balance performance, latency, and power efficiency. Choosing the right SoC and leveraging hardware acceleration frameworks like CUDA, OpenCL, or CVFlow can make a significant difference.

As applications and demand grow, the most promising path is hybrid pipelines that combine conventional techniques with AI models optimized for edge devices. This will unlock more reliable, efficient, and intelligent video stitching solutions.