Object localization is one of the image recognition tasks along with image classification and object detection. Though object detection and object localization are sometimes used interchangeably, they are not the same. Similarly, image classification and image localization are also two distinct concepts.

Different object recognition tasks

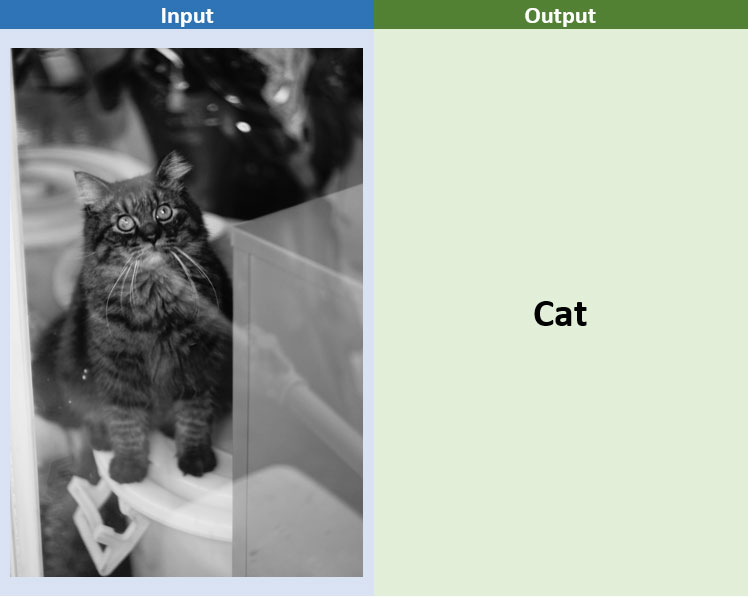

Image classification is a task in which an image is assigned to one class or to multiple classes, depending on the task.

Input: an image

Output: one or multiple class(es)

Image/object localization is a regression problem in which the output is a set of numbers (the x and y coordinates plus the height and width) describing a bounding box around the object of interest.

Input: an image

Output: “x”, “y”, height, and width numbers around an object of interest

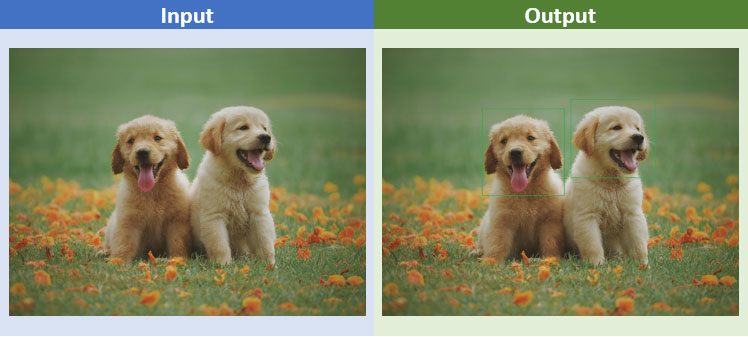

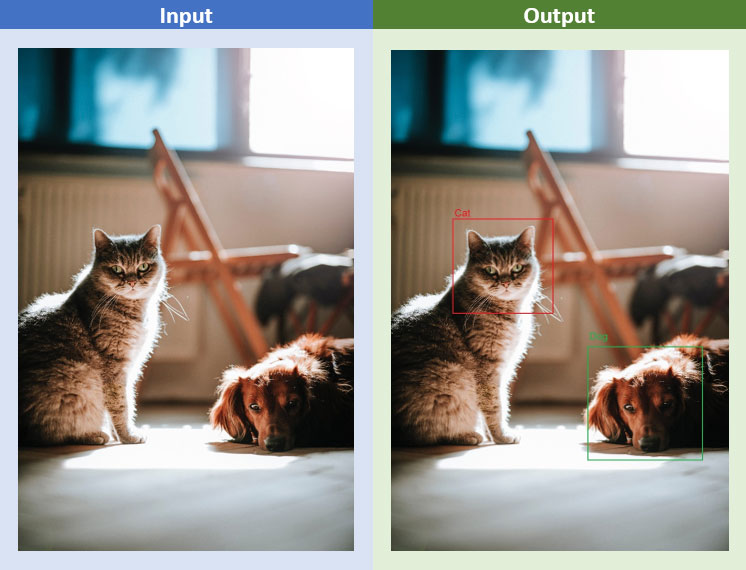

Object detection is a complex problem that combines the concepts of image localization and classification. Given an image, an object detection algorithm would return bounding boxes around all objects of interest and assign a class to them.

Input: an image

Output: “x”, “y”, height, and width numbers around all objects of interest, along with their class(es)



What is image localization, and how is it different?

Image localization is a spin-off of regular CNN vision algorithms. Those algorithms predict a class, which is a discrete label. In object localization, the algorithm instead predicts a set of four continuous numbers, namely the x coordinate, y coordinate, height, and width, which are used to draw a bounding box around an object of interest.

In CNN-based classifiers, the initial layers are convolutional layers, ranging from a couple of layers to more than 100 (e.g., ResNet-101); the depth depends on the application, the amount of data, and the computational resources available. Choosing the number of layers is itself a large area of research. The convolutional layers are followed by a pooling layer and then one or two fully connected layers. The last layer is the output layer, which gives the probability of each object being present in the image. For example, suppose an algorithm can recognize 100 different object classes. Then the last layer outputs an array of length 100, with each value between 0 and 1 denoting the probability that the corresponding object is present in the image.

In an image localization algorithm, everything is the same except the output layer. In classification algorithms, the final layer outputs probability values between 0 and 1. In contrast, localization algorithms output four real numbers, because localization is a regression problem. As discussed above, those four values are used to draw a box around the object.
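To make the difference concrete, here is a minimal NumPy sketch of the two kinds of output layer sitting on top of the same pooled CNN feature vector. It is an illustration under stated assumptions, not a trained model: the 512-dimensional feature vector, the random weights, and the names `classification_head` and `localization_head` are all hypothetical. The classification head uses a per-class sigmoid to match the "probability of each object being present" description; a softmax would be the usual choice when exactly one class applies.

```python
import numpy as np

rng = np.random.default_rng(seed=0)

# Hypothetical pooled feature vector from the CNN backbone; 512 is an
# arbitrary size chosen for illustration.
FEATURE_DIM = 512
NUM_CLASSES = 100
features = rng.standard_normal(FEATURE_DIM)

# Randomly initialised weights stand in for trained fully connected layers.
W_cls = rng.standard_normal((NUM_CLASSES, FEATURE_DIM)) * 0.01
b_cls = np.zeros(NUM_CLASSES)
W_box = rng.standard_normal((4, FEATURE_DIM)) * 0.01
b_box = np.zeros(4)


def classification_head(x: np.ndarray) -> np.ndarray:
    """Return one probability in [0, 1] per class via a sigmoid."""
    logits = W_cls @ x + b_cls
    return 1.0 / (1.0 + np.exp(-logits))


def localization_head(x: np.ndarray) -> np.ndarray:
    """Return four unbounded real numbers: (x, y, height, width)."""
    # No activation: the regression output is left as raw real numbers.
    return W_box @ x + b_box


class_probs = classification_head(features)
box = localization_head(features)
print(class_probs.shape)  # (100,)
print(box.shape)          # (4,)
```

The only structural difference between the two heads is the final layer: 100 sigmoid-squashed probabilities for classification versus 4 raw real numbers for localization.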

The loss function for object localization

The aim of a supervised machine learning algorithm is to make predictions as close as possible to the ground-truth values. To do so, it uses a loss function that measures the gap between its predictions and the ground truth, and it learns by adjusting its weights (parameters) to minimize that loss.

Because object localization is a regression problem, any regression loss function that applies to an N-dimensional array can be used, for example L1 distance, L2 distance, or Huber loss. Of these, L2 distance loss is widely used by industry practitioners and the research community.
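The sketch below computes the three losses mentioned above for a single predicted box, assuming boxes are stored as NumPy arrays in (x, y, height, width) order. The box values are made up for illustration, the function names are hypothetical, and the Huber threshold `delta=1.0` is an arbitrary choice.

```python
import numpy as np


def l1_loss(pred: np.ndarray, target: np.ndarray) -> float:
    """Sum of absolute differences (L1 distance)."""
    return float(np.sum(np.abs(pred - target)))


def l2_loss(pred: np.ndarray, target: np.ndarray) -> float:
    """Euclidean (L2) distance between the two vectors."""
    return float(np.sqrt(np.sum((pred - target) ** 2)))


def huber_loss(pred: np.ndarray, target: np.ndarray, delta: float = 1.0) -> float:
    """Quadratic for small errors, linear for large ones, summed over coordinates."""
    err = np.abs(pred - target)
    quadratic = 0.5 * err ** 2
    linear = delta * (err - 0.5 * delta)
    return float(np.sum(np.where(err <= delta, quadratic, linear)))


# Hypothetical boxes in (x, y, height, width) order.
ground_truth = np.array([50.0, 40.0, 100.0, 80.0])
prediction = np.array([53.0, 36.0, 100.0, 80.0])

print(l1_loss(prediction, ground_truth))     # 7.0
print(l2_loss(prediction, ground_truth))     # 5.0
print(huber_loss(prediction, ground_truth))  # 6.0
```

Training code frequently minimizes the squared L2 distance (mean squared error) rather than the L2 distance itself, because its gradient is simpler and stays well defined when the prediction matches the ground truth exactly.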

L2 distance

L2 distance is also known as Euclidean distance. It is the straight-line distance between two points in N-dimensional space and is calculated by applying the Pythagorean theorem to the Cartesian coordinates of the points. This approach works in any number of dimensions.

To understand L2 distance, consider two points, P and Q, in three-dimensional space, represented in Cartesian coordinates as (p1, p2, p3) and (q1, q2, q3). The distance between them is defined as:

$$\text{Distance}(P, Q) = \sqrt{(p_1 - q_1)^2 + (p_2 - q_2)^2 + (p_3 - q_3)^2}$$
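Because the formula generalizes to any number of dimensions, it fits in a few lines of Python. This is a minimal sketch: `euclidean_distance` is a hypothetical helper name, and the points reuse the P and Q notation from the text with made-up coordinates. Python 3.8+ also provides `math.dist`, which computes the same quantity.

```python
import math
from typing import Sequence


def euclidean_distance(p: Sequence[float], q: Sequence[float]) -> float:
    """L2 distance between two points with the same number of coordinates."""
    if len(p) != len(q):
        raise ValueError("points must have the same dimensionality")
    return math.sqrt(sum((pi - qi) ** 2 for pi, qi in zip(p, q)))


P = (1.0, 2.0, 3.0)
Q = (4.0, 6.0, 3.0)
print(euclidean_distance(P, Q))  # 5.0

# The standard library's math.dist computes the same value.
print(math.dist(P, Q))
```

The same function works unchanged on four-dimensional box vectors, for instance comparing a predicted (x, y, height, width) with the ground truth.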

The optimization algorithm tries to minimize the Euclidean distance between the ground-truth and predicted values: the lower the L2 distance between the prediction and the ground truth, the better the algorithm.
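To illustrate what minimizing the L2 distance means during training, here is a small gradient-descent sketch. It makes strong simplifying assumptions: the localization head is a single linear layer, the inputs are random 16-dimensional feature vectors, and the ground-truth boxes are generated by a hidden linear map so that a perfect fit exists. The loss is the mean squared L2 distance between predicted and ground-truth boxes; all sizes and the learning rate are arbitrary choices for illustration.

```python
import numpy as np

rng = np.random.default_rng(seed=0)

# Synthetic, hypothetical data: 200 feature vectors of size 16, each paired
# with a ground-truth box (x, y, height, width) produced by a hidden linear map.
N, D = 200, 16
X = rng.standard_normal((N, D))
true_W = rng.standard_normal((D, 4))
Y = X @ true_W  # ground-truth boxes

W = np.zeros((D, 4))  # parameters of a linear "localization head"
learning_rate = 0.1


def mean_squared_l2(weights: np.ndarray) -> float:
    """Mean over samples of the squared L2 distance between prediction and truth."""
    residual = X @ weights - Y
    return float(np.mean(np.sum(residual ** 2, axis=1)))


initial_loss = mean_squared_l2(W)
for _ in range(200):
    residual = X @ W - Y
    grad = 2.0 * X.T @ residual / N  # gradient of mean_squared_l2 w.r.t. W
    W -= learning_rate * grad
final_loss = mean_squared_l2(W)

print(f"loss before: {initial_loss:.3f}, after: {final_loss:.6f}")
```

The loss shrinks toward zero because each update moves the weights against the gradient of the loss; a real localization network follows the same principle, computing gradients for all of its layers through backpropagation.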

Evaluating image localization algorithms

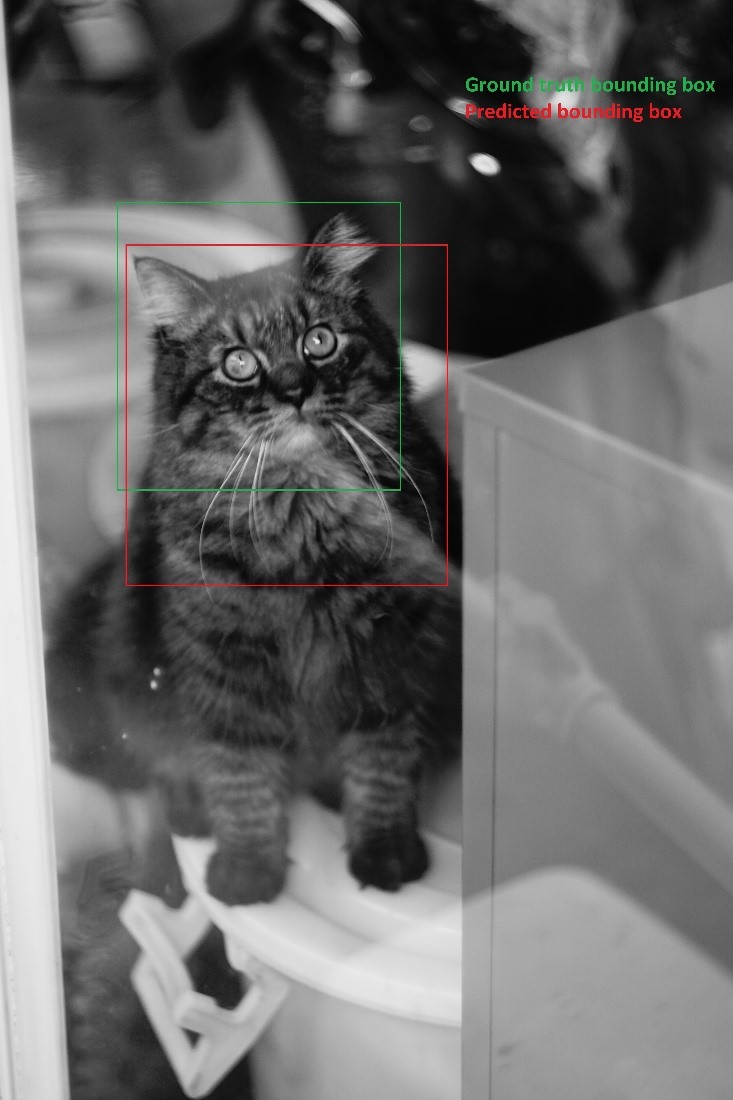

When we build machine learning models for the real world, we need to evaluate them to check how they perform on unseen data points. For that purpose, we rely on evaluation metrics that measure the effectiveness of different models on real-world data. For image localization, the most widely used evaluation metric is IoU, which stands for Intersection over Union.

To calculate IoU, we consider both the ground truth bounding box and the predicted bounding box.

For example, in the image above, the green box is the ground-truth bounding box and the red box is the predicted bounding box.

IoU is the ratio of the intersection to the union. The intersection is the area of overlap between the ground-truth bounding box and the predicted bounding box, while the union is the total area covered by both bounding boxes together (the sum of the two box areas minus the intersection, so the overlap is counted only once).

IoU always lies between 0 and 1. A value of 1 is ideal and means the predicted box exactly matches the ground-truth box, while a value of 0 means the two boxes do not overlap at all. The larger the overlap relative to the combined area, the closer IoU gets to 1.
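The IoU calculation can be sketched directly from these definitions. This sketch assumes axis-aligned boxes stored as (x, y, height, width), with (x, y) taken to be the top-left corner; that convention and the `Box` and `iou` names are illustrative assumptions, since datasets and libraries differ in how they encode boxes.

```python
from typing import NamedTuple


class Box(NamedTuple):
    """Axis-aligned box; (x, y) is assumed to be the top-left corner."""
    x: float
    y: float
    height: float
    width: float


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    # Overlap along each axis (zero if the boxes do not overlap on that axis).
    overlap_w = max(0.0, min(a.x + a.width, b.x + b.width) - max(a.x, b.x))
    overlap_h = max(0.0, min(a.y + a.height, b.y + b.height) - max(a.y, b.y))
    intersection = overlap_w * overlap_h
    # Union = area(a) + area(b) - intersection, so the overlap is counted once.
    union = a.width * a.height + b.width * b.height - intersection
    return intersection / union if union > 0 else 0.0


ground_truth = Box(x=0, y=0, height=2, width=2)
prediction = Box(x=1, y=1, height=2, width=2)
print(iou(ground_truth, prediction))       # 0.14285714285714285
print(iou(ground_truth, ground_truth))     # 1.0
print(iou(ground_truth, Box(5, 5, 1, 1)))  # 0.0
```

In the first example the boxes overlap in a 1 × 1 square, and the union is 4 + 4 − 1 = 7, so IoU is 1/7. Identical boxes give 1 and disjoint boxes give 0, matching the range described above.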

Having covered evaluation, here are a few widely used algorithms that industry practitioners prefer:

- R-CNN (region-based CNN)

- Fast and Faster R-CNN (improved versions of R-CNN)

- YOLO (You Only Look Once, a highly efficient object detection framework)

- SSD (Single Shot MultiBox Detector)

Technical Insights into Advanced Object Localization Trends for 2024:

Object localization in computer vision keeps evolving, and 2024 is poised to bring significant technical advances. Here are the trends expected to shape the industry:

Attention Mechanisms and Transformers: The integration of attention mechanisms and transformer architectures into object localization models will gain momentum. These mechanisms enhance the models’ ability to focus on relevant image regions, facilitating higher precision and adaptability in complex scenes.

Graph Neural Networks (GNNs): GNNs will play an instrumental role in multi-object localization tasks. By modeling relationships between objects and their context, GNNs enable more accurate and context-aware object localization in cluttered environments.

Continual Learning with Few-Shot Adaptation: Object localization models will increasingly incorporate continual learning techniques with a focus on few-shot adaptation. This enables the seamless addition of new object classes to pre-trained models, a vital requirement for dynamic environments.

Privacy-Preserving Object Detection: Growing privacy concerns will lead to the development of privacy-preserving object localization methods.

Edge-Optimized Object Localization: Edge computing will be central to real-time applications. Object localization models will be optimized for edge devices, making them efficient and responsive in IoT, drone, and robotics scenarios.

Multi-modal approaches that combine data from several sensor types will improve object localization accuracy, particularly in autonomous vehicles and multi-sensory robotics.

Spatiotemporal Localization: Advancements in spatiotemporal modeling will enable object localization models to track objects through time and predict their future positions.

Quantum Computing for Object Localization: The nascent field of quantum computing will continue to be explored for its potential to speed up complex optimization problems in object localization, which could open new frontiers in accuracy and speed.

Ethical AI Governance: Ethical and regulatory considerations will lead to the development of AI governance frameworks specific to object localization. Compliance with these frameworks will be a priority, ensuring responsible AI use in various industries.

Hyper-Personalized Object Localization: Customized object localization models tailored to specific domains or industries will gain traction. These models will adapt to unique environmental conditions, resulting in higher accuracy within their target domains.

From 2024 onward, these technical trends are expected to redefine the capabilities of object localization in computer vision, promising higher accuracy, adaptability, and ethical responsibility in an increasingly complex and data-sensitive world.

eInfochips, an Arrow company, has proven capabilities in the product engineering services domain, with competencies developed around emerging digital technologies such as AI/ML, Big Data, Mobility, and Edge Computing. Our team of experienced engineers has worked on a varied set of projects based on natural language processing, computer vision, anomaly detection, and machine learning, and is fully competent to address your business challenges. To learn more about our services, please contact our experts today.