Once a deep learning model is trained and ready to go into production, we may need to consider latency constraints, depending on the application. Real-time applications in particular, where latency requirements are measured in milliseconds, need a strategy to speed up inference.

Inference workloads are also increasingly being moved to the edge, where hardware is not as powerful as in the cloud. In this article, we will discuss a few strategies to speed up deep learning inference, and how to use the available tools and techniques on NVIDIA and Qualcomm Snapdragon edge platforms. Before discussing the strategies, let’s understand how inference works.

We save a trained model as a collection of weights, one for every connection in the neural network (a weight is a number that represents the strength of the relation between a neuron and one of its inputs). These weights are stored as floating-point numbers.

To predict a result for a new data point, we pass it through this collection of weights, which we call the model. Each connection multiplies a neuron value by the corresponding weight, and each neuron then computes its activation value from the sum of these products.

Thus, a new real-world data point goes through multiplication and activation operations layer by layer and neuron by neuron, and the network finally gives a prediction for the input.

These mathematical operations take time to process. Below are a few techniques that can reduce the time required for inference (latency) and increase the throughput of a trained neural network.
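To make the layer-by-layer picture concrete, here is a minimal sketch of inference for a small fully connected network in NumPy. The layer sizes, the random weights and the choice of ReLU as the activation function are assumptions made for illustration; a real model would load its trained weights from disk.

```python
import numpy as np

def relu(x):
    # Activation function (an assumption; the article does not name one).
    return np.maximum(x, 0.0)

def forward(x, layers):
    """Pass one input vector through a list of (weights, bias) pairs."""
    activation = x
    for weights, bias in layers:
        # Each neuron sums its inputs multiplied by the matching weights,
        # then applies the activation function.
        activation = relu(activation @ weights + bias)
    return activation

# Hypothetical 4 -> 8 -> 3 network with random float32 weights.
rng = np.random.default_rng(0)
layers = [
    (rng.standard_normal((4, 8)).astype(np.float32), np.zeros(8, dtype=np.float32)),
    (rng.standard_normal((8, 3)).astype(np.float32), np.zeros(3, dtype=np.float32)),
]
x = rng.standard_normal(4).astype(np.float32)
prediction = forward(x, layers)
print(prediction.shape)  # (3,)
```

Almost all of the work is in the matrix multiplications, which is why the techniques discussed in this article focus on making the weights fewer or smaller.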

1. Weight pruning

If we look inside a trained network, a few neurons never activate at all, which means they do not impact the output. Other neurons have a very low activation strength and hence a very small impact on the final output.

In weight pruning, we analyse the neurons, and if they do not have much impact, we can ‘prune’ them.

Another way is to decide on a weight threshold range: if the weights associated with a neuron fall in that range, we simply prune that neuron.

We might think that ‘pruning a neuron’ is a complex operation, but it’s not. To prune a neuron, we just need to set its incoming and outgoing weights to zero. With all of those weights at zero, the neuron has no impact on the final output. Pruning thus makes the model sparse, and a sparse model is easier to compress than a dense one.
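Both ideas above can be sketched in a few lines of NumPy. This is an illustration under assumptions, not a reference implementation: the function names, the threshold of 0.5 and the random weight matrix are all hypothetical.

```python
import numpy as np

def prune_by_magnitude(weights, threshold):
    """Zero every weight whose absolute value is below `threshold`."""
    mask = np.abs(weights) >= threshold
    return weights * mask, mask

def prune_neuron(w_in, w_out, neuron):
    """Remove one neuron by zeroing its incoming and outgoing weights."""
    w_in = w_in.copy()
    w_out = w_out.copy()
    w_in[:, neuron] = 0.0   # column: weights feeding into the neuron
    w_out[neuron, :] = 0.0  # row: weights leaving the neuron
    return w_in, w_out

# Hypothetical layer with random weights.
rng = np.random.default_rng(0)
w = rng.standard_normal((64, 32)).astype(np.float32)
pruned, mask = prune_by_magnitude(w, threshold=0.5)
sparsity = 1.0 - mask.mean()
print(f"fraction of weights removed: {sparsity:.2f}")
```

If the network is fine-tuned after pruning, the same `mask` would typically be re-applied after every update so that the pruned weights stay at zero.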

After pruning, it’s advisable to fine-tune the neural network a bit before using it in production, particularly when the threshold range is on the higher side because we care more about removing more weights.

Pruning slightly decreases accuracy but reduces inference latency. According to the Deep Compression research, pruning can reduce the number of connections by 9 to 13 times.

2. Quantization

Weights in neural networks are generally 32-bit floating-point numbers. Quantization is a technique where we convert weights from 32-bit floats to 16-bit floats or 8-bit integers, depending upon the hardware. It is advisable to use a 16-bit floating-point representation for GPU hardware and an 8-bit integer representation for CPU hardware.

The idea is to reduce the size of the weights representing connections, which also accelerates the multiplication and activation operations. Further fine-tuning is usually needed after quantization.

Quantization causes a small degradation in accuracy but reduces latency, processing load, power consumption, and model size.
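As a rough illustration, the sketch below quantizes a float32 weight matrix to int8 with a single scale factor for the whole tensor (symmetric quantization), and also casts it to float16. The symmetric per-tensor scheme is an assumption chosen for simplicity; production toolchains often use per-channel scales and calibration data.

```python
import numpy as np

def quantize_int8(weights):
    """Symmetric per-tensor quantization of float32 weights to int8.

    Assumes the tensor is not all zeros (the scale would be 0).
    """
    scale = np.abs(weights).max() / 127.0
    q = np.clip(np.round(weights / scale), -127, 127).astype(np.int8)
    return q, scale

def dequantize(q, scale):
    """Map int8 values back to approximate float32 weights."""
    return q.astype(np.float32) * scale

# Hypothetical layer with random weights.
rng = np.random.default_rng(0)
w = rng.standard_normal((256, 128)).astype(np.float32)

q, scale = quantize_int8(w)
w_fp16 = w.astype(np.float16)

# Storage in bytes for float32, float16 and int8.
print(w.nbytes, w_fp16.nbytes, q.nbytes)  # 131072 65536 32768

# Worst-case rounding error introduced by int8 quantization.
max_err = np.abs(w - dequantize(q, scale)).max()
print(f"max absolute error: {max_err:.4f}")
```

The int8 copy is a quarter of the original size, and the rounding error per weight is bounded by roughly half the scale factor, which is where the small accuracy loss comes from.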

3. Weight sharing

Weight sharing is a technique where we store fewer distinct weight values than there are connections, so that several connections between neurons share the same value. It’s a bit complex to understand, so let’s use an example.

Let’s say somewhere in a neural network, we have 2 fully connected layers.

Layer “k” has n neurons and layer “k+1” has m neurons. The corresponding weight matrix has n rows and m columns, so we have to store n*m values.

With weight sharing, we only need to store m shared values for this layer, plus a small index per connection that records which shared value it uses.

Here we can use a clustering algorithm like k-means to cluster the n*m values into m groups, so that each weight belongs to one of the m groups. We then find the centroid of each group and use that centroid value as the weight for all members of the group.

During backpropagation, the gradients are grouped according to the original weight groups and combined within each group (the Deep Compression paper sums them). The combined gradient of each group is then used to update that group’s weight centroid.
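The clustering and the grouped gradient update can be sketched as follows. This is a simplified illustration: the 1-D k-means loop, the evenly spaced initial centroids, the choice of 4 clusters and the function names are assumptions, and the gradients are summed per group as in the Deep Compression paper.

```python
import numpy as np

def share_weights(weights, n_clusters, n_iters=20):
    """Cluster weights with 1-D k-means; return (centroids, per-weight index)."""
    flat = weights.ravel()
    # Spread the initial centroids evenly over the weight range.
    centroids = np.linspace(flat.min(), flat.max(), n_clusters)
    for _ in range(n_iters):
        # Assign each weight to its nearest centroid.
        idx = np.abs(flat[:, None] - centroids[None, :]).argmin(axis=1)
        for c in range(n_clusters):
            members = flat[idx == c]
            if members.size:  # leave empty clusters where they are
                centroids[c] = members.mean()
    idx = np.abs(flat[:, None] - centroids[None, :]).argmin(axis=1)
    return centroids, idx.reshape(weights.shape)

def update_centroids(centroids, idx, grad, lr):
    """Sum the gradients of each group and apply them to that group's centroid."""
    grad_sum = np.bincount(idx.ravel(), weights=grad.ravel(),
                           minlength=centroids.size)
    return centroids - lr * grad_sum

# Hypothetical 16 x 8 layer with random weights.
rng = np.random.default_rng(0)
w = rng.standard_normal((16, 8))
centroids, idx = share_weights(w, n_clusters=4)
w_shared = centroids[idx]  # rebuild the layer from the shared values

# One fine-tuning step with a made-up gradient.
grad = rng.standard_normal(w.shape)
centroids = update_centroids(centroids, idx, grad, lr=0.01)
```

With 4 clusters, each connection needs only a 2-bit index into the table of shared values instead of a full 32-bit float, which is where the storage saving comes from.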

Inference on edge using NVIDIA Jetson platforms

NVIDIA’s Jetson family of embedded edge computers has broadly four products: Jetson TX1, Jetson TX2, Jetson Nano, and Jetson Xavier.

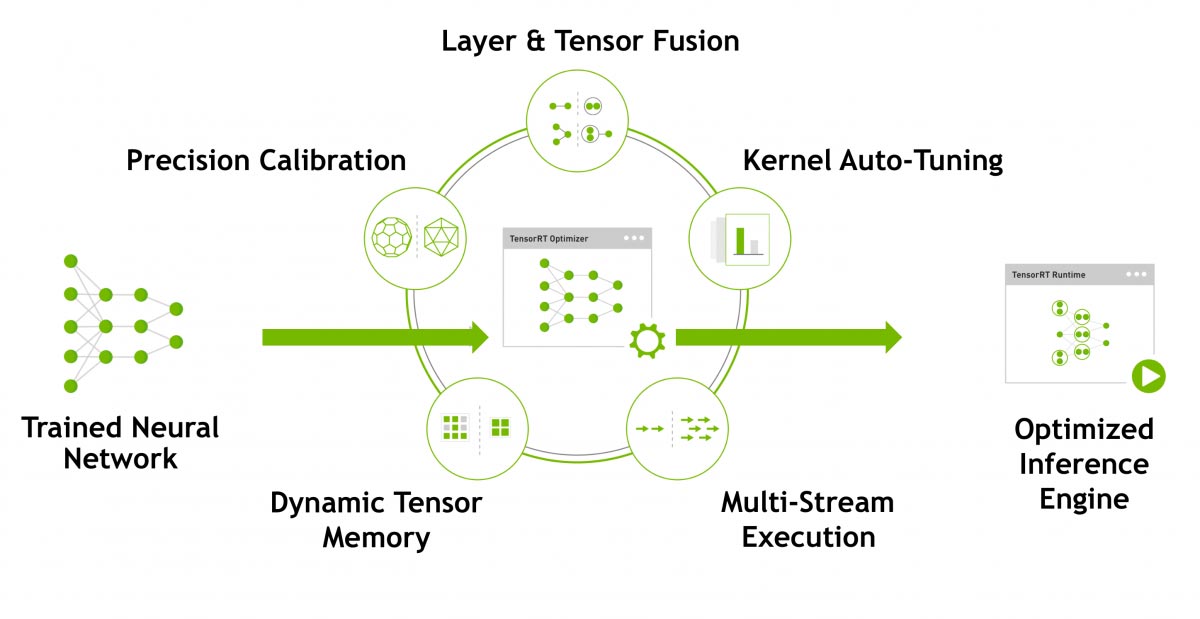

Most of these products support TensorRT. TensorRT is an inference accelerator and is part of the NVIDIA CUDA X AI Kit. It comes with a deep learning inference optimizer and a runtime that delivers low latency for inference operations.

TensorRT can take a trained neural network from any major deep learning framework, such as TensorFlow, Caffe2, MXNet, or PyTorch, and supports quantization to provide INT8 and FP16 optimizations for production deployments.

Here is a good blog about how to use TensorRT with a TensorFlow model to speed up inference.

Inference on edge using Snapdragon platform

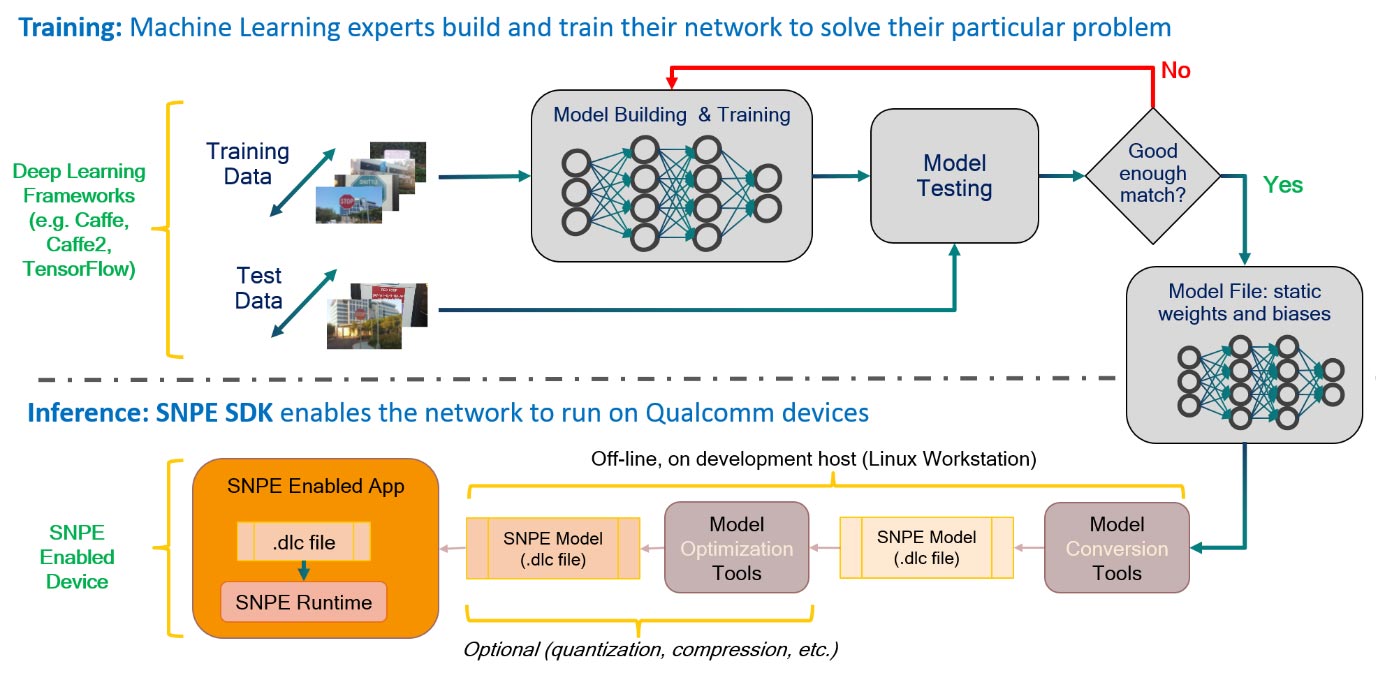

For Snapdragon edge platforms, Qualcomm provides a development kit called the Snapdragon Neural Processing Engine (SNPE) SDK. SNPE is a software-accelerated runtime for executing neural networks.

Currently, the following Snapdragon platforms support SNPE:

Qualcomm Snapdragon 855, Qualcomm Snapdragon 845, Qualcomm Snapdragon 835, Qualcomm Snapdragon 821, Qualcomm Snapdragon 820, Qualcomm Snapdragon 710, Qualcomm Snapdragon 660, Qualcomm Snapdragon 652, Qualcomm Snapdragon 636, Qualcomm Snapdragon 630, Qualcomm Snapdragon 625, Qualcomm Snapdragon 605, Qualcomm Snapdragon 450

SNPE can take a trained model from Caffe, Caffe2, ONNX, or TensorFlow and convert it into a deep learning container (.dlc) file.

This .dlc file can be used for inference directly, and we can also quantize it to INT8 precision, depending upon the final application.

In a production environment, the platform executes the .dlc model using the SNPE runtime.

In this article, we discussed three ways to speed up deep learning inference: pruning, quantization, and weight sharing. Apart from these strategies, we can use Huffman coding to further decrease the size of models. Inference latency on the edge is crucial to user experience, as end users won’t wait long to get results. We at eInfochips help our clients bring intelligence to the edge by combining our hardware, IoT, and AI expertise. Get in touch with us to know how we can fuel your next technological innovation.