An audio signal represents and describes the sound. Various audio features provide different aspects of the sound. We can use these audio features to train intelligent audio systems. We can extract a few features of the audio signals and then pass them on to the Machine Learning (ML) algorithms to identify patterns in the audio signals. It can help solve certain problems like classifying music files into various genres to offer context-aware music recommendations for personal use, and to build virtual composers to compose and create music. You can also use the extracted audio features to reduce noise and redundancy from the music files.

Audio Feature Extraction

The audio features are divided into three categories:

- High-Level Audio Features: These features include keys, chords, rhythm, melody, tempo, lyrics, genre, and mood.

- Mid-Level Audio Features: These features include pitch and beat level attributes such as note onsets (the start of a musical note), note fluctuation patterns, and MFCCs.

- Low-Level Audio Features: These features include amplitude envelope, energy, zero-crossing rate, and so on. These are generally the statistical features that get extracted from the audio.

Some audio features yield information on a 20-100 milliseconds time frame (short chunks of audio signals) and hence are called instantaneous features. Some yield information on a 2-20 sec time frame and are called segment-level features. These features give information about musical phrases or lyrics. The third category is called global features, and they provide information about the whole sound (algorithmic aggregate of features for the raw audio signals).

The audio feature extraction from time and frequency domains is required for manipulation of the signals to remove unwanted noise and balance the time-frequency ranges. The time domain-based feature extraction yields instantaneous information about the audio signals like the energy of the signal, zero-crossing rate, and amplitude envelope. The frequency domain-based audio feature extraction reveals the frequency content of the audio signals, band energy ratio, and so on. We use the time-frequency representation to calculate the rate of change in spectral bands of an audio signal.

Machine Learning and Audio Feature Extraction

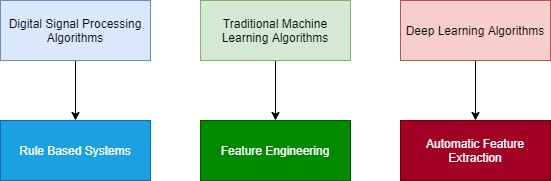

Once we have the audio features extracted, we can use either the traditional ML algorithms or Deep Learning (DL) algorithms to build an intelligent audio system.

The Traditional Machine Learning method takes into account most, if not all, time and frequency domain traits as model inputs. Features are manually selected based on their impact on performance. Commonly used inputs are – Amplitude Envelope, Zero-Crossing Rate, Root Mean Square Energy, Spectral Centroid, Band Energy Ratio and Spectral Bandwidth.

The Deep Learning approach leverages unstructured audio data such as spectrograms or MFCCs, identifying patterns independently. By the late 2010s, this became the preferred approach owing to its inherent automatic feature extraction. It is further enabled by the twin drivers of data abundance and enhanced compute power.

Intelligent audio systems are used for automatic music composition, creation, and categorization. Say, we intend to resolve an audio classification problem. For that, we can pick a few of the audio features extracted (using signal processing algorithms), the ones that we believe, can resolve the classification problem, and feed those features to the ML algorithms. For example, if we intend to find out/classify if a sound belongs to a gunshot or a motorbike engine, without any human intervention, we can use intelligent audio systems to do so. They are designed to classify/categorize sounds (sound classifier) and compose/create symphonies (virtual composer) with certain sounds, intelligently enough to pass the Turing test.

For this, we can use some audio features like Amplitude envelope, Zero-Crossing rate, and Spectral flux. We isolate them and extract them from the audio signals and then feed them into the traditional ML algorithms that we train to identify specific sounds. Once the ML algorithm is trained, it can be used to automatically categorize sounds or obtain inferences, whether the audio signals provided contain the sound of a gunshot or motorbike.

The difference between the traditional ML algorithms and the DL algorithms is that for the DL algorithms we provide unstructured data. Hence, for the DL, we pass the whole raw audio signals as a collection of features, or we may pass the spectrogram of the signal.

Conclusion

Recently, there has been a growing interest in categorizing music based on the emotion that a human can perceive on listening to that particular piece of music. Machine learning algorithms can help create a music playlist based on desired emotions. In conclusion, the word ‘intelligent’ represents observable and adaptive behavior based on changing environment. Intelligent Audio Systems too are getting into similar territory.

eInfochips provides system engineering and product engineering services in developing and optimizing algorithms for smart/intelligent audio and speech devices with signal processing, machine learning, and deep learning features. eInfochips also deals in embedded AI services providing concept to production services for all types of embedded products.

To know more about our embedded and AI-ML services please contact us today.