Audio processing is a subset of digital signal processing (DSP). Audio signals are captured in digital form, and certain properties of the human ear are combined with mathematical techniques to compress them, so that studio-quality, high-fidelity audio can be stored on small disks or streamed at lower bit rates.

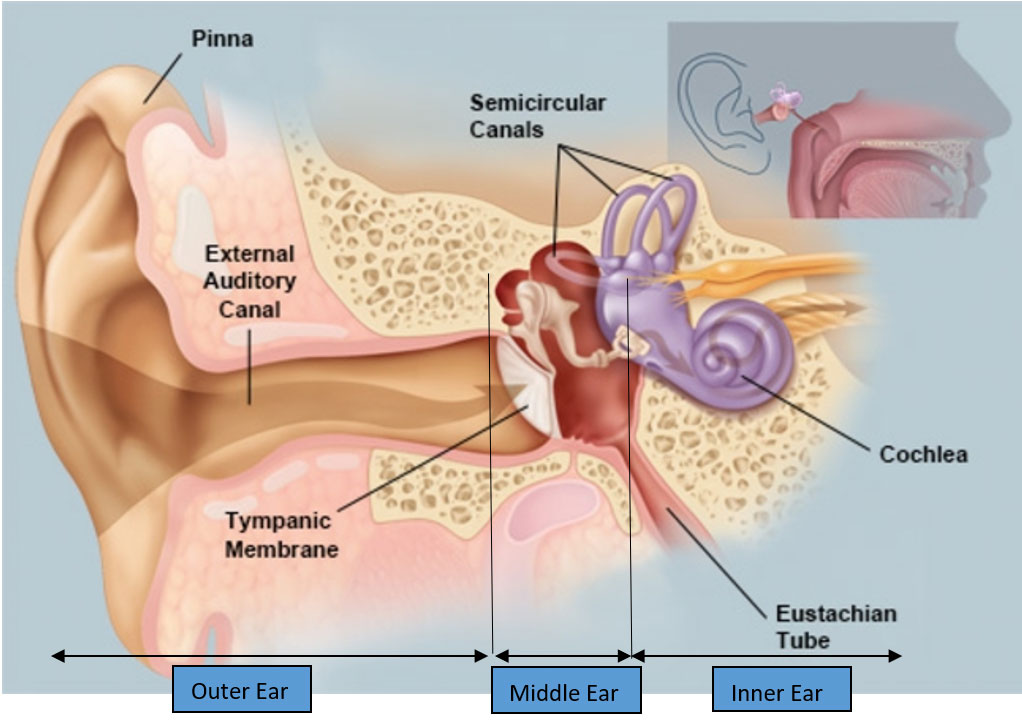

Anatomy of the Human Ear from the Audio Processing Perspective

Let us first understand how the ear acts as a spectrum analyzer. The ear is divided into three sections, namely: the outer ear, the middle ear, and the inner ear.

The outer ear terminates at the eardrum, where the middle ear begins. Sound waves entering the auditory canal of the outer ear are directed onto the eardrum (the tympanic membrane) and cause it to vibrate. The intensity of the vibration depends on the frequencies contained in the sound wave and on the power (loudness) of those frequencies. The middle ear transmits the vibrations to the cochlea, which is part of the inner ear. The inner ear can be modelled mathematically using the “Digital Filter Model” described later in this article.

Figure 1: The Anatomy of the ear from the Audio Processing Perspective

(Courtesy: https://www.webmd.com/cold-and-flu/ear-infection/picture-of-the-ear#1)

The curved portion of the cochlea in the picture above contains the basilar membrane. Different points along the basilar membrane, seen in the longitudinal section of an uncoiled cochlea, are sensitive to different audio frequencies, so the membrane acts as a spectrum analyzer. Once the cochlea is uncoiled, the basilar membrane is approximately 3.5 cm long.

Figure 2: The Anatomy of the Cochlea from the Audio Processing Perspective

(Courtesy: https://www.britannica.com/science/ear/Transmission-of-sound-within-the-inner-ear)

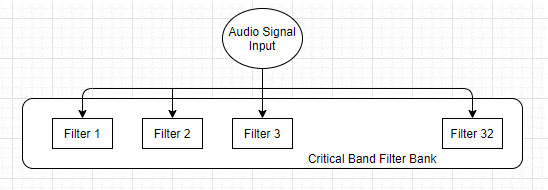

Digital Filter Model of the Basilar Membrane

We model each small section of the basilar membrane as a digital filter, so the whole membrane can be represented as a chain of digital filters.

Figure 3: Digital Filter Model of the Basilar Membrane

The middle ear portion in the above figure acts as a band-pass filter. The sound pressure on the basilar membrane is converted into electrical signals through the filter bands shown above. The output of the middle ear (the band-pass filter) is fed to a cascade of second-order filters; the model uses 128 of them to represent the basilar membrane. Passing a signal through all 128 filters one after another is too slow for real-time use, because the group delays of the individual filters add up, and the processing power needed to implement all 128 filters is also very large. In the human ear, a signal takes about 10 ms to travel from the middle ear to the apex of the cochlea, but a digital implementation of the full cascade cannot practically deliver its output in real time.

To make the above model work in real time, a “Parallel Filter Bank Model” was developed for digitally processing audio signals. Each of the 128 filters processes a certain frequency band. To achieve real-time capability, the signal is instead processed by a bank of 32 filters operating in parallel. These 32 filter bands are also called critical band filters.
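The parallel arrangement can be illustrated with a minimal sketch. It assumes second-order band-pass sections built with the widely used Audio EQ Cookbook (constant 0 dB peak gain) formulas, and it uses only three bands with illustrative center frequencies and Q rather than the 32 bands of the model. The function names are hypothetical; this is not the filter design of any particular codec.

```python
import math

def bandpass_biquad(center_hz, q, sample_rate):
    """Return normalized (b, a) coefficients of a second-order band-pass filter."""
    w0 = 2.0 * math.pi * center_hz / sample_rate
    alpha = math.sin(w0) / (2.0 * q)
    a0 = 1.0 + alpha
    b = (alpha / a0, 0.0, -alpha / a0)
    a = (1.0, -2.0 * math.cos(w0) / a0, (1.0 - alpha) / a0)
    return b, a

def apply_biquad(b, a, signal):
    """Run the direct form I difference equation over the input samples."""
    x1 = x2 = y1 = y2 = 0.0
    out = []
    for x0 in signal:
        y0 = b[0] * x0 + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2
        out.append(y0)
        x2, x1 = x1, x0
        y2, y1 = y1, y0
    return out

def parallel_filter_bank(signal, centers_hz, sample_rate, q=4.0):
    """Feed the same input to every band filter and return one output per band."""
    outputs = []
    for center_hz in centers_hz:
        b, a = bandpass_biquad(center_hz, q, sample_rate)
        outputs.append(apply_biquad(b, a, signal))
    return outputs

SAMPLE_RATE = 44100
CENTERS_HZ = [250.0, 1000.0, 4000.0]  # illustrative band centers (assumption)

# A 1 kHz test tone, 0.1 s long.
tone = [math.sin(2.0 * math.pi * 1000.0 * n / SAMPLE_RATE) for n in range(4410)]
band_outputs = parallel_filter_bank(tone, CENTERS_HZ, SAMPLE_RATE)
energies = [sum(y * y for y in band) for band in band_outputs]
loudest_band = energies.index(max(energies))  # index of the band that responds most
```

Because every band sees the input directly, the latency of the bank is set by a single second-order section rather than by the sum of the delays of a long cascade.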

Figure 4: Critical Filter Bands

The human auditory system is modelled as a filter bank, and the scale generally used for this model is the Bark scale, in which the center frequencies are spaced in an approximately logarithmic manner.
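As a rough illustration of the Bark scale, the sketch below converts a frequency in Hz to Bark using the Zwicker and Terhardt approximation. The choice of this particular approximation and the function name `hz_to_bark` are assumptions made for the example; other published approximations give slightly different values.

```python
import math

def hz_to_bark(freq_hz):
    """Approximate critical-band rate in Bark (Zwicker and Terhardt formula)."""
    return (13.0 * math.atan(0.00076 * freq_hz)
            + 3.5 * math.atan((freq_hz / 7500.0) ** 2))

# Equal steps in Bark correspond to ever wider steps in Hz at high frequencies.
for freq_hz in (100, 500, 1000, 4000, 10000, 20000):
    print(f"{freq_hz:>6} Hz -> {hz_to_bark(freq_hz):5.2f} Bark")
```

With this formula, 1 kHz falls at about 8.5 Bark, and the whole 20 Hz to 20 kHz range spans roughly 24 to 25 Bark.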

Human Auditory Masking

The audible frequency range, i.e. 20 Hz to 20 kHz, is generally divided into logarithmically organized critical bands (Bark bands). The idea of Bark bands came out of experiments on the listening capabilities of the human ear. These experiments used complex signals and analyzed how the ear responds to the masking of sounds, the perception of the phase of sounds, and the loudness of components in complex sounds.

Figure 5: Overlapping Bark Bands with respective Center Frequencies

(Courtesy: http://www-i6.informatik.rwth-aachen.de/web/Misc/Coding/365/li/material/notes/Chap4/Chap4.4/Chap4.4.html)

It was found that the signal components in a critical band (Bark band) can be masked by other components in the same band. This phenomenon is called “Intra-Band Masking”.

It was also found that the signal components in a critical band (Bark band) can be masked by signal components in nearby bands. This phenomenon is called “Inter-Band Masking”.

Therefore, if we consider the human auditory system as a frequency analysis device, the audio frequency range can be approximated by a filter bank consisting of overlapping band-pass filters.

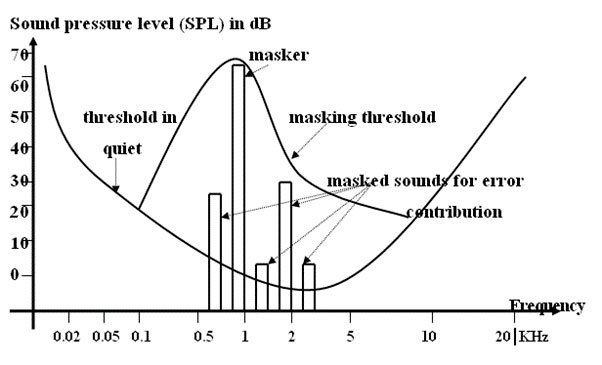

The masking curve has the same shape for all frequencies when plotted on the Bark scale. Any signal that falls under the curve is masked.

Masking can be described in two forms:

- Simultaneous Masking (also known as ‘Frequency Masking’): For example, suppose two people are having a conversation and a third person passes by shouting at the top of his voice. The quieter conversation is masked by the sound of the loud passerby. Similar effects can be observed in music, where different instruments can mask each other: the softer instruments are audible only when the louder instruments are played softly or are paused. As shown in the figure below, frequencies in the vicinity of the masking curve that have a lower sound pressure level than the ‘masker’ frequency are drowned out and are therefore inaudible.

Figure 6: Simultaneous Masking

(Courtesy: https://www.researchgate.net/figure/Figure-1-Threshold-in-quite-and-masking-threshold-acoustical-events-in-the-closed-curve_fig1_272655094)

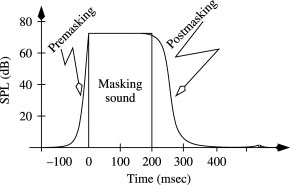

- Non-Simultaneous Masking (also known as ‘Temporal Masking’): This happens in the time domain and appears within a small interval of time around the masker. The acoustic events under the curved areas (Pre-Masking and Post-Masking) in the figure below are masked. The Post-Masking phenomenon depends on the duration of the masker.

Figure 7: Non-Simultaneous Masking (Temporal Masking)

(Courtesy: https://www.sciencedirect.com/topics/computer-science/masking-effect)

These masking effects make up the psychoacoustic model, which is taken into account when designing audio coding systems.

The MP3 encoding technique uses both temporal masking and frequency masking to reduce the output bit rate. The signal information that is masked (in the vicinity of the masker, in both frequency and temporal masking) is usually removed and only the ‘masker’ data is encoded, which reduces the number of output bits. This is the crux of the audio encoding technique.
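The idea of dropping masked components can be sketched for the frequency-masking case. The example below assumes a simple triangular masking curve on the Bark scale; the slopes (27 dB per Bark below the masker, 10 dB per Bark above it) and the 10 dB offset are illustrative values chosen for this sketch, not figures taken from the MP3 standard, and all function names are hypothetical.

```python
import math

def hz_to_bark(freq_hz):
    """Approximate critical-band rate in Bark (Zwicker and Terhardt formula)."""
    return (13.0 * math.atan(0.00076 * freq_hz)
            + 3.5 * math.atan((freq_hz / 7500.0) ** 2))

def masking_threshold_db(masker_hz, masker_db, probe_hz,
                         lower_slope=27.0, upper_slope=10.0, offset_db=10.0):
    """Level below which a probe tone is assumed inaudible next to the masker.

    The triangular shape, slopes (dB per Bark) and offset are assumptions.
    """
    distance = hz_to_bark(probe_hz) - hz_to_bark(masker_hz)
    slope = upper_slope if distance >= 0 else lower_slope
    return masker_db - offset_db - slope * abs(distance)

def audible_components(components):
    """Keep only the (frequency_hz, level_db) pairs not masked by another one."""
    kept = []
    for i, (freq_hz, level_db) in enumerate(components):
        masked = any(
            level_db < masking_threshold_db(other_hz, other_db, freq_hz)
            for j, (other_hz, other_db) in enumerate(components)
            if j != i
        )
        if not masked:
            kept.append((freq_hz, level_db))
    return kept

# A loud 1 kHz tone, a quiet neighbour at 1.1 kHz and a quiet tone far away.
spectrum = [(1000.0, 80.0), (1100.0, 40.0), (8000.0, 45.0)]
kept = audible_components(spectrum)
print(kept)  # the 1.1 kHz component is dropped, the other two are kept
```

The quiet 1.1 kHz tone sits under the masking curve of the loud 1 kHz tone and is discarded, while the 8 kHz tone is far enough away on the Bark scale to remain audible.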

Wide Band Audio Coding

The data rate of a high-fidelity stereo signal is about 1.4 Mbps for a 44.1 kHz sampling rate with 16 bits per sample and uniform quantization. For low bit-rate coding, the masking properties of the human ear are used (as explained above) to remove the inaudible signals. A low bit rate is achieved by first dividing the frequency range into a number of bands (as explained in the Bark band discussion above), each of which is then processed independently. Applying the psychoacoustic model (as explained in the Human Auditory Masking section above) brings the data rate down to about 2-4 bits per sample for a 16-bit input. Sound is usually processed independently for each channel.
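The figures above follow from simple arithmetic, worked through in the short sketch below. The helper name `pcm_bit_rate` is hypothetical, and the coded rates assume that the 2-4 bits per sample apply uniformly to both channels.

```python
def pcm_bit_rate(sample_rate_hz, bits_per_sample, channels):
    """Bit rate in bits per second of a constant-rate sample stream."""
    return sample_rate_hz * bits_per_sample * channels

uncompressed = pcm_bit_rate(44100, 16, 2)  # 1_411_200 bps, about 1.4 Mbps
coded_low = pcm_bit_rate(44100, 2, 2)      # 176_400 bps at 2 bits per sample
coded_high = pcm_bit_rate(44100, 4, 2)     # 352_800 bps at 4 bits per sample

print(f"uncompressed: {uncompressed / 1e6:.2f} Mbps")
print(f"coded: {coded_low / 1e3:.1f} to {coded_high / 1e3:.1f} kbps")
print(f"compression ratio: {uncompressed / coded_high:.0f}:1 "
      f"to {uncompressed / coded_low:.0f}:1")
```

In other words, going from 16 bits to 2-4 bits per sample corresponds to a compression ratio between 4:1 and 8:1.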

First, the masking threshold is determined. Then the redundant masked samples are discarded, and the remaining samples are coded using a deterministic bit allocation algorithm to get a reduced bit-rate output for high-fidelity audio signals. Because the redundant information is discarded at the encoder, it cannot be reconstructed at the decoder output, and hence this encoding-decoding technique is categorized as a lossy compression algorithm.
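One simple way to picture the bit allocation step is sketched below. It assumes the common rule of thumb that each quantizer bit buys roughly 6.02 dB of signal-to-noise ratio, so a band needs just enough bits to push its quantization noise under the masking threshold. The function name and the per-band levels are hypothetical, and real encoders use more elaborate iterative schemes.

```python
import math

DB_PER_BIT = 6.02  # approximate SNR gain per bit of uniform quantization

def allocate_bits(signal_db, mask_db, max_bits=16):
    """Return the number of bits per band needed to hide quantization noise.

    Bands whose signal level is at or below the masking threshold get 0 bits,
    which corresponds to discarding them.
    """
    bits = []
    for level, threshold in zip(signal_db, mask_db):
        signal_to_mask = level - threshold
        if signal_to_mask <= 0:
            bits.append(0)
        else:
            bits.append(min(max_bits, math.ceil(signal_to_mask / DB_PER_BIT)))
    return bits

# Hypothetical band levels and masking thresholds, in dB.
band_levels = [60.0, 30.0, 50.0]
band_masks = [40.0, 35.0, 47.0]
allocation = allocate_bits(band_levels, band_masks)
print(allocation)  # [4, 0, 1]
```

The second band lies under its masking threshold and receives no bits at all, which is where the irreversible loss of information occurs.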

The above technique is used in the MP3 and AAC audio codecs, which are the most frequently used codecs in embedded media processing.

A few audio codecs are described below:

- MP3: The most popular audio coding standard in the embedded media processing industry. It is officially known as MPEG-1 Audio Layer 3 and was released by the Moving Picture Experts Group (MPEG) in 1992 as a complement to their MPEG-1 video standard. Polyphase digital filters are used to separate the input audio signal into 32 sub-bands, and the Modified Discrete Cosine Transform (MDCT) is then used to convert each signal frame from the time domain to the frequency domain. Finally, the psychoacoustic model is applied to remove the redundant portions of the audio signal (as explained above), and the frequency coefficients are encoded to compress the audio signal.

- AAC: Advanced Audio Coding is a second-generation codec developed after MP3. It is similar to MP3 but achieves significantly better compression.

- WMA: Microsoft developed its own proprietary codec, Windows Media Audio (WMA), to compete with the MP3 standard in the paid music industry. For that reason, it also incorporates Digital Rights Management (DRM).

- Vorbis: A royalty-free lossy codec released by Xiph.org. This codec is increasingly used in embedded media processing. It supports multi-channel compression and also uses techniques to remove information that is redundant between nearby channels.

- FLAC: The Free Lossless Audio Codec (FLAC) is a royalty-free lossless codec released by Xiph.org. It does not use the psychoacoustic encoding techniques explained above and, because it is lossless, does not compress as well as the lossy codecs above.

- AC-3: This audio codec was developed by Dolby Laboratories to handle multichannel audio.
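The MDCT step mentioned for MP3 can be illustrated in isolation. The sketch below is a direct, unoptimized MDCT and inverse MDCT with a sine window and 50% overlapping frames; it assumes a frame size of 16 samples for readability and leaves out the polyphase filter bank, the psychoacoustic model and quantization. The function names are hypothetical, and real codecs use fast algorithms rather than this direct summation.

```python
import math

def sine_window(frame_len):
    """Sine window; satisfies w[n]^2 + w[n + frame_len/2]^2 = 1."""
    return [math.sin(math.pi * (n + 0.5) / frame_len) for n in range(frame_len)]

def mdct(frame):
    """Map 2N windowed time samples to N frequency coefficients."""
    half = len(frame) // 2
    return [
        sum(frame[n] * math.cos(math.pi / half * (n + 0.5 + half / 2) * (k + 0.5))
            for n in range(2 * half))
        for k in range(half)
    ]

def imdct(coeffs):
    """Map N coefficients back to 2N time samples that still contain aliasing."""
    half = len(coeffs)
    return [
        (2.0 / half) * sum(
            coeffs[k] * math.cos(math.pi / half * (n + 0.5 + half / 2) * (k + 0.5))
            for k in range(half))
        for n in range(2 * half)
    ]

def analysis_synthesis(signal, half):
    """Window, transform, inverse transform and overlap-add with hop size N."""
    window = sine_window(2 * half)
    output = [0.0] * len(signal)
    for start in range(0, len(signal) - 2 * half + 1, half):
        frame = [signal[start + n] * window[n] for n in range(2 * half)]
        rebuilt = imdct(mdct(frame))
        for n in range(2 * half):
            output[start + n] += rebuilt[n] * window[n]
    return output

HALF = 8  # N: coefficients per frame (illustrative size)
signal = [math.sin(0.3 * n) + 0.5 * math.cos(0.11 * n) for n in range(64)]
rebuilt_signal = analysis_synthesis(signal, HALF)

# Samples covered by two overlapping frames are reconstructed; the first and
# last N samples are covered by only one frame and are not.
max_error = max(abs(signal[n] - rebuilt_signal[n])
                for n in range(HALF, len(signal) - HALF))
print(f"max reconstruction error: {max_error:.2e}")
```

Each frame of 2N samples yields only N coefficients, yet the overlap-add of neighbouring frames cancels the aliasing, so the transform itself loses nothing; in a lossy codec the loss comes from how the coefficients are quantized afterwards.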

Conclusion

Audio data compression using psychoacoustic properties has been a success in the embedded media-processing domain. A variety of compression algorithms have been created that allow for efficient audio codec (audio compression algorithm) implementation. Current audio codecs still have some room for improvement in terms of quality and bit rate, but the psychoacoustic model will remain an essential part of codec implementation.

We at eInfochips provide software development services for DSP middleware, including porting, optimization, support, and maintenance solutions involving speech, audio, and multimedia codecs for various platforms.

eInfochips also provides services such as integration, testing, and validation of multimedia codecs. We cater to porting and optimization of deep learning algorithms, algorithms for 3D sound, and pre-processing and post-processing of audio and video blocks. Implementation and parallelization of custom algorithms on multi-core platforms are also our forte.


References:

https://www.webmd.com/cold-and-flu/ear-infection/picture-of-the-ear#1

https://www.britannica.com/science/ear/Transmission-of-sound-within-the-inner-ear

https://en.wikipedia.org/wiki/Bark_scale

https://www.sciencedirect.com/topics/computer-science/masking-effect

http://www-i6.informatik.rwth-aachen.de/web/Misc/Coding/365/li/material/notes/Chap4/Chap4.4/Chap4.4.html