NVMe

Non-Volatile Memory Express (NVMe) is a PCIe-based protocol for accessing SSDs (solid-state drives). The NVMe standard defines an Admin command set, used to set up and manage the device, and an NVM command set, used to perform I/O on the device.
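Both command sets share the same 64-byte submission queue entry format. Admin commands are posted to the Admin submission queue, while NVM commands go to I/O submission queues that are themselves created with Admin commands. The minimal Python sketch below packs such an entry following the layout in the NVMe base specification (opcode and command identifier in dword 0, namespace ID in dword 1, PRP entries at bytes 24 to 39, command dwords 10 to 15 at the end). The helper names are hypothetical, only a handful of opcodes are included, and nothing here talks to a device; actually submitting the bytes would require a driver.

```python
import struct

# A few Admin command set opcodes
ADMIN_CREATE_IO_SQ = 0x01
ADMIN_CREATE_IO_CQ = 0x05
ADMIN_IDENTIFY = 0x06

# A few NVM (I/O) command set opcodes
NVM_WRITE = 0x01
NVM_READ = 0x02


def build_sqe(opcode, cid, nsid=0, prp1=0, prp2=0, cdw10_15=(0,) * 6):
    """Pack one 64-byte submission queue entry, little-endian."""
    if len(cdw10_15) != 6:
        raise ValueError("need exactly six command dwords (CDW10..CDW15)")
    # CDW0: opcode in bits 7:0, FUSE/PSDP left at 0 (PRP transfer), command ID in bits 31:16
    cdw0 = (opcode & 0xFF) | ((cid & 0xFFFF) << 16)
    return struct.pack(
        "<IIQQQQ6I",
        cdw0,       # CDW0
        nsid,       # CDW1: namespace ID
        0,          # CDW2-3: reserved
        0,          # metadata pointer (unused in this sketch)
        prp1,       # PRP entry 1
        prp2,       # PRP entry 2
        *cdw10_15,  # CDW10-15: command specific
    )


def identify_controller(cid, prp1):
    # CNS = 1 in CDW10 selects the Identify Controller data structure
    return build_sqe(ADMIN_IDENTIFY, cid, nsid=0, prp1=prp1, cdw10_15=(1, 0, 0, 0, 0, 0))


def nvm_read(cid, nsid, slba, nlb, prp1, prp2=0):
    # CDW10/11 hold the 64-bit starting LBA; CDW12 bits 15:0 hold the 0-based block count
    return build_sqe(NVM_READ, cid, nsid, prp1, prp2,
                     (slba & 0xFFFFFFFF, slba >> 32, (nlb - 1) & 0xFFFF, 0, 0, 0))
```

For example, `nvm_read(cid=7, nsid=1, slba=0, nlb=8, prp1=buffer_addr)` (with `buffer_addr` a hypothetical DMA address) encodes a read of eight logical blocks; the block count is stored as 7 because the field is 0-based.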

NVMe is bound to grow (see "Five Factors Fueling NVMe Growth"). At present it is captive to servers and desktops, and eventually it will reach the network through NVMe over Fabrics.

Device Availability

Many vendors have released PCIe-based adapters that expose SSD storage through the NVMe protocol. A list of products conforming to NVMe is available in the UNH IOL Integrator list, and IOL also provides the conformance test suite. But what if no device is available for development or testing? In such cases qemu comes to the rescue: it emulates an NVMe device, and the emulation is included inbox in recent versions of qemu.

One just needs to pass the options that emulate the NVMe device when launching the guest OS, like this:

./qemu-system-x86_64 -m 2048 -hda /root/cow2_HD_16G -drive file=/root/nvme4M.img,if=none,id=D22 -device nvme,drive=D22,serial=1234 -boot c

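Here cow2_HD_16G is the disk image on which the guest OS is installed, and nvme4M.img is a raw image file that provides the storage capacity of the emulated NVMe device. The -drive option with if=none only defines the backing store, under the id D22; -device nvme,drive=D22 attaches that store to an emulated NVMe controller, and serial=1234 sets the controller's serial number.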
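The same launch can be scripted, which is handy when the guest is restarted often during driver or test development. This Python sketch assumes qemu-system-x86_64 is on the PATH and reuses the file names, drive id and serial number from the command above; the function names are hypothetical. It adds format=raw to the -drive option, which tells qemu the backing file is a raw image instead of letting it probe the format (qemu prints a warning when it has to guess raw).

```python
import os
import shlex
import subprocess


def create_raw_image(path, size_bytes=4 * 1024 * 1024):
    """Create a sparse raw backing file for the emulated NVMe device (4 MiB by default)."""
    if os.path.exists(path):
        raise FileExistsError(path)  # never clobber an existing image
    with open(path, "wb") as f:
        f.truncate(size_bytes)


def qemu_nvme_args(os_image, nvme_image, drive_id="D22", serial="1234", mem_mb=2048):
    """Argument list equivalent to the command line above, with format=raw made explicit."""
    return [
        "qemu-system-x86_64",
        "-m", str(mem_mb),
        "-hda", os_image,
        # if=none only defines a block backend; the nvme device attaches to it by id
        "-drive", f"file={nvme_image},if=none,format=raw,id={drive_id}",
        "-device", f"nvme,drive={drive_id},serial={serial}",
        "-boot", "c",
    ]


def launch(args, dry_run=True):
    """Print the shell-quoted command, or actually start the guest when dry_run is False."""
    if dry_run:
        print(shlex.join(args))
        return None
    return subprocess.run(args, check=True)
```

Usage: `create_raw_image("/root/nvme4M.img")` once, then `launch(qemu_nvme_args("/root/cow2_HD_16G", "/root/nvme4M.img"), dry_run=False)`. Keeping the arguments in a list avoids shell-quoting problems when paths contain spaces.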

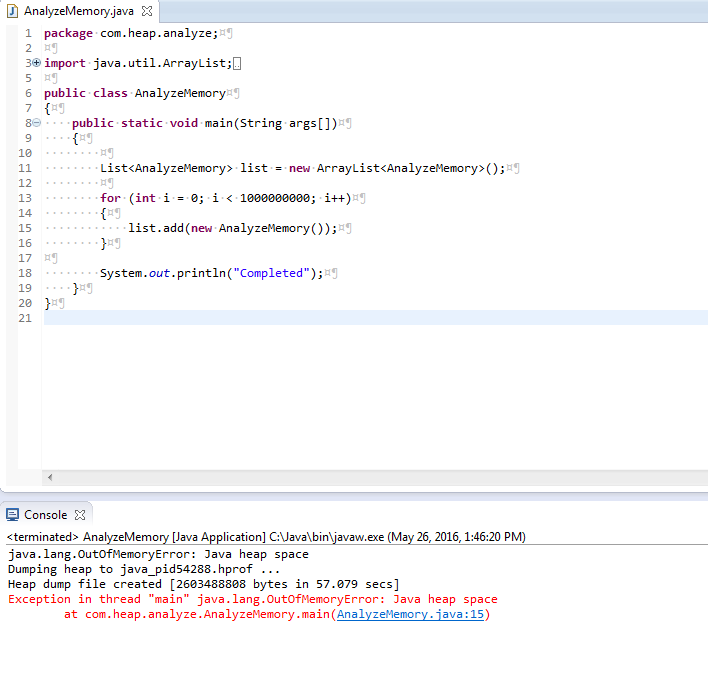

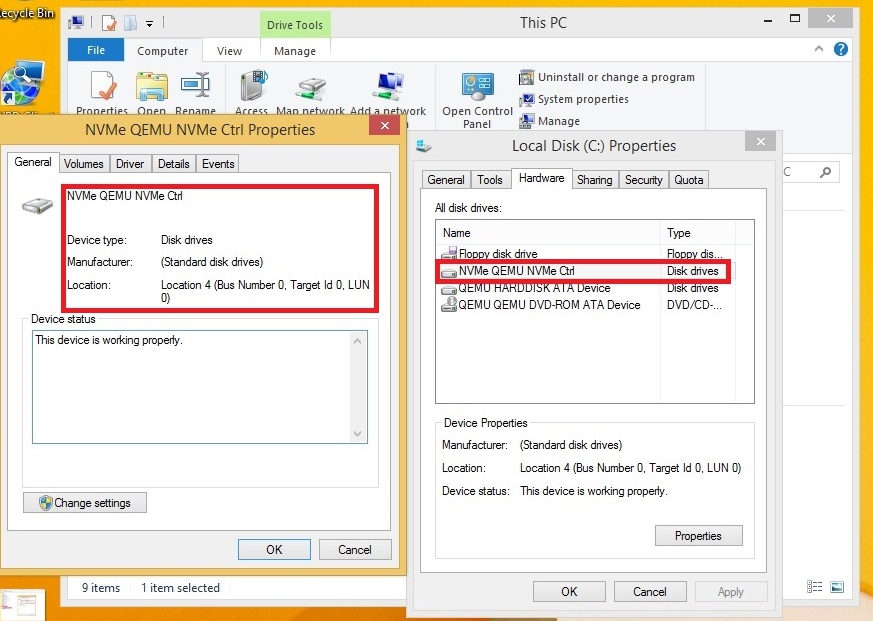

Following snapshots show the device details in Linux and Windows

Figure 1. NVMe Deivice emulated in qemu booted in Ubuntu 14.04

Figure 2. NVMe Deivice emulated in qemu booted in Windows 8.1
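On a Linux guest, the same controller details can also be read from sysfs. A minimal sketch, assuming a kernel whose nvme driver exposes model, serial and firmware_rev attributes under /sys/class/nvme/<controller>/; the function name is hypothetical. For a controller started with the qemu command above, the serial attribute should reflect the serial=1234 property.

```python
import os

SYSFS_NVME = "/sys/class/nvme"


def nvme_controllers(root=SYSFS_NVME):
    """Return {controller: {attribute: value}} for every NVMe controller found under root."""
    result = {}
    if not os.path.isdir(root):
        return result  # nvme driver not loaded, or not a Linux system
    for ctrl in sorted(os.listdir(root)):
        info = {}
        for attr in ("model", "serial", "firmware_rev"):
            try:
                with open(os.path.join(root, ctrl, attr)) as f:
                    info[attr] = f.read().strip()
            except OSError:
                info[attr] = None  # attribute not exposed by this kernel
        result[ctrl] = info
    return result
```

Calling `nvme_controllers()` inside the guest returns one entry per controller, keyed by names such as nvme0.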

Without the actual hardware, one can use the emulated device to develop device drivers and applications and to build test suites.

Test Automation in a flash

tnvme is a user-space application that provides a framework for testing the NVMe compliance of devices that implement the NVMe standard. tnvme is available at https://github.com/nvmecompliance/tnvme and requires the dnvme driver, available at https://github.com/nvmecompliance/dnvme. Note that dnvme is intended only for compliance testing; to use the device as ordinary storage, both Linux and Windows 8.1 provide inbox drivers.

tnvme can be run from the console, and a bash script is enough to run the tests on the local machine. To test remotely, however, the STAF framework can be used. STAF together with STAX provides a way to quickly put together a test suite that runs in parallel on several machines. STAF is available at http://staf.sourceforge.net/. STAF/STAX test jobs are written in XML and Python, which are used to execute the tests and parse the results.
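STAF/STAX itself is not reproduced here. As a plain-Python stand-in for the idea of fanning one test run out to several machines in parallel, the sketch below uses a thread pool and subprocess; it is a swapped-in technique, not the STAF API. ssh_tnvme is hypothetical: it assumes passwordless ssh to each host and tnvme on the remote PATH, and leaves the tnvme arguments to the caller.

```python
import subprocess
from concurrent.futures import ThreadPoolExecutor


def run_on_hosts(hosts, build_cmd, timeout=3600):
    """Run build_cmd(host) for every host in parallel; return {host: (returncode, stdout)}."""
    def run(host):
        try:
            proc = subprocess.run(build_cmd(host), capture_output=True, text=True, timeout=timeout)
            return host, (proc.returncode, proc.stdout)
        except subprocess.TimeoutExpired:
            return host, (None, "")  # None marks a run that timed out

    with ThreadPoolExecutor(max_workers=max(1, len(hosts))) as pool:
        return dict(pool.map(run, hosts))


def ssh_tnvme(host, tnvme_args=()):
    # Hypothetical remote invocation: passwordless ssh, tnvme on the remote PATH
    return ["ssh", host, "tnvme", *tnvme_args]
```

For example, `run_on_hosts(["lab-01", "lab-02"], ssh_tnvme)` (hypothetical host names) would collect each host's exit code and output. STAF/STAX provides the surrounding infrastructure, such as remote agents, logging and job monitoring, that this sketch lacks.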

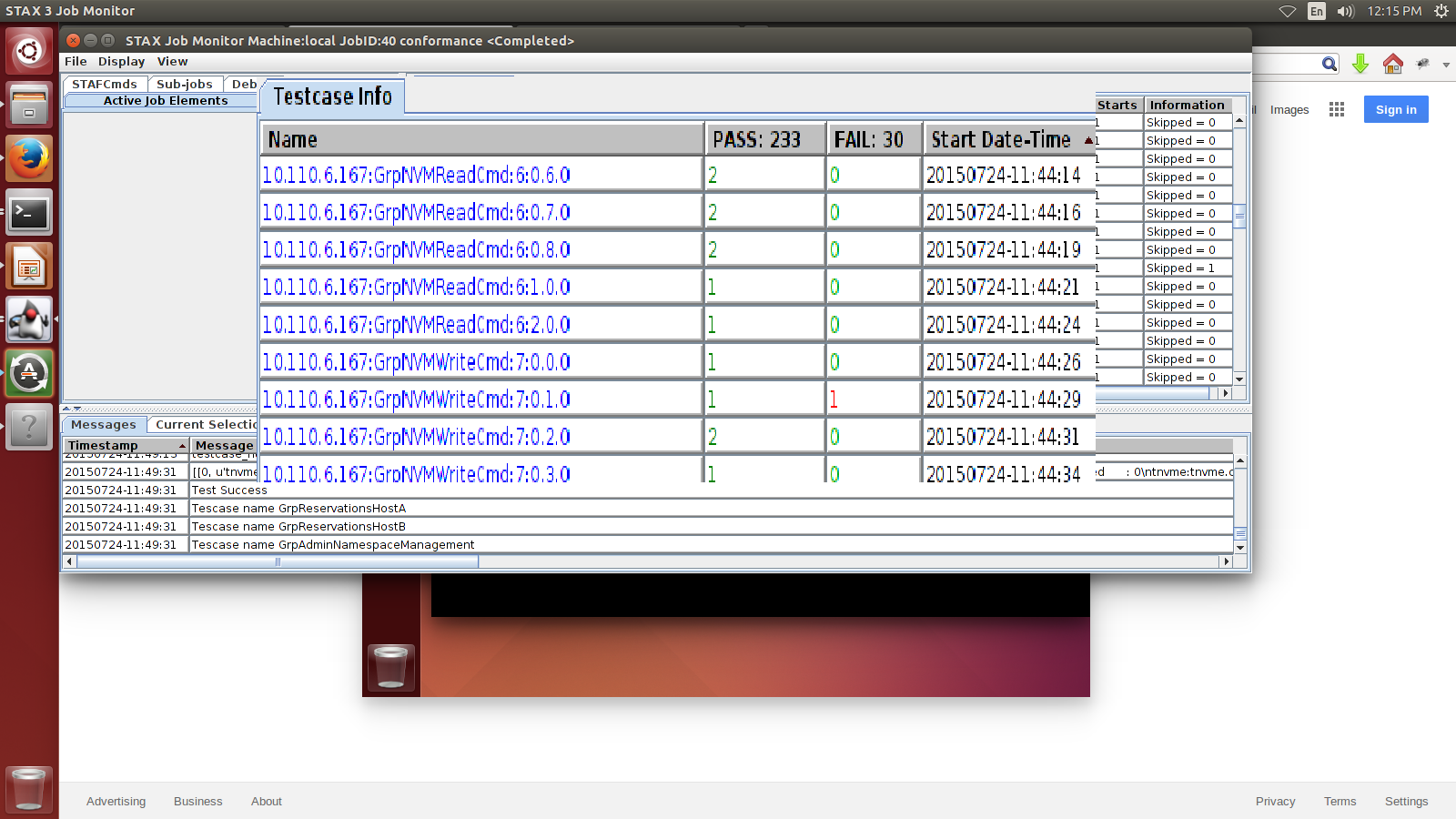

We have built the scripts to run the entire set of tnvme test cases, here is how it looks

Figure 3: Complete run of tnvme test cases

We also have seed scripts for performance testing and normal I/O testing, and these scripts can be extended with more tests in a flash!

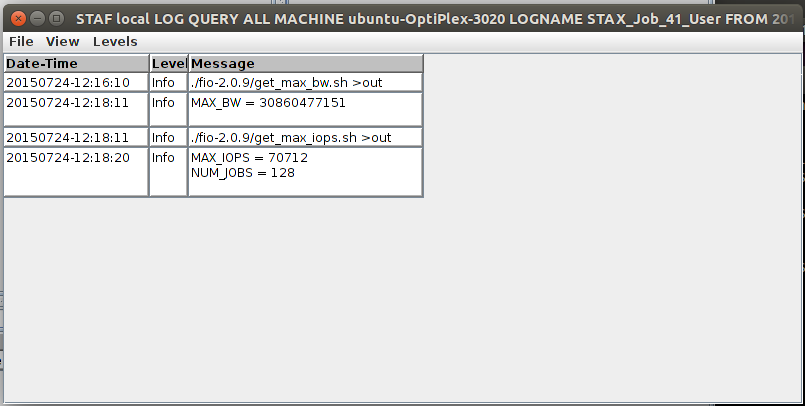

Figure 4: Output of performance tests
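As an illustration of a basic data-integrity check (not the authors' seed scripts, which are not shown here), this sketch writes an LBA-dependent pattern to a range of blocks, reads it back and reports the mismatching LBAs. The function names and the 4096-byte block size are assumptions. The target would typically be a namespace block device such as /dev/nvme0n1, and the test destroys data in the chosen range. It uses buffered I/O followed by fsync; a stricter test would bypass the page cache (for example with O_DIRECT and aligned buffers) so the read-back really comes from the device.

```python
import hashlib
import os


def pattern_block(lba, block_size):
    """Deterministic data derived from the LBA, so misdirected writes are caught as well."""
    seed = lba.to_bytes(8, "little")
    chunks = [hashlib.sha256(seed + i.to_bytes(4, "little")).digest()
              for i in range((block_size + 31) // 32)]
    return b"".join(chunks)[:block_size]


def write_verify(path, start_lba, num_blocks, block_size=4096):
    """Write the pattern to each block, flush it, read everything back; return bad LBAs."""
    lbas = range(start_lba, start_lba + num_blocks)
    with open(path, "r+b") as dev:
        for lba in lbas:
            dev.seek(lba * block_size)
            dev.write(pattern_block(lba, block_size))
        dev.flush()
        os.fsync(dev.fileno())
        bad = []
        for lba in lbas:
            dev.seek(lba * block_size)
            if dev.read(block_size) != pattern_block(lba, block_size):
                bad.append(lba)
    return bad
```

An empty list means every block in the range read back as written.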

The scripts are designed to vary different parameters to find the maximum performance. For example, with fio, the numjobs parameter (the number of parallel jobs issuing I/O, run as threads or processes) can be varied to see its impact on IOPS.
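A numjobs sweep of this kind can be sketched in Python around fio's JSON output. This is an illustrative example, not the authors' scripts: the job parameters (4k random reads, libaio, iodepth 32, 30-second time-based runs) and the function names are assumptions, and /dev/nvme0n1 stands for whatever namespace is under test. --group_reporting folds all numjobs workers into a single result entry, and the runner argument lets the parsing be exercised without a device.

```python
import json
import subprocess


def fio_cmd(device, numjobs, runtime_s=30, bs="4k", iodepth=32):
    """Build a fio random-read job; group_reporting merges all jobs into one result."""
    return [
        "fio", "--name=nvme-randread", f"--filename={device}",
        "--rw=randread", f"--bs={bs}", "--ioengine=libaio", f"--iodepth={iodepth}",
        "--direct=1", f"--numjobs={numjobs}", f"--runtime={runtime_s}", "--time_based",
        "--group_reporting", "--output-format=json",
    ]


def total_iops(fio_json_text):
    """Sum read and write IOPS over all job entries in fio's JSON output."""
    data = json.loads(fio_json_text)
    return sum(job["read"]["iops"] + job["write"]["iops"] for job in data["jobs"])


def run_fio(args):
    return subprocess.run(args, capture_output=True, text=True, check=True).stdout


def sweep_numjobs(device, counts=(1, 2, 4, 8), runner=run_fio):
    """Run fio once per numjobs value and return {numjobs: iops}."""
    return {n: total_iops(runner(fio_cmd(device, n))) for n in counts}
```

Random reads leave the data intact; switching --rw to a write workload would overwrite the device. Plotting the returned dictionary shows where adding jobs stops increasing IOPS.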

Beyond NVMe

Work is underway on NVMe over Fabrics, which will make NVMe devices that are currently captive to a server available over the network. This will require RDMA-capable NICs.

Development of Open Channel SSDs is also in full swing. Open Channel SSDs allow part of the SSD controller's functionality, such as the flash translation layer, to move to the host. The Open Channel SSD architecture also allows the physical flash to be exposed as a block device, an object store, or any other format.

Our Expertise

- Firmware development & validation

- Linux/Windows Device Driver & Application Development for Storage Domain

- Applications for Security Protocols (Trusted Computing Group)

- Test Automation