Introduction:

The objective of Structural Coverage Analysis (SCA) is to determine which code structures were exercised by the requirements-based test procedures. It helps demonstrate that the software has been thoroughly tested and that the code contains no unintended functionality. DO-178C, a key standard for avionics software development and certification, mandates structural coverage analysis as part of showing that the software meets its requirements, is robust, and operates reliably, which is critical for the safety of air travel. Where full coverage cannot be achieved, justifications should be provided for the gaps. By examining which parts of the code are not covered by tests, developers can identify areas that are untested and may contain bugs. Achieving satisfactory structural coverage is therefore essential for DO-178C compliance and for obtaining the necessary certification of avionics software.

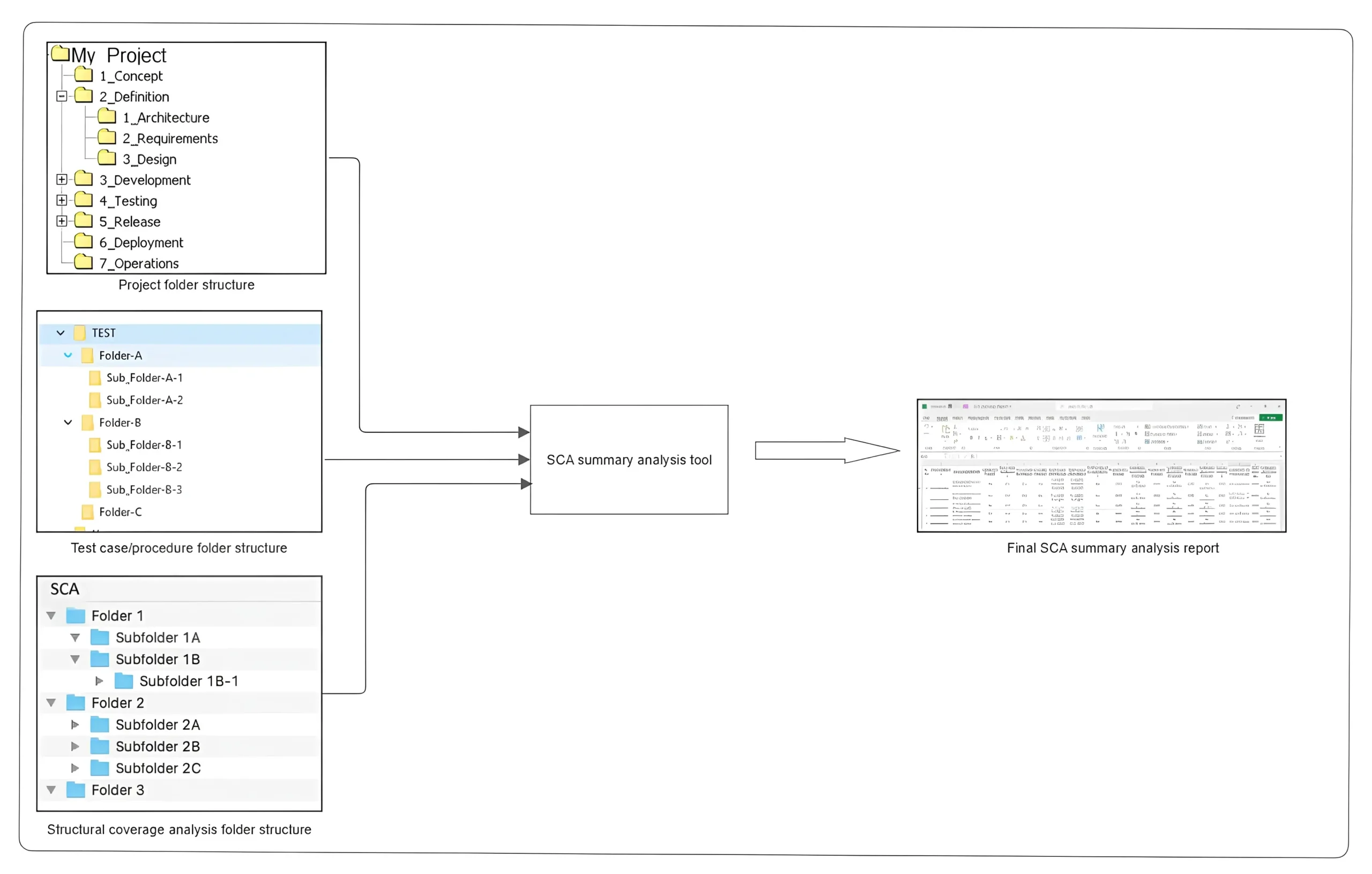

Verification Readiness Review (VRR) is a crucial process to ensure that avionics systems are ready for flight operations by evaluating their readiness against established requirements and standards such as DO-178C. One of the key activities in VRR is collecting and analyzing evidence such as test results, analysis reports, and review documentation to support the readiness assessment. During VRR, structural coverage analysis reports are also analyzed: the Subversion (SVN) revisions of the source code and tests are checked against the revisions recorded in each report. Reviewers must also ensure that each report was generated from the most recent source code and test revisions. Verifying every report manually is time-consuming, so our “SCA summary report tool” was designed to reduce the time, effort, and resources involved.

Its main purpose is to reduce the user’s manual effort in checking the Subversion revisions of artifacts against the revisions recorded in the results. The tool collects all the SCA reports, along with the Subversion revisions of the test case and source code artifacts. By comparing the latest revisions of the test cases and source code in the repository with those recorded in each SCA report, the tool reports the comparison results and determines whether an SCA report has been produced for each source file.

Implementation Details:

The tool works in three stages: gathering data from the source code repository, the test case repository, and the SCA reports; processing it (comparing and cross-checking); and reporting the results.
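The data-gathering stage needs the Subversion revision of each source and test file. One way to obtain it, sketched below under the assumption that the tool runs against an SVN working copy with the `svn` command-line client on the PATH, is to run `svn info --xml` and read the last-changed revision from the `<commit>` element. The function names are illustrative, and whether the SCA reports record the last-changed revision (rather than the working-copy revision) is an assumption to check against the actual report format.

```python
import subprocess
import xml.etree.ElementTree as ET


def parse_last_changed_revision(svn_info_xml: str) -> int:
    """Return the last-changed revision from `svn info --xml` output.

    Expected layout: <info><entry revision="WC rev"><commit revision="N">...</commit></entry></info>.
    <entry revision> is the working-copy revision; <commit revision> is the
    revision in which the file last changed, which this sketch assumes the
    SCA reports record.
    """
    root = ET.fromstring(svn_info_xml)
    commit = root.find("./entry/commit")
    if commit is None or "revision" not in commit.attrib:
        raise ValueError("no <commit revision=...> element in svn info output")
    return int(commit.attrib["revision"])


def svn_last_changed_revision(path: str) -> int:
    """Query SVN for one working-copy file (raises CalledProcessError if unversioned)."""
    result = subprocess.run(
        ["svn", "info", "--xml", path],
        capture_output=True, text=True, check=True,
    )
    return parse_last_changed_revision(result.stdout)
```

Separating the XML parsing from the `subprocess` call keeps the parsing testable without a repository.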

2.1 Inputs:

- Path to the project directory

- Path to the test case folder

- Path to the SCA report folder
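A minimal sketch of how the three inputs might be accepted and validated from the command line. The argument names and the `.svn` check are assumptions for illustration, not the tool's actual interface; the `.svn` check also assumes the project path is the root of a Subversion 1.7+ working copy, where the `.svn` metadata folder lives.

```python
import argparse
from pathlib import Path


def parse_args(argv=None) -> argparse.Namespace:
    # Hypothetical positional arguments mirroring the three inputs listed above.
    parser = argparse.ArgumentParser(description="Summarize SCA reports against SVN revisions")
    parser.add_argument("project_dir", type=Path, help="path to the project directory")
    parser.add_argument("test_dir", type=Path, help="path to the test case/procedure folder")
    parser.add_argument("sca_dir", type=Path, help="path to the SCA report folder")
    return parser.parse_args(argv)


def validate_inputs(args: argparse.Namespace) -> list:
    """Return a list of problems; an empty list means all three paths look usable."""
    problems = []
    for name in ("project_dir", "test_dir", "sca_dir"):
        path = getattr(args, name)
        if not path.is_dir():
            problems.append(f"{name}: {path} is not an existing directory")
    # Assumption: the project path is a working-copy root, so it holds a .svn folder.
    if args.project_dir.is_dir() and not (args.project_dir / ".svn").is_dir():
        problems.append(f"project_dir: {args.project_dir} is not an SVN working-copy root")
    return problems
```

Collecting every problem before exiting lets the user fix all three paths in one pass rather than one error at a time.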

2.2 Outputs:

A CSV file containing:

- Source code file names and their Subversion revisions

- Test case file names and their Subversion revisions

- Revision comparison results

- Coverage percentages for function entry, function exit, statement, decision, and MC/DC coverage

- Justifications for gaps in function entry, function exit, statement, decision, and MC/DC coverage

2.3 Functionality of the Tool:

- It takes three inputs: the project path, the test case/procedure folder path, and the SCA report folder path.

- First, it checks that the project path entered by the user is valid.

- It traverses the test case/procedure folder and captures each test case name and its Subversion revision.

- Next, it navigates the SCA report folder and checks whether an SCA report has been generated for each source file.

- It parses each SCA report and gathers the required information: the names and revisions of the tests used to generate the report, the source code name and revision, the function entry, function exit, statement, decision, and MC/DC coverage, and the SVPR (Software Verification Cases, Procedures and Results) notes (the justifications provided for any gaps).

- Once the information is parsed and collected, the tool writes it to the output .csv file.

- The output .csv compares the source code revision in the Subversion repository with the revision recorded in the SCA report, and a note indicating whether the report was generated from the latest source code is updated based on the result.

- Similarly, each test case file’s revision is compared, and the corresponding note is updated.

Figure 2.1: Block diagram of the SCA summary tool

Results:

Figure 3.1: Resulting summary of SCA reports (.csv)

Fields of the Generated CSV:

| Field name | Contents |
|---|---|
| SourceFileName | Source code file name |
| SourceCodeFilePath | Source code file path |
| IsSCAReportPresent? | Whether an SCA report is available for the source file |
| SourceCodeSVNRevision | Source code revision in the Subversion repository |
| SourceCodeRevInReport | Source code revision recorded in the SCA report |
| SourceRevMatches? | Whether the source code revision in the repository matches the revision in the report |
| TestProcedureSVNRevision | Test procedure revision in the Subversion repository |
| TestProcedureRevInReport | Test procedure revision recorded in the SCA report |
| TestProcedureRevisionMatches? | Whether the test procedure revision in the repository matches the revision in the report |
| FunctionEntryCoverage | Coverage percentage for function entries |
| Justification_FunctionEntryCoverage | Justification for any function entry coverage gaps |
| FunctionExitCoverage | Coverage percentage for function exits |
| Justification_FunctionExitCoverage | Justification for any function exit coverage gaps |
| StatementCoverage | Coverage percentage for statements |
| Justification_StatementCoverage | Justification for any statement coverage gaps |
| DecisionCoverage | Coverage percentage for decisions |
| Justification_DecisionCoverage | Justification for any decision coverage gaps |
| MCDCCoverage | Coverage percentage for MC/DC |
| Justification_MCDCCoverage | Justification for any MC/DC coverage gaps |
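The CSV output could be written with Python's standard `csv.DictWriter`, using the field names from the table above as column headers. This is a sketch: the `write_summary` name is hypothetical, and how several test procedures for one source file are packed into a single cell is left open.

```python
import csv
from typing import Iterable, Mapping, TextIO

# Column names from the CSV fields table.
FIELDS = [
    "SourceFileName", "SourceCodeFilePath", "IsSCAReportPresent?",
    "SourceCodeSVNRevision", "SourceCodeRevInReport", "SourceRevMatches?",
    "TestProcedureSVNRevision", "TestProcedureRevInReport", "TestProcedureRevisionMatches?",
    "FunctionEntryCoverage", "Justification_FunctionEntryCoverage",
    "FunctionExitCoverage", "Justification_FunctionExitCoverage",
    "StatementCoverage", "Justification_StatementCoverage",
    "DecisionCoverage", "Justification_DecisionCoverage",
    "MCDCCoverage", "Justification_MCDCCoverage",
]


def write_summary(rows: Iterable[Mapping[str, object]], out: TextIO) -> None:
    """Write a header plus one row per source file.

    Missing columns become empty cells (restval=""); an unknown column name
    raises ValueError (DictWriter's default extrasaction="raise"), which
    catches misspelled field names early.
    """
    writer = csv.DictWriter(out, fieldnames=FIELDS, restval="")
    writer.writeheader()
    for row in rows:
        writer.writerow(row)
```

When writing to disk, open the file with `newline=""` as the `csv` module documentation recommends, for example `with open("sca_summary.csv", "w", newline="") as f: write_summary(rows, f)`.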

Advantages:

- Reduces the manual effort of checking each SCA artifact against the corresponding source code and test procedure revisions.

- The tool collects data from the requested SCA reports in far less time than a manual review, and it further saves the user’s time by consolidating all data and results in one central location.

For example, consider collecting data from two SCA reports. The following table shows the approximate time required for each step.

| Description | Approximate time (manual) | Approximate time (tool) |
|---|---|---|
| Traversing folders to find the reports | ~2 mins | ~10 seconds |
| Comparing the Subversion revision of the code in the report with the repository | ~5 mins | ~20 seconds |
| Comparing the Subversion revisions of the test procedures in the report with the repository | ~5 mins (may increase with the number of test procedures) | ~30 seconds |
| Cross-verifying other details such as justifications and function entry, function exit, statement, and MC/DC coverage percentages | ~15 mins | ~1 min |
| Total turnaround time | ~27 mins | ~2 mins |

When the tool acquires the same data, the comparison findings are available immediately, along with information on whether the SCA coverage is 100% or the report needs to be regenerated. As a result, checking each artifact takes less than two minutes.

- With minor modifications, the tool can be adapted to other projects.
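The revision notes and the "100% or regenerate" verdict could be derived as sketched below. The note strings and the decision order (stale revisions first, then coverage gaps, then missing justifications) are assumptions for illustration rather than the tool's documented behavior.

```python
from typing import Dict, Optional


def revision_note(svn_rev: int, report_rev: Optional[int]) -> str:
    """Note for a revision-comparison column (wording is hypothetical)."""
    if report_rev is None:
        return "Revision not found in report"
    if report_rev == svn_rev:
        return "Matches latest revision"
    if report_rev < svn_rev:
        return "Report is older than repository; regenerate SCA"
    # A report revision newer than the working copy may mean the working copy is out of date.
    return "Report is newer than working copy; update and recheck"


def report_tests_current(svn_revs: Dict[str, int], report_revs: Dict[str, int]) -> bool:
    """True if every test procedure named in the report is at its latest SVN revision."""
    return all(svn_revs.get(name) == rev for name, rev in report_revs.items())


def sca_status(source_current: bool, tests_current: bool,
               coverage: Dict[str, Optional[float]], justification: Dict[str, str]) -> str:
    """Overall verdict for one source file (the decision order is an assumption)."""
    if not (source_current and tests_current):
        return "Regenerate SCA"
    # A missing metric (None) is treated as a gap rather than silently passing.
    gaps = [m for m, pct in coverage.items() if pct is None or pct < 100.0]
    if not gaps:
        return "100% coverage"
    unjustified = [m for m in gaps if not justification.get(m)]
    if unjustified:
        return "Gaps without justification: " + ", ".join(unjustified)
    return "All gaps justified"
```

Checking revisions before coverage reflects the idea that coverage figures from a stale report are not meaningful until the SCA is regenerated.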

Conclusion:

The primary goal of this tool is to consolidate data from the SCA reports in one location and reduce the manual effort of reviewing it. When there are a large number of SCA artifacts, reviewing each one separately takes considerable time. During the VRR process, where SCA data is collected to demonstrate code coverage, the tool gathers the data in one place and makes the comparison results readily available, so the data can be reviewed quickly.
