When it comes to identifying images, we humans can clearly recognize and distinguish different features of objects. This is because our brains have been trained unconsciously on the same kinds of images again and again, which has given us the ability to differentiate between things effortlessly.

We are barely conscious of how we interpret the real world. Encountering different entities in the visual world and telling them apart is no challenge for us; our subconscious mind carries out all the processing without any hassle.

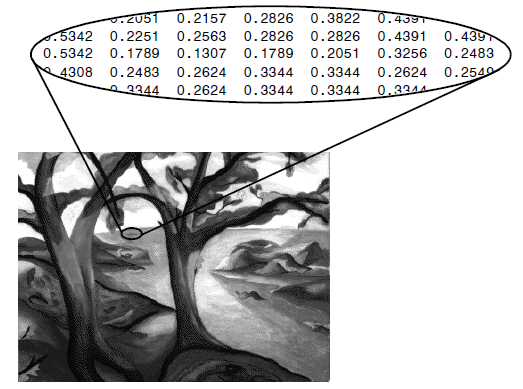

Unlike the human brain, a computer sees visuals as an array of numerical values and looks for patterns in the digital image, whether it is a still image, a video, a graphic, or even a live feed, to recognize and distinguish its key features.

A system interprets an image in a completely different way from humans. Computer vision uses image processing algorithms to analyze and understand visuals from a single image or a sequence of images. Examples of computer vision include identifying pedestrians and vehicles on the road and accurately categorizing and filtering millions of user-uploaded pictures.

Market Capabilities and Scope of Computer Vision

Over the years, the computer vision market has grown considerably. It was valued at USD 11.94 billion in 2018 and is expected to reach USD 17.38 billion by 2023, a compound annual growth rate (CAGR) of 7.80% between 2018 and 2023.

This growth is driven by the increasing demand for autonomous and semi-autonomous vehicles, drones (for military and domestic purposes), wearables, and smartphones. Moreover, the rising adoption of Industry 4.0 and automation in manufacturing industries has further stimulated the demand for computer vision.
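The market figures quoted above can be cross-checked with the compound-growth formula, end value = start value × (1 + CAGR)^years. The sketch below assumes, as the 2018 to 2023 range implies, that USD 11.94 billion is the 2018 base value and that growth compounds over five years; the function names are illustrative only.

```python
def project(start_value: float, cagr: float, years: int) -> float:
    """Compound start_value at the annual rate cagr for the given number of years."""
    return start_value * (1 + cagr) ** years


def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Annual growth rate that turns start_value into end_value over the given years."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures from the text, in USD billions; 2018 -> 2023 is five compounding years.
projected_2023 = project(11.94, 0.078, 5)
rate = implied_cagr(11.94, 17.38, 5)

print(f"Projected 2023 value: USD {projected_2023:.2f} billion")
print(f"Implied CAGR: {rate:.2%}")
```

Compounding USD 11.94 billion at 7.80% for five years gives about USD 17.38 billion, so the three figures are consistent with one another.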

Considering the growing potential of computer vision, many organizations are investing in image recognition to interpret and analyze data coming primarily from visual sources, for uses such as medical image analysis, object identification in autonomous cars, and face detection for security purposes.

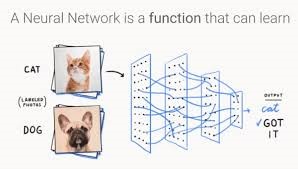

Image recognition is the ability of a system or software to identify objects, people, places, and actions in images. It uses machine vision technologies with artificial intelligence and trained algorithms to recognize images through a camera system.

Fuelled largely by recent advances in machine learning and increases in the computational power of machines, image recognition has taken the world by storm.

Image recognition has wide applications in industries such as automotive, e-commerce, retail, manufacturing, security, surveillance, healthcare, and farming.

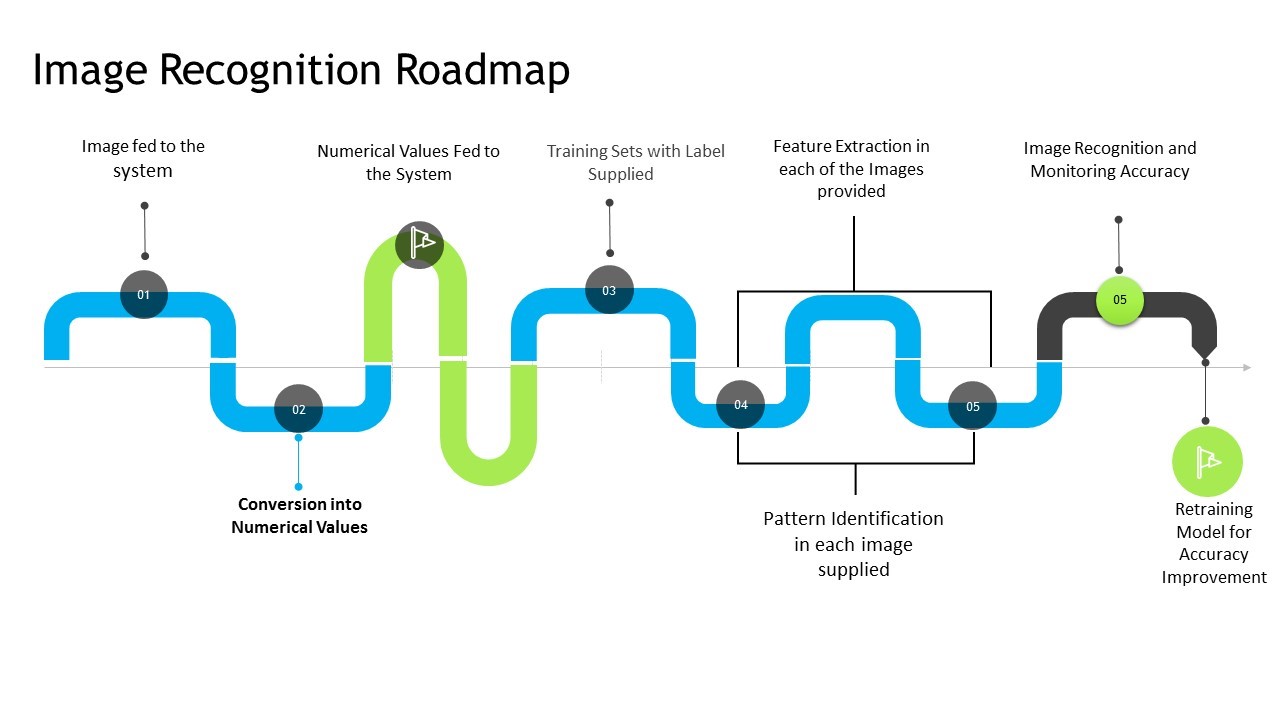

How Does Image Recognition Work?

A digital image is a matrix of numerical values, each representing the data associated with one pixel of the image. The intensities that make up a pixel (for example, the color channels of a color image) are averaged into a single value, and these values together form the image's matrix.
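To make this concrete, the sketch below represents a tiny 2×2 color image as nested Python lists of (R, G, B) values and averages each pixel's three channels into one intensity, producing the matrix a recognition system would receive. The pixel values are made up for illustration, and plain averaging is only one way to convert to a single channel; many libraries use a weighted sum instead.

```python
# A hypothetical 2x2 RGB image: each pixel is an (R, G, B) tuple with values 0-255.
rgb_image = [
    [(200, 100, 0), (30, 60, 90)],
    [(0, 0, 0), (255, 255, 255)],
]


def to_intensity_matrix(image):
    """Average each pixel's color channels into a single intensity value."""
    return [[sum(pixel) / len(pixel) for pixel in row] for row in image]


matrix = to_intensity_matrix(rgb_image)
for row in matrix:
    print(row)
```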

The information fed to a recognition system is the intensity and location of each pixel in the image. Using this information, the system learns to map out relationships or patterns in the subsequent images supplied to it as part of the learning process.

After training is complete, the system's performance is validated on test data.

To improve the system's accuracy in recognizing images, the neural network's weights are adjusted iteratively.
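The train-then-validate loop can be sketched with a single perceptron, the simplest neural network, standing in for the larger networks used in practice. The toy 3×3 images, labels, learning rate, and epoch limit are all hypothetical; the point is that the weights are adjusted whenever a training image is misclassified, and the trained model is then checked on held-out test images it never saw.

```python
# Hypothetical 3x3 binary images, flattened row by row into 9 pixel intensities.
VERTICAL = [0, 1, 0,
            0, 1, 0,
            0, 1, 0]    # vertical line, label 1
HORIZONTAL = [0, 0, 0,
              1, 1, 1,
              0, 0, 0]  # horizontal line, label 0

train_set = [(VERTICAL, 1), (HORIZONTAL, 0)]

# Held-out test images: noisy variants the model never saw during training.
test_set = [
    ([0, 1, 0, 0, 1, 0, 0, 0, 0], 1),  # vertical line, bottom pixel missing
    ([1, 1, 0, 0, 1, 0, 0, 1, 0], 1),  # vertical line plus a stray pixel
    ([0, 0, 0, 0, 1, 1, 0, 0, 0], 0),  # horizontal line, left pixel missing
    ([1, 0, 0, 1, 1, 1, 0, 0, 0], 0),  # horizontal line plus a stray pixel
]


def predict(weights, bias, pixels):
    """Return 1 when the weighted sum of pixel intensities is positive, else 0."""
    score = sum(w * x for w, x in zip(weights, pixels)) + bias
    return 1 if score > 0 else 0


def train(samples, epochs=10, learning_rate=1.0):
    """Perceptron rule: nudge the weights after every misclassified sample."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        errors = 0
        for pixels, label in samples:
            error = label - predict(weights, bias, pixels)
            if error != 0:
                errors += 1
                weights = [w + learning_rate * error * x for w, x in zip(weights, pixels)]
                bias += learning_rate * error
        if errors == 0:  # every training image is now classified correctly
            break
    return weights, bias


def accuracy(weights, bias, samples):
    """Fraction of samples whose predicted label matches the true label."""
    correct = sum(predict(weights, bias, p) == y for p, y in samples)
    return correct / len(samples)


weights, bias = train(train_set)
print("Training accuracy:", accuracy(weights, bias, train_set))
print("Test accuracy:", accuracy(weights, bias, test_set))
```

Real image classifiers replace the single perceptron with many layers trained by backpropagation, but the cycle of adjusting weights on training data and then validating on unseen test data is the same.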

Some of the algorithms used in image recognition tasks such as object recognition and face recognition are SIFT (Scale-Invariant Feature Transform), SURF (Speeded-Up Robust Features), PCA (Principal Component Analysis), and LDA (Linear Discriminant Analysis).

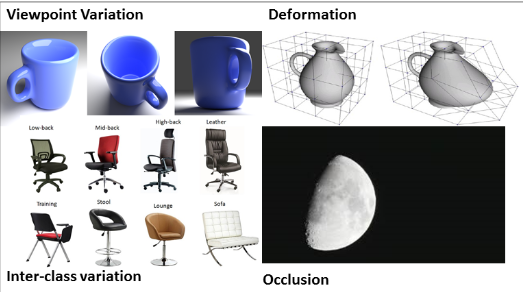

Challenges of Image Recognition

Viewpoint Variation: In the real world, objects within an image can be oriented in different directions, and when such images are fed to the system, it predicts inaccurate values. In short, the system fails to understand that changing the orientation of the image (left, right, bottom, top) does not change the object, which creates challenges in image recognition.

Scale Variation: Variations in size affect the classification of an object. The closer you are to an object, the bigger it looks, and vice versa.

Deformation: An object remains the same object even when it is deformed. A system that learns only from perfect images forms the perception that an object can take only a specific shape. In the real world, however, shapes change, so the system makes errors when it encounters a deformed image of an object.

Intra-class Variation: Objects within the same class can vary. They can differ in shape and size yet still belong to the same class. For example, buttons, chairs, bottles, and bags come in different sizes and appearances.

Occlusion: Some objects block the full view of other objects in an image, so incomplete information is fed to the system. The recognition algorithm therefore needs to handle all of these variations, and it needs to be trained on a wide range of data samples.
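One common way to give a model that wide range of samples is data augmentation: generating extra training images by transforming the ones you already have. The pure-Python sketch below (the function names and the 3×3 image are illustrative, not taken from any particular library) produces a mirrored copy and a rotated copy to simulate viewpoint variation, and a copy with a blanked-out patch to simulate occlusion.

```python
# A hypothetical 3x3 grayscale image (rows of pixel intensities).
image = [
    [1, 2, 3],
    [4, 5, 6],
    [7, 8, 9],
]


def flip_horizontal(img):
    """Mirror the image left to right (a change of viewpoint)."""
    return [list(reversed(row)) for row in img]


def rotate_90(img):
    """Rotate the image 90 degrees clockwise (a change of orientation)."""
    return [list(row) for row in zip(*img[::-1])]


def occlude(img, top, left, height, width, fill=0):
    """Blank out a rectangular patch, simulating an object hidden behind another."""
    out = [list(row) for row in img]  # copy so the original image is untouched
    for r in range(top, min(top + height, len(out))):
        for c in range(left, min(left + width, len(out[0]))):
            out[r][c] = fill
    return out


augmented = [
    image,
    flip_horizontal(image),
    rotate_90(image),
    occlude(image, top=0, left=0, height=2, width=2),
]
for variant in augmented:
    print(variant)
```

Each original image now yields several training samples with the same label, which exposes the model to orientations and partial views it might otherwise never see.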

To train neural network models, the training set should contain variety both within each class and across multiple classes. This variety helps ensure that the model predicts accurately when evaluated on test data. However, since most of the samples are in random order, checking whether there is enough data requires tedious manual work.
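Part of that manual check can be automated when each sample carries a label. The sketch below assumes a hypothetical labeling scheme of the form `class/variation` and a hypothetical threshold of three images per class; it counts how many images each class and each variation has and flags under-represented classes. It does not replace inspecting the images themselves.

```python
from collections import Counter

# Hypothetical training labels: one entry per image, "<class>/<variation>".
labels = [
    "chair/front", "chair/side", "chair/front", "chair/occluded",
    "bottle/front", "bottle/front",
    "bag/side",
]


def coverage_report(labels, min_per_class=3):
    """Count images per class and per variation, and flag classes with too few samples."""
    per_class = Counter(label.split("/")[0] for label in labels)
    per_variation = Counter(labels)
    too_few = sorted(cls for cls, n in per_class.items() if n < min_per_class)
    return per_class, per_variation, too_few


per_class, per_variation, too_few = coverage_report(labels)
print("Images per class:", dict(per_class))
print("Images per class/variation:", dict(per_variation))
print("Classes needing more data:", too_few)
```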

Limitations of Regular Neural Networks for Image Recognition

- The sheer volume of available data is difficult to process with limited hardware.

- The models are difficult to interpret, and this opacity rules out their application in a number of areas.

- Development takes a long time, and flexibility has to be traded off against development time. Libraries like Keras make development simple but offer less flexibility, while TensorFlow provides more control but is complicated and takes more time to develop with.

Role of Convolutional Neural Networks in Image Recognition

Convolutional Neural Networks (CNNs) play a crucial role in solving the problems stated above. Their basic principles are inspired by the human visual cortex.

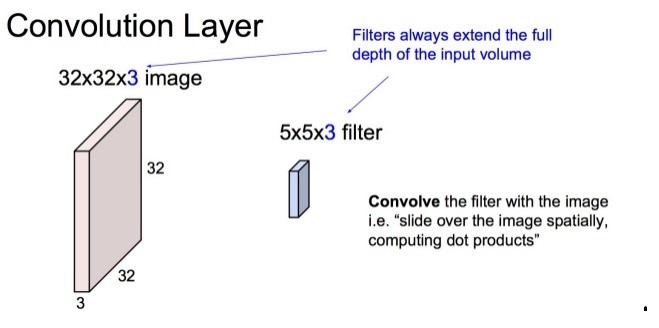

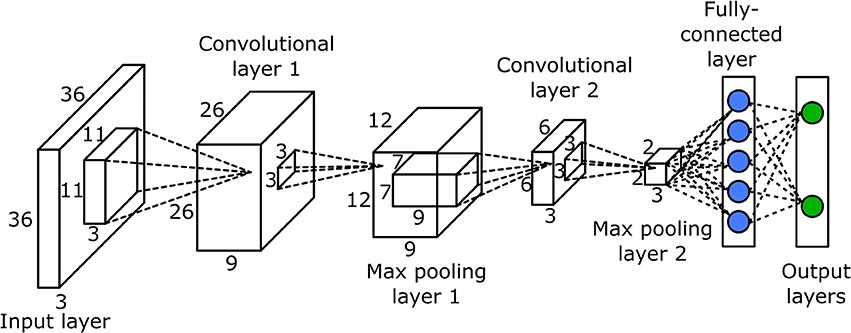

A CNN operates differently. Instead of feeding the complete numerical values of the image into the network at once, it divides the image into a number of small sections, each of which acts as an image in its own right. A small filter defines these sections as it moves across the image, and each set of neurons is connected to only one small section of the image.

Each section is then processed much as in a regular neural network: the system detects patterns in that section and saves the results in matrix form.

This process repeats until every section of the image has been processed. The result is a large matrix representing the different patterns the system has captured from the input image.
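The filtering step described above can be sketched as a 2D convolution in plain Python: a small filter slides across the image one pixel at a time (stride 1, no padding, both assumed here), and each position produces one weighted sum, so the output is a matrix of pattern responses. The 4×4 image and the edge-detecting filter are hypothetical and hand-picked; in a real CNN the filter weights are learned during training. Like most deep-learning libraries, this computes cross-correlation, that is, the filter is not flipped.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image (stride 1, no padding) and record one
    weighted sum per position, producing a feature map."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    feature_map = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            # Weighted sum of the small section of the image under the kernel.
            total = sum(
                kernel[i][j] * image[r + i][c + j]
                for i in range(kh)
                for j in range(kw)
            )
            row.append(total)
        feature_map.append(row)
    return feature_map


# Hypothetical 4x4 image: dark on the left, bright on the right.
image = [
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
]
# Hypothetical 2x2 filter that responds to dark-to-bright vertical edges.
edge_filter = [
    [-1, 1],
    [-1, 1],
]

for row in convolve2d(image, edge_filter):
    print(row)
```

Every row of the resulting 3×3 feature map is `[0, 18, 0]`: the filter responds strongly only where its window straddles the boundary between the dark and bright halves.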

This matrix is then downsampled (reduced in size) using a method known as max pooling, which extracts the maximum value from each sub-matrix and produces a much smaller matrix.
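A minimal sketch of max pooling, assuming non-overlapping 2×2 windows (a stride of 2, the common default), shows how each sub-matrix is reduced to its maximum, halving each dimension of the feature map. The feature-map values are hypothetical.

```python
def max_pool(matrix, size=2):
    """Downsample by keeping the maximum of each non-overlapping size x size block.
    Rows or columns left over at the edges are dropped."""
    pooled = []
    for r in range(0, len(matrix) - size + 1, size):
        row = []
        for c in range(0, len(matrix[0]) - size + 1, size):
            block = [matrix[r + i][c + j] for i in range(size) for j in range(size)]
            row.append(max(block))
        pooled.append(row)
    return pooled


# Hypothetical 4x4 feature map produced by a convolution step.
feature_map = [
    [1, 3, 2, 0],
    [4, 6, 1, 1],
    [0, 2, 5, 7],
    [1, 1, 8, 3],
]
print(max_pool(feature_map))  # → [[6, 2], [2, 8]]
```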

These values represent the patterns in the image. The resulting matrix is supplied to a neural network as input, and the network's output gives the probability of each class for the image.
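Turning the network's raw outputs into class probabilities is commonly done with the softmax function. The text does not name the function used, so treat softmax here as an assumption; the class names and scores below are hypothetical.

```python
import math


def softmax(scores):
    """Turn raw class scores into probabilities that sum to 1."""
    shifted = [s - max(scores) for s in scores]  # subtract the max for numerical stability
    exps = [math.exp(s) for s in shifted]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical raw scores from the final layer for three classes.
classes = ["cat", "dog", "car"]
scores = [2.0, 1.0, 0.1]
probabilities = softmax(scores)
for name, p in zip(classes, probabilities):
    print(f"{name}: {p:.3f}")
print("Predicted class:", classes[probabilities.index(max(probabilities))])
```

The highest score always maps to the highest probability, so the predicted class is unchanged; softmax simply rescales the scores into values that can be read as confidences.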

During training, the network learns features at different levels, described as low-level, mid-level, and high-level. Low-level features include color, lines, and contrast. Mid-level features capture edges and corners, whereas high-level features capture specific forms or sections that identify the class.

Thus, a CNN reduces the computational power required and can handle large images. It also copes better with variations in an image, which allows it to achieve higher accuracy than regular neural networks.
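The claim that CNNs reduce computational requirements can be illustrated with a rough parameter count. The sketch compares one fully connected layer, which connects every hidden unit to every pixel, with one convolutional layer, whose small filters are shared across the whole image. The image size, number of hidden units, and filter configuration are hypothetical choices, and the comparison covers parameter counts only, not the full computational cost.

```python
def dense_weights(height, width, channels, units):
    """Weights plus biases for a fully connected layer that sees every pixel."""
    return height * width * channels * units + units


def conv_weights(kernel_size, in_channels, filters):
    """Weights plus biases for a convolutional layer; independent of image size."""
    return kernel_size * kernel_size * in_channels * filters + filters


# Hypothetical sizes: a 224x224 RGB image, 1,000 hidden units vs. 64 3x3 filters.
dense = dense_weights(224, 224, 3, 1000)
conv = conv_weights(3, 3, 64)
print(f"Fully connected layer: {dense:,} parameters")
print(f"Convolutional layer:   {conv:,} parameters")
```

The fully connected layer needs over 150 million parameters, while the convolutional layer needs fewer than 2,000, and that number stays the same even if the image grows.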

Limitations of CNN

With complex architectures, it is possible to recognize objects and faces in an image with 95% accuracy, surpassing the human accuracy of 94%. Even with these outstanding capabilities, however, CNNs have certain limitations. Models with up to a billion parameters impose a high computational load, heavy memory usage, and high processing-power requirements, so their use must be properly justified.
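A back-of-the-envelope estimate makes the memory cost concrete: storing a model's weights alone takes (number of parameters) × (bytes per parameter). The sketch assumes 32-bit or 16-bit floating-point weights and ignores activations, gradients, and optimizer state, which add considerably more during training.

```python
# Bytes needed to store one parameter in each assumed numeric format.
BYTES_PER_VALUE = {"float32": 4, "float16": 2}


def parameter_memory_gb(num_parameters, dtype="float32"):
    """Memory needed just to store the parameters, in gigabytes (10**9 bytes)."""
    return num_parameters * BYTES_PER_VALUE[dtype] / 10**9


for dtype in ("float32", "float16"):
    gb = parameter_memory_gb(1_000_000_000, dtype)
    print(f"1 billion parameters in {dtype}: {gb:.1f} GB")
```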

Uses of Image Recognition

- Drones: Drones equipped with image recognition capabilities can provide vision-based automatic monitoring, inspection, and control of assets located in remote areas.

- Manufacturing: Image recognition can inspect production lines, regularly evaluate critical points within the premises, and monitor the quality of final products to reduce defects. Together with assessing the condition of workers, this can give manufacturing industries complete control over the different activities in their systems.

- Autonomous Vehicles: Autonomous vehicles with image recognition can identify activities on the road and take the necessary actions. Mini robots can help logistics industries locate objects and move them from one place to another, and can maintain a database of product movement history to prevent products from being misplaced or stolen.

- Military Surveillance: Detecting unusual activities in border areas, combined with automatic decision-making capabilities, can help prevent infiltration and save the lives of soldiers.

- Forest Activities: Unmanned Aerial Vehicles can monitor forests, predict changes that could result in forest fires, and prevent poaching. They can also provide complete monitoring of vast lands that humans cannot easily access.

eInfochips provides artificial intelligence and machine learning solutions to help organizations build highly customized solutions running on advanced machine learning algorithms. Learn more about our machine learning services.