Verification is the process of reviewing, inspecting, or testing a hardware design to confirm that it produces the desired output. The whole process revolves around one question: does the implementation match the specification? This question needs to be asked throughout the verification process.

Structure of the verification process

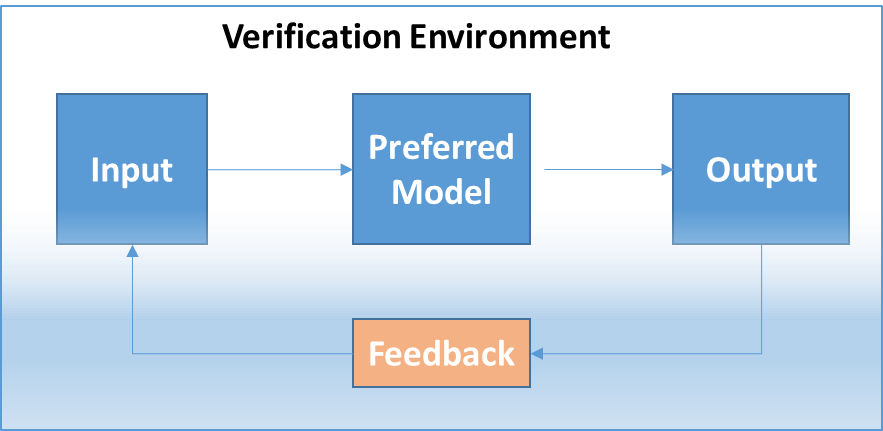

The figure above shows the very basic structure of any verification process.

Consider the case of RISC-V (pronounced ‘Risk-Five’). RISC-V is undeniably a strong contender with broad industry support; for instance, RISC-V events are attracting more exhibitors. Yet even after years in the market, adoption of RISC-V as an embedded core in standalone devices is still lagging.

No certification or standardization process has been established yet, and no plug-and-play solution is available at any level of the hardware or software stack. Nevertheless, a look at history shows that this scenario is quite similar to that of Linux. Eventually, RISC-V too will reach the mass market through open collaboration among industry players, large and small.

Unlike in the software world, open-source cores have traditionally been scarce for embedded projects. We have all seen the sweeping change that open source triggered in the software industry. With an identical goal, RISC-V aims to reduce the influence of patented architectures on processor design. RISC-V has considerable potential for future growth because it delivers a new level of software and hardware freedom at the architecture level; as with open-source software, one does not need to rely on a single supplier.

According to the RISC-V Foundation, “RISC-V is a free and open instruction set architecture (ISA) enabling a new era of processor innovation through open standard collaboration”. The name marks it as the fifth generation of RISC architectures developed at the University of California, Berkeley. By reducing the need to access external memory for many basic CPU tasks, the royalty-free and non-restrictive RISC-V can reduce energy usage and increase speed. RISC-V is a compact ISA, well suited to embedded applications that need high performance and security along with low power consumption.

The first-ever ‘Made in India’ computer chips, the SHAKTI processors, are based on the RISC-V ISA, target real-world applications, and can be freely adopted by industry (refer to the benefits of RISC-V to learn more). Xiaomi-backed Huami launched Huangshan No. 1, a RISC-V wearable chipset, last year. Other examples are Nvidia and Western Digital, which plan to use RISC-V in building their graphics cards and disk drives, respectively.

Test and verification together form the most prominent task in silicon development, and RISC-V platforms are no exception. Industry-leading verification tools and methods will have the upper hand in delivering RISC-V-based solutions within the stipulated time and cost. Formal verification helps the industry do so: it discovers bugs that often go unnoticed with traditional directed testing.

However, verifying RISC-V comes with a number of challenges. The most fundamental challenge in verifying any design is achieving sufficient controllability and observability. For controllability, a stimulus (which could be a structure or some behavior) must be generated to activate a specific line of code. For observability, additional stimulus must be generated to propagate the effect of that activated line to an output where it can be observed. To shorten this path, low-level assertions are embedded inside the design so that a problem is flagged where it occurs; assertions can also help in identifying good behavior. Finally, a checker detects whether the results are correct or incorrect.
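The interplay of activation, propagation, embedded assertions, and an output checker can be illustrated with a small Python sketch. Everything in it is hypothetical and chosen only for illustration: the toy 8-bit saturating adder, the `select_sum` control input, and the names `design`, `reference_model`, and `output_checker` do not come from any real RISC-V core or verification tool.

```python
# Toy model of controllability and observability (hypothetical design).

class AssertionFired(Exception):
    """Raised when the low-level assertion embedded in the design fails."""


def reference_model(a: int, b: int, select_sum: bool) -> int:
    """Spec: output the 8-bit saturating sum when selected, else pass `a` through."""
    return min(a + b, 255) if select_sum else a


def design(a: int, b: int, select_sum: bool, check_assertions: bool = False) -> int:
    """Implementation with a deliberate bug: the sum wraps instead of saturating."""
    internal_sum = (a + b) & 0xFF  # BUG: saturation logic is missing
    if check_assertions and internal_sum < a:
        # Embedded assertion on an internal signal: for 8-bit operands a
        # correctly saturated sum can never be smaller than operand `a`.
        raise AssertionFired(f"sum wrapped: {a} + {b} -> {internal_sum}")
    return internal_sum if select_sum else a


def output_checker(a: int, b: int, select_sum: bool) -> bool:
    """Checker at the design boundary: compare the output with the spec."""
    return design(a, b, select_sum) == reference_model(a, b, select_sum)


if __name__ == "__main__":
    # 1) Bug not activated: no overflow occurs, so the checker passes.
    print("no activation      :", output_checker(10, 20, True))
    # 2) Bug activated but not propagated: the wrong sum never reaches the output.
    print("activated, hidden  :", output_checker(200, 100, False))
    # 3) Bug activated and propagated: the checker sees the mismatch.
    print("activated, visible :", output_checker(200, 100, True))
    # The embedded assertion catches case 2 without any propagation.
    try:
        design(200, 100, False, check_assertions=True)
    except AssertionFired as err:
        print("assertion fired    :", err)
```

In this sketch the second stimulus activates the faulty line but the output checker still passes, because the wrong value is never selected; only the embedded assertion exposes it at the point where it occurs.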

Another major challenge associated with RISC-V is compatibility. Although a large number of parties already provide RISC-V cores, and this number may increase considerably in the foreseeable future, there is no single central team designing and verifying these cores. Core suppliers and SoC developers have to overcome many challenges to produce designs that are compatible with each other, ISA-compliant, and built on thoroughly verified cores.

The final challenge to focus on is safety, security, and trust vulnerabilities. Core (and SoC) designs must be scrutinized for any weaknesses that could be exploited by someone trying to take control of the system. The implications for applications such as autonomous vehicles and military/aerospace systems could be severe. Both core vendors and users must use a verification process that detects any such breaches of trust, security, and safety.

Bridging this gap is difficult, but not impossible. The solution lies in a uniform understanding of the issue. The challenges discussed above point to one solution: formal design verification. Formal verification is another verification method with the same goal of eliminating design flaws. It uses formal tools and SystemVerilog Assertions (SVA) to prove or disprove that the design behaves as intended with respect to a formal specification.

Firstly, formal verification not only helps establish that a core is fully compliant, but also identifies any unintended functionality beyond the ISA specification. Because the analysis covers the whole design rather than selected test cases, it can expose hardware trojans and helps protect the design from security and trust vulnerabilities.
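To make the idea of "unintended functionality beyond the specification" concrete, here is a minimal Python sketch. It is not a formal tool: it uses brute-force enumeration over a tiny, made-up 4-bit opcode space as a stand-in for what a formal tool establishes symbolically, and it only starts from the reset state. The three-instruction ISA, the `State` fields, and the hidden opcode are all assumptions invented for this example.

```python
from dataclasses import dataclass, replace

# Hypothetical 4-bit ISA: only these opcodes are defined by the "spec".
SPEC_OPCODES = {0x0: "nop", 0x1: "add", 0x2: "sub"}


@dataclass(frozen=True)
class State:
    acc: int = 0
    privileged: bool = False
    trapped: bool = False


def core_step(state: State, opcode: int, operand: int) -> State:
    """Toy core: executes one instruction and returns the next state."""
    if opcode == 0x0:
        return state
    if opcode == 0x1:
        return replace(state, acc=(state.acc + operand) & 0xFF)
    if opcode == 0x2:
        return replace(state, acc=(state.acc - operand) & 0xFF)
    if opcode == 0xD and operand == 0x5A:
        # Hidden behavior outside the spec (a stand-in for a hardware trojan).
        return replace(state, privileged=True)
    return replace(state, trapped=True)  # illegal instruction trap


def find_unspecified_behavior() -> list[tuple[int, int]]:
    """Check every opcode outside the spec: the only legal effect is a trap."""
    expected = State(trapped=True)
    findings = []
    for opcode in range(16):
        if opcode in SPEC_OPCODES:
            continue
        for operand in range(256):
            if core_step(State(), opcode, operand) != expected:
                findings.append((opcode, operand))
    return findings


if __name__ == "__main__":
    for opcode, operand in find_unspecified_behavior():
        print(f"unspecified behavior: opcode={opcode:#x} operand={operand:#x}")
```

A directed test suite written from the spec would never try the hidden opcode and operand combination; a check over the full input space finds it because nothing is left unexplored.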

Secondly, safety is another front where formal verification helps: it analyzes the core design and calculates the failure metrics required by safety standards. Formal tools prove not only that the design does what it is specified to do, but also that it does not do what it is not expected to do, which provides better controllability and observability.

Additionally, formal verification has practical advantages over simulation-based functional verification. In functional verification, one has to build a testbench with drivers, monitors, models, and checkers, so a lot of code must be written before the first test can even be run. In contrast, formal verification has no drivers, monitors, or test cases to write. The tool generates all stimulus and reports back if there is a failure; all you need to do is constrain the inputs appropriately and write checkers with the design specification in mind.
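The division of labor described here (the engineer supplies input constraints and a checker, the tool supplies all stimulus) can be sketched in a few lines of Python. This is only an illustration under stated assumptions: a breadth-first search over a tiny state space plays the role of the formal tool, and the FIFO occupancy counter, `DEPTH`, `constraint`, `checker`, and `explore` are hypothetical names invented for this example rather than part of any real tool or core.

```python
from collections import deque
from itertools import product

DEPTH = 4  # hypothetical FIFO depth


def next_count(count: int, push: bool, pop: bool) -> int:
    """Toy design under test: FIFO occupancy counter."""
    if push and not pop:
        return count + 1
    if pop and not push:
        return count - 1
    return count


def constraint(count: int, push: bool, pop: bool) -> bool:
    """Input constraint: the environment never pushes when full or pops when empty."""
    return not (push and count == DEPTH) and not (pop and count == 0)


def checker(count: int) -> bool:
    """Property from the spec: occupancy always stays within 0..DEPTH."""
    return 0 <= count <= DEPTH


def explore(use_constraint: bool):
    """Try every legal input in every reachable state.

    Returns None if the property holds everywhere, otherwise the shortest
    input sequence (a list of (push, pop) pairs) that violates it.
    """
    seen = {0}
    queue = deque([(0, [])])  # (state, inputs applied so far), reset state is 0
    while queue:
        count, trace = queue.popleft()
        for push, pop in product([False, True], repeat=2):
            if use_constraint and not constraint(count, push, pop):
                continue
            nxt = next_count(count, push, pop)
            step = trace + [(push, pop)]
            if not checker(nxt):
                return step  # counterexample found
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, step))
    return None


if __name__ == "__main__":
    print("with constraint   :", explore(use_constraint=True))
    print("without constraint:", explore(use_constraint=False))
```

No driver, monitor, or test case appears anywhere in the sketch. With the constraint in place the search exhausts the reachable states without a violation; without it, the search returns a counterexample trace, which mirrors how an under-constrained formal run reports failures that the real environment could never cause.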

Wrapping Up

To conclude, verifying RISC-V involves complex problems of controllability and observability, compatibility, and safety, security, and trust vulnerabilities. Formal verification, with the advantages discussed above, is a major improvement. If it is implemented with care by the right set of people, the issues at hand can be mitigated to a large extent.

Formal verification carried out by a third party is arguably the most useful approach for the growth of the RISC-V ecosystem. It gives multiple core vendors room to converge on a common solution, and with this RISC-V could become a tipping point for the semiconductor industry.

To get started with RISC-V verification, get in touch with us.