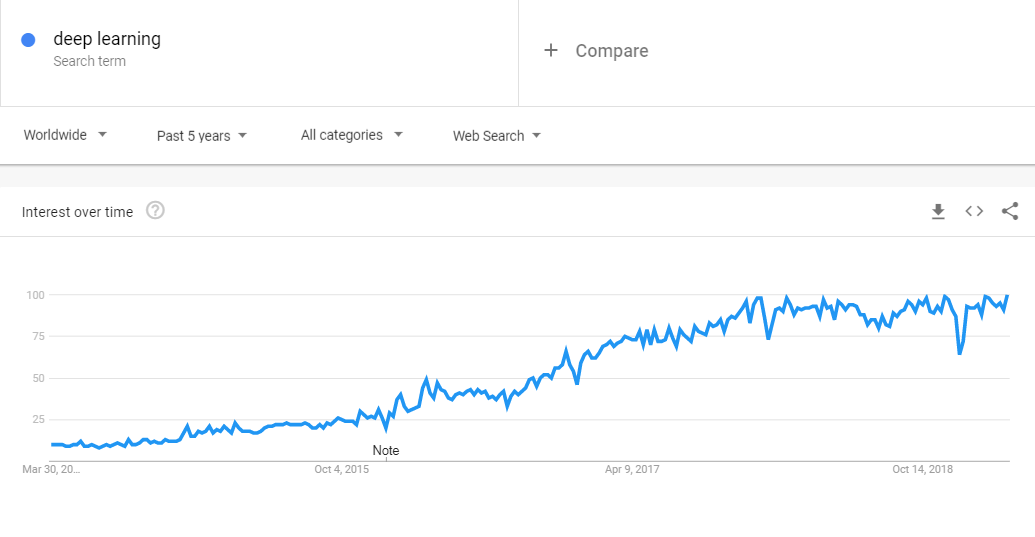

Deep learning is a trending topic worldwide. This wasn’t the case seven years ago, when it was a niche research area that drew little attention outside academia.

That changed with a 2012 research paper by Alex Krizhevsky and Ilya Sutskever of the University of Toronto, written under the guidance of the “godfather of AI”, Geoffrey Hinton. The paper proposed a convolutional neural network (ConvNet) architecture that beat traditional machine learning approaches on the ImageNet dataset by a wide margin. It won the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) 2012 with a top-5 error rate of 15.3%, compared to 26.2% for the second-best entry. The architecture is called AlexNet.

After AlexNet appeared in 2012, deep learning became the talk of the town. It accelerated deep learning research, and the field has grown rapidly ever since. Deep learning now approaches or matches human-level performance on many tasks, including number and character recognition, face detection and recognition, object detection and classification, and sentiment detection.

A key reason deep learning algorithms often outperform traditional machine learning algorithms is the ability of neural networks to extract features automatically. Traditional algorithms need a domain expert to do feature engineering; deep neural networks (DNNs), on the other hand, learn useful features from raw data, so little or no manual feature engineering is required.

It is fair to say that deep learning can deliver great results given enough data. The difficult part is choosing which deep learning framework to use.

In this article, we will explore the top 6 deep learning frameworks to use in 2019 and beyond. We will also look at industry demand and resource supply for each framework. Let’s dive right in:

1. TensorFlow

TensorFlow is a deep learning framework developed by the Google Brain team and written in Python, C++, and CUDA. It provides stable APIs for Python and C, while alpha- and beta-stage APIs, without compatibility guarantees, are available for C++, Go, Java, JavaScript, and Swift.

It also provides a lightweight version, TensorFlow Lite, for running models on mobile and embedded devices.

Why TensorFlow?

- Distributed training capability

- Availability for web, mobile, edge, embedded, and more

- Visual debugging with TensorBoard

- Very good documentation and community support

- Great for both research and production

2. Keras

Keras is a high-level neural network library for deep learning. It is written in Python and was developed by Google engineer François Chollet. It supports TensorFlow, CNTK, Theano, and PlaidML as backends. Additionally, TensorFlow ships its own Keras implementation, tf.keras, for faster prototyping.

Why Keras?

- Simple interface for deep learning

- Build a neural network with very few lines of code

- Very lightweight and easy to learn, along with brilliant community support

- Good for fast experiments and prototyping

- Support for multi-GPU training

3. PyTorch

PyTorch, TensorFlow’s biggest competitor, is developed by Facebook and written in Python, C, and CUDA. PyTorch is based on Torch, an open source machine learning library written in Lua. As a Python-first framework, it integrates tightly with Python and lets you use any Python library when building neural network layers.

Why PyTorch?

- Easy to learn and apply compared to TensorFlow

- Based on Python

- Debugging is easy with pdb, ipdb, or the PyCharm debugger

- Good option for research with dynamic computational graphs

- PyTorch 1.0 and above also offers good production support

4. Caffe

Caffe is a deep learning framework developed by the Berkeley AI Research (BAIR) team. Caffe stands for Convolutional Architecture for Fast Feature Embedding. It is written in C++ and also has a Python interface.

Why Caffe?

- Used by researchers, startups, and large-scale industrial projects

- Easy switch between CPU- and GPU-based computing

- Active development by contributors

- One of the fastest ConvNet implementations, with inference at about 1 ms per image (measured on a single NVIDIA K40 GPU, according to the Caffe project)

- Good cross-framework support, for example with PyTorch

5. MXNet

MXNet is a deep learning library from the Apache Software Foundation, developed as an incubating project under the Apache Incubator. It is written in C++ and provides interfaces for C++, Python, Julia, MATLAB, JavaScript, Go, R, Scala, and Perl. MXNet’s Python API comes with two high-level packages: the Gluon API and the Module API. MXNet is supported on both AWS and Azure.

Why MXNet?

- Easy to distribute on cloud infrastructure with powerful distributed computing

- Good choice for speech and handwriting recognition, NLP, and forecasting

- Trained models are highly portable and can be used in low-end devices like mobile phones

- Delivers easy model serving

- Good choice for enterprises

6. CNTK (Microsoft Cognitive Toolkit)

Microsoft Cognitive Toolkit is developed by Microsoft Research. It aims to deliver speed, scale, and accuracy with commercial-grade quality. It is written in C++ and has interfaces for Python, C++, Java, and C#.

Why CNTK?

- Support for user-defined functions on GPU

- Efficient GPU and CPU usage

- Provides both high-level and low-level APIs

- Built-in support for Apache Spark

- Easy implementations for GANs and reinforcement learning

Additionally, if you are looking for performance benchmarks or speed comparisons, Analytics India Magazine has published a very good article that compares multiple frameworks on two image processing models and one text processing model.

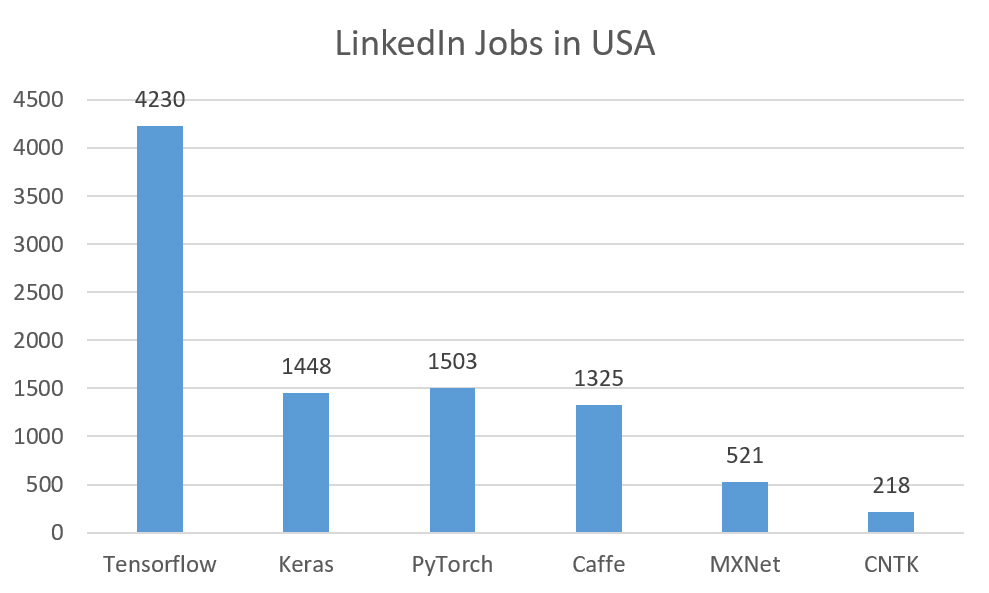

Industry Demand

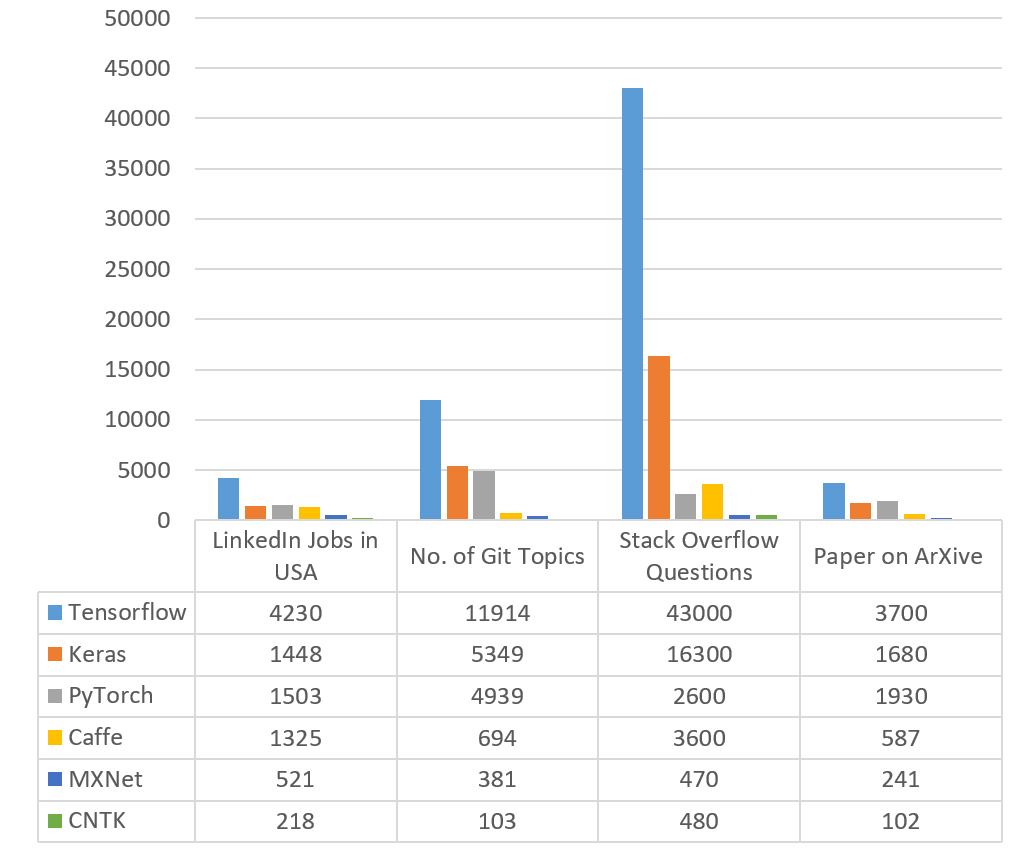

The number of job postings for each framework is a good measure of industry demand. To collect it, I used the number of jobs posted in the USA on LinkedIn, searching for each framework’s name with the location set to “United States”.

Note: The job opening data and all other data were collected on 29th March 2019.
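If you want to repeat this comparison with fresh numbers, raw posting counts are easier to read side by side as shares of the total. The sketch below assumes you have already collected one count per framework by hand from LinkedIn’s job search, as described above; `demand_shares` and `rank_by_demand` are hypothetical helpers, not part of any library.

```python
from typing import Dict, List, Tuple


def demand_shares(job_counts: Dict[str, int]) -> Dict[str, float]:
    """Return each framework's share of all postings, in percent.

    job_counts maps a framework name to the number of job postings you
    collected for it (hypothetical input; gather your own numbers).
    """
    total = sum(job_counts.values())
    if total == 0:
        raise ValueError("need at least one job posting to compute shares")
    return {name: 100.0 * count / total for name, count in job_counts.items()}


def rank_by_demand(job_counts: Dict[str, int]) -> List[Tuple[str, int]]:
    """Sort frameworks from most to fewest postings (ties keep input order)."""
    return sorted(job_counts.items(), key=lambda item: item[1], reverse=True)
```

Shares keep the comparison meaningful even if the overall number of postings changes between two collection dates.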

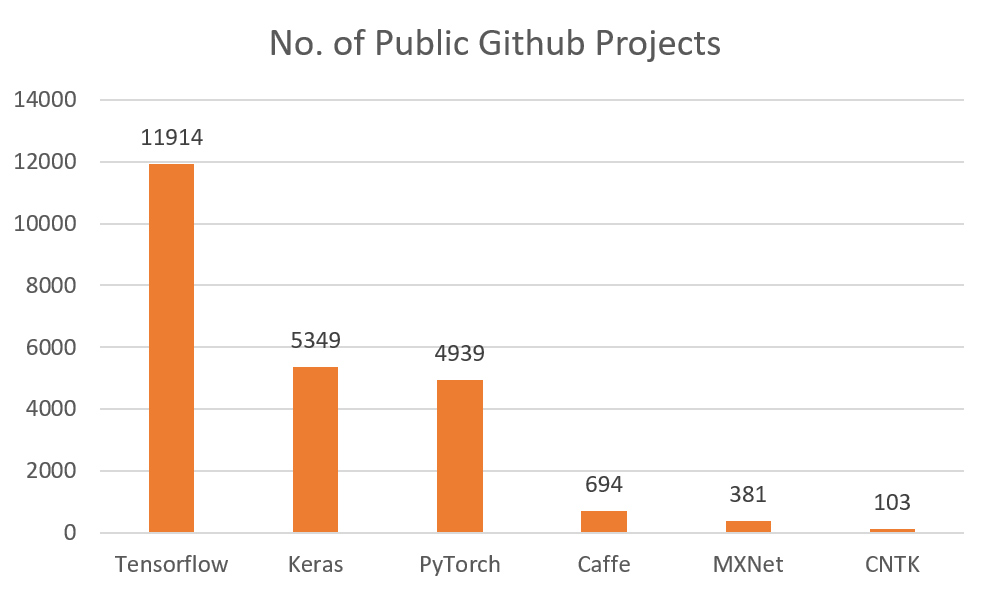

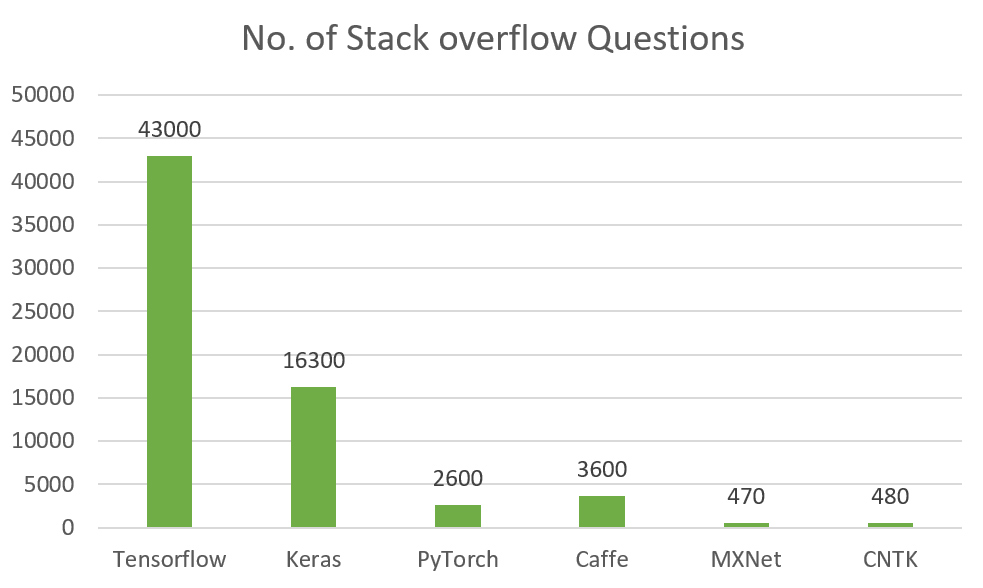

To understand the supply of resources, I collected three data points for each framework: the number of GitHub projects, the number of questions posted on Stack Overflow under the framework’s topic, and the number of papers published with the framework on arXiv.org during the last 12 months.

1. GitHub topics

GitHub hosts projects on its platform, and public projects are visible to everyone. GitHub groups projects under topics, so the projects for a framework can be found at github.com/topics/“topic-name”. For TensorFlow, that is github.com/topics/tensorflow.
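Building these topic URLs for all six frameworks is easy to script. A minimal sketch, assuming each framework’s topic slug is simply its lowercase name with spaces turned into hyphens; the slugs in `FRAMEWORK_TOPICS` are assumptions, so check that each page exists before counting its projects.

```python
from urllib.parse import quote

GITHUB_TOPICS_BASE = "https://github.com/topics/"

# Assumed topic slugs for the six frameworks in this article; verify each
# one on GitHub, since a framework may be tagged under a different topic.
FRAMEWORK_TOPICS = {
    "TensorFlow": "tensorflow",
    "Keras": "keras",
    "PyTorch": "pytorch",
    "Caffe": "caffe",
    "MXNet": "mxnet",
    "CNTK": "cntk",
}


def topic_url(topic_name: str) -> str:
    """Build a github.com/topics/<slug> URL from a topic name.

    Assumption: slugs are lowercase, with hyphens instead of spaces.
    """
    slug = topic_name.strip().lower().replace(" ", "-")
    return GITHUB_TOPICS_BASE + quote(slug)


if __name__ == "__main__":
    # Print one topic page per framework.
    for framework, slug in FRAMEWORK_TOPICS.items():
        print(f"{framework}: {topic_url(slug)}")
```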

2. Stack Overflow questions

The number of Stack Overflow questions gives an idea of community engagement: the more questions asked and answered, the easier it is to find a solution when you are stuck.
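Instead of reading counts off the website, you can query the Stack Exchange API, whose `/tags/{tags}/info` endpoint returns tag objects with a `name` and a `count` (the number of questions carrying the tag). A minimal sketch, assuming API version 2.3 and the tag names listed in `FRAMEWORK_TAGS`; the helper functions are hypothetical, and only `fetch_question_counts` needs network access.

```python
import gzip
import json
from typing import Dict, List
from urllib.parse import quote, urlencode
from urllib.request import urlopen

API_BASE = "https://api.stackexchange.com/2.3/tags/"

# Assumed Stack Overflow tag names for the six frameworks.
FRAMEWORK_TAGS = ["tensorflow", "keras", "pytorch", "caffe", "mxnet", "cntk"]


def tag_info_url(tags: List[str]) -> str:
    """Build a /tags/{tags}/info URL; several tags are joined with ';'."""
    path = quote(";".join(tags), safe=";")
    return f"{API_BASE}{path}/info?" + urlencode({"site": "stackoverflow"})


def question_counts(response_text: str) -> Dict[str, int]:
    """Map each tag name to its question count from an API JSON response."""
    data = json.loads(response_text)
    return {item["name"]: item["count"] for item in data.get("items", [])}


def fetch_question_counts(tags: List[str]) -> Dict[str, int]:
    """Fetch live counts for the given tags (requires network access)."""
    with urlopen(tag_info_url(tags)) as resp:
        raw = resp.read()
        # The API compresses its responses; decompress if the server says so.
        if resp.headers.get("Content-Encoding") == "gzip":
            raw = gzip.decompress(raw)
    return question_counts(raw.decode("utf-8"))
```

Splitting URL building and JSON parsing from the network call keeps both parts easy to check without hitting the API.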

3. Papers on arXiv

arXiv.org is an online repository of research papers, largely preprints, managed by Cornell University, and it covers multiple fields of study. The data used here is the number of research papers associated with each framework that were archived during the last 12 months.
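A similar count can be pulled from the arXiv API. This is a sketch under several assumptions: the article does not say exactly how papers were matched to frameworks, so a plain keyword search (`all:<name>`) stands in for it; the 12-month window uses the API’s `submittedDate` range filter; and the total is read from the `opensearch:totalResults` element of the returned Atom feed. The helper names are hypothetical, so check the query syntax against the arXiv API user manual before relying on the numbers.

```python
import xml.etree.ElementTree as ET
from datetime import date, timedelta
from urllib.parse import urlencode

ARXIV_API = "http://export.arxiv.org/api/query"
OPENSEARCH = "{http://a9.com/-/spec/opensearch/1.1/}"


def arxiv_query_url(keyword: str, end: date, days: int = 365) -> str:
    """Build a query for papers mentioning `keyword` submitted in the window.

    Assumption: submittedDate takes [YYYYMMDDHHMM TO YYYYMMDDHHMM] bounds.
    """
    start = end - timedelta(days=days)
    window = f"[{start:%Y%m%d}0000 TO {end:%Y%m%d}2359]"
    query = f"all:{keyword} AND submittedDate:{window}"
    # Only totalResults is needed, so ask for a single entry.
    params = {"search_query": query, "start": 0, "max_results": 1}
    return f"{ARXIV_API}?{urlencode(params)}"


def total_results(atom_xml: str) -> int:
    """Read opensearch:totalResults from an arXiv Atom feed (0 if absent)."""
    root = ET.fromstring(atom_xml)
    node = root.find(f"{OPENSEARCH}totalResults")
    return int(node.text) if node is not None and node.text else 0
```

For the window used in this article, `arxiv_query_url("tensorflow", date(2019, 3, 29))` covers submissions from 29 March 2018 to 29 March 2019.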

As you can see, each framework has its own advantages and challenges. Framework selection depends a lot on the kind of deep learning project, in-house expertise, and production scenario.

If you are not sure which framework to use, get in touch with us. We have expertise across all major frameworks and neural network architectures such as VGGNet, AlexNet, Inception V3, and SqueezeNet. Our cloud platform partnerships with Azure, AWS, IBM Watson, and Google Cloud also help us deploy enterprise-grade solutions.