Introduction

Android is currently one of the most widely used open-source, general-purpose operating systems. It is built on the Linux kernel and runs on devices such as smartphones and tablets. This article explains how audio functionality is implemented in Android and where the corresponding source code is located. It describes what happens behind the scenes from the moment we play audio or video in a media player application until we hear the sound.

About Android Audio Architecture

In general, the Android audio architecture is an audio signal processing system that involves multiple operations and provides functionality such as recording microphone audio, playing music, adding audio effects, and real-time voice communication. It has to support a range of hardware, such as HDMI, headset, speaker, earpiece, mic, Bluetooth SCO, and Bluetooth A2DP (Advanced Audio Distribution Profile). It also has to support different software, such as VoIP (Voice over Internet Protocol) applications, media players and recorders, SIP (Session Initiation Protocol) applications, and phone calls.

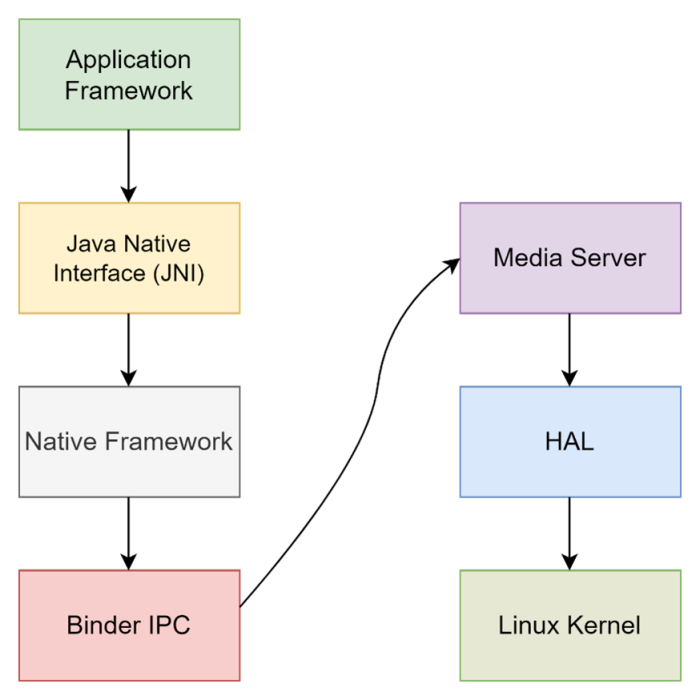

Block Diagram of Android Audio Architecture

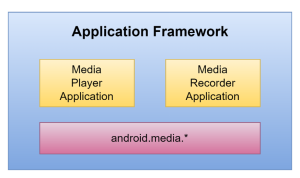

Application Framework

The application framework includes the app code, which uses the android.media APIs to communicate with the audio hardware. Examples are a voice recorder application or a media player application. The APIs in the android.media package can be used to manage audio files, stream audio from a remote source, and play or record audio locally.

A request from an Android application is sent to the application framework. This layer provides top-level services to applications in the form of Java classes. For example, the application framework layer contains the activity manager, package manager, ringtone manager, and content providers, all of which are Java classes that provide higher-level services to applications.

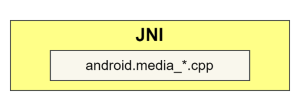

Java Native Interface (JNI)

JNI defines a way for managed code (code written in the Java or Kotlin programming languages) to interact with native code (code written in C/C++). When an Android application needs to use a C++ library, JNI is what allows Java code to call into it directly.

The JNI code associated with the android.media package calls the lower-level native code to access the audio hardware. Requests from the application framework reach the native media player through JNI, so JNI connects the application framework with the C++ implementation. For example, the application framework is written in Java, and JNI maps calls made from that Java code to the C++ implementations present in the native media player.

The JNI code is located in the following paths:

- frameworks/base/core/jni/

- frameworks/base/media/jni/

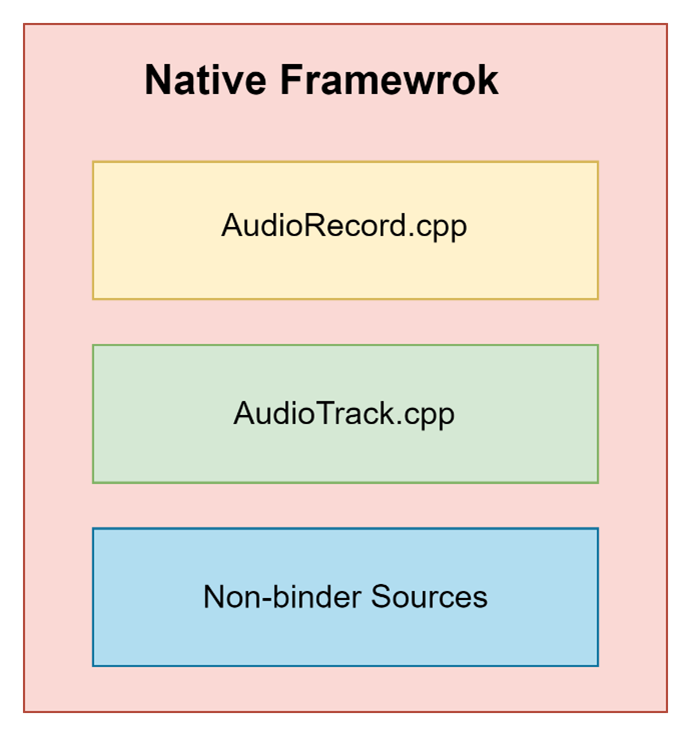

Native Framework

The native framework provides a native equivalent of the android.media package. The native APIs that are usually called are implemented in the AudioTrack and AudioRecord C++ files. These APIs instantiate a separate object for each media file. The native framework sends requests to the media server using IPC.

The native framework code is located in libmedia (frameworks/av/media/libmedia).

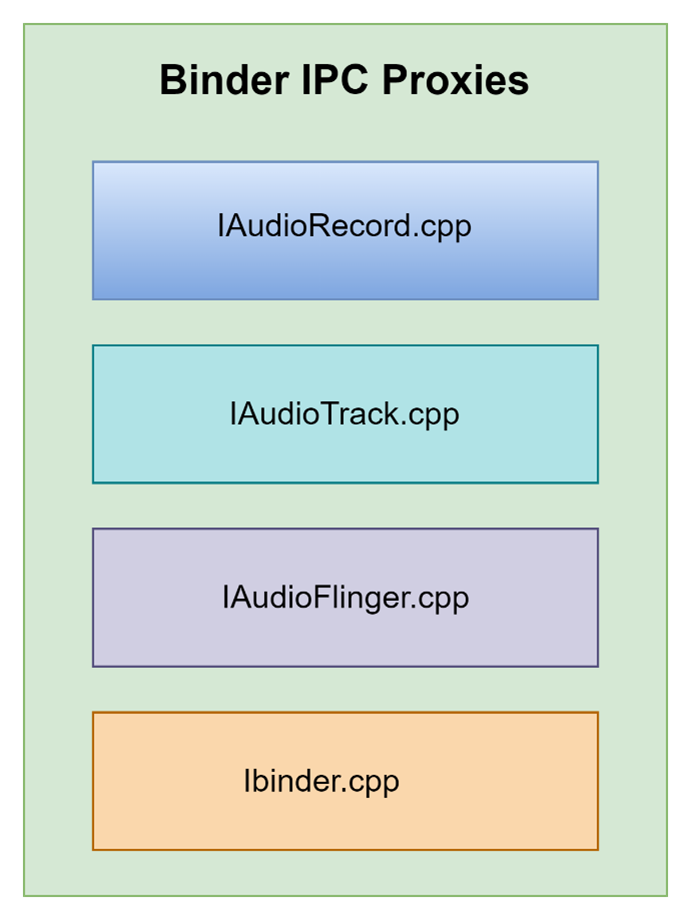

Binder IPC Proxies

Binder IPC proxies facilitate communication across process boundaries. Communication between the native framework (the native player) and the media server happens over IPC (inter-process communication), in this case using the Binder IPC mechanism. The native framework sends requests to the media server by invoking the Binder IPC proxies, which gives it access to the audio-related services of the media server.

Binder IPC Proxies are in frameworks/av/media/libmedia and begin with the letter “I”.

About the Binder IPC in Android

This mechanism allows the application framework layer to cross process boundaries and call into the Android system services code. It enables the high-level framework APIs to communicate with the Android system services. Android applications run in their own process space and cannot access the underlying hardware or system resources directly. Instead, they talk to the system services through Binder IPC to access the underlying hardware or system resources.
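The proxy idea described above can be illustrated with a small model. This is only a sketch in plain Python, not Android code: the class names, the `set_volume`/`get_volume` methods, and the JSON message format are all assumptions made for illustration. Real Binder marshals data into parcels and crosses processes through a kernel driver, while here the "transport" is just a function call.

```python
import json


class AudioServiceStub:
    """Server side (hypothetical): would live in the media server process."""

    def __init__(self):
        self._volume = 5

    def on_transact(self, payload: bytes) -> bytes:
        # Unpack the request, run the real method, pack the reply.
        request = json.loads(payload.decode("utf-8"))
        if request["method"] == "set_volume":
            self._volume = request["args"][0]
            result = None
        elif request["method"] == "get_volume":
            result = self._volume
        else:
            raise ValueError(f"unknown method: {request['method']}")
        return json.dumps({"result": result}).encode("utf-8")


class AudioServiceProxy:
    """Client side (hypothetical): looks like a local object to the caller."""

    def __init__(self, transact):
        # 'transact' stands in for the IPC channel to the other process.
        self._transact = transact

    def _call(self, method, *args):
        payload = json.dumps({"method": method, "args": list(args)})
        reply = self._transact(payload.encode("utf-8"))
        return json.loads(reply.decode("utf-8"))["result"]

    def set_volume(self, level):
        self._call("set_volume", level)

    def get_volume(self):
        return self._call("get_volume")


stub = AudioServiceStub()
proxy = AudioServiceProxy(stub.on_transact)
proxy.set_volume(8)
print(proxy.get_volume())  # prints 8
```

The caller only sees ordinary method calls on the proxy. Everything that crosses the boundary is a serialized message, which is what lets the two sides live in different processes.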

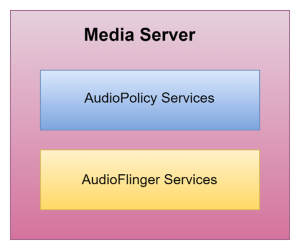

Media Server and its Services

Instantiating the media player service subsystem involves creating a media player service object. Once the media player service is up and running and receives a request from the native media player, it creates a new media player service client to handle media control for that request. In other words, a client is created for every request.
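The "one client per request" behaviour can be modelled in a few lines. This is a simplified sketch in plain Python rather than the actual media server code, and the class names, method names, and file names are hypothetical.

```python
import itertools


class MediaPlayerClient:
    """Holds the playback state for a single request (hypothetical model)."""

    def __init__(self, client_id, source):
        self.client_id = client_id
        self.source = source
        self.state = "idle"

    def start(self):
        self.state = "started"

    def pause(self):
        self.state = "paused"


class MediaPlayerService:
    """Creates and tracks one client object per incoming request."""

    def __init__(self):
        self._next_id = itertools.count(1)
        self.clients = {}

    def create(self, source):
        client = MediaPlayerClient(next(self._next_id), source)
        self.clients[client.client_id] = client
        return client


service = MediaPlayerService()
music = service.create("song.mp3")
video = service.create("clip.mp4")
music.start()
print(music.state, video.state)  # prints: started idle
```

Because each request has its own client object, controlling one playback session (starting the music) does not affect the state of another (the video stays idle).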

Audio Policy Manager

The audio policy manager is a service responsible for all actions that require a policy decision to be made first. Policy decisions include stream volume management, new input/output stream management, re-routing, etc.

The audio policy service has the following duties:

- To keep track of the current system state, which includes multiple user requests, the state of removable devices and their connections, phone state, etc.

- To receive notifications of system state changes and user actions, which are passed to the audio policy manager through the methods of audio_policy.

The configuration file for the audio policies is located in system/etc/. It describes the audio devices present on the product. The audio policy service loads this configuration file (audio_policy.conf) from system/etc at startup.

AudioPolicyService can also be seen as the policy maker. It decides several crucial factors, including:

- When an audio interface device should be opened.

- Which audio stream type corresponds to which device, etc.

The source code of the audio policy manager (service) is located in frameworks/av/services/audiopolicy.
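To make the routing decision more concrete, here is a minimal sketch of a stream-type-to-device lookup. It is written in plain Python, and the preference table, the device names, and the `select_output_device` function are invented for illustration. The real audio policy manager considers much more state (phone state, forced routes, and so on).

```python
# Preferred output devices per stream type, highest priority first.
# These values are illustrative assumptions, not Android's actual rules.
ROUTING_PREFERENCES = {
    "music": ["bluetooth_a2dp", "wired_headset", "speaker"],
    "voice_call": ["bluetooth_sco", "wired_headset", "earpiece"],
}


def select_output_device(stream_type, connected_devices):
    """Return the first preferred device that is currently connected."""
    for device in ROUTING_PREFERENCES[stream_type]:
        if device in connected_devices:
            return device
    raise LookupError(f"no output device available for {stream_type}")


connected = {"speaker", "earpiece", "wired_headset"}
print(select_output_device("music", connected))       # prints wired_headset
print(select_output_device("voice_call", connected))  # prints wired_headset

# Unplugging the headset changes the system state, so routing changes too.
connected.remove("wired_headset")
print(select_output_device("music", connected))       # prints speaker
print(select_output_device("voice_call", connected))  # prints earpiece
```

The point of the sketch is that the decision depends on both the stream type and the current device state, which is why the policy service has to track connections as they change.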

AudioFlinger

AudioFlinger is the core of the Android audio system. It is a system service that starts at boot time and enables the platform for the use cases associated with sound.

AudioFlinger enables these use cases in the following ways:

- It provides the access interface that upper layers use to work with audio.

- It uses the HAL to manage the audio devices.

Other processes can access AudioFlinger through the service manager and call functions such as openOutput and createTrack, along with a series of other interfaces, to drive AudioFlinger to perform the actual audio processing.

The audio interfaces supported by the audio system (AudioFlinger) fall into three categories.

AudioFlinger contains the actual audio services, which reside in the media server. The AudioFlinger source code is the code that communicates with the audio HAL implementation. Audio processing takes place in AudioFlinger with the help of playback threads.

The AudioFlinger code is located in frameworks/av/services/audioflinger.

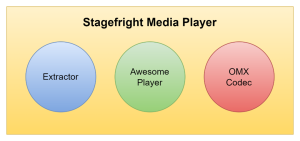

Role of Stagefright (stagefright media player)

All requests from the media player service come to a component called the Stagefright media player. At the native level, Stagefright acts as the playback engine for media, and it has built-in software-based codecs for popular media formats. Stagefright has three components: the extractor, Awesome player, and the OMX codec.

The media extractor contains data parsers for the different media types, so it extracts data in the required format, e.g. MPEG, MP3, etc.

Awesome player sets up communication between the different components. The parsed data coming out of the extractor acts as input to Awesome player, which provides functionality such as video captioning, connecting audio and video, providing sources with appropriate decoders, and playing, pausing, starting, restarting, and synchronizing media.

OMX codec: To prepare for playback, Awesome player uses the OMX codec component to set up the decoder for each data source. The OMX codec component contains the decoders, and the final playback is prepared using them. From the OMX codec, control passes to the kernel driver, which interacts with the hardware and the HAL implementation.
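The division of work between extractor, player, and decoders can be sketched as a tiny pipeline. This is a toy model in plain Python: the container is represented as a dictionary, the "decoders" only wrap strings, and all function names are hypothetical. It is meant to show the flow of data, not how Stagefright is actually implemented.

```python
def extract_tracks(container):
    """Extractor step: split a parsed container into (mime, frames) tracks."""
    return list(container.items())


# Decoder registry keyed by MIME type. The lambdas are stand-ins for real
# codecs and simply label each frame with the output format.
DECODERS = {
    "audio/mpeg": lambda frame: f"pcm({frame})",
    "video/avc": lambda frame: f"yuv({frame})",
}


def prepare_and_play(container):
    """Player step: give each track the matching decoder and collect output."""
    output = []
    for mime, frames in extract_tracks(container):
        decode = DECODERS[mime]  # pick the decoder for this data source
        output.extend(decode(frame) for frame in frames)
    return output


media_file = {"audio/mpeg": ["a0", "a1"], "video/avc": ["v0"]}
print(prepare_and_play(media_file))
# prints ['pcm(a0)', 'pcm(a1)', 'yuv(v0)']
```

The player never needs to know how a format is parsed or decoded. It only connects the extractor's output to the right decoder, which mirrors the coordinating role described above.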

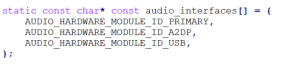

Hardware Abstraction Layer (HAL)

The Hardware Abstraction Layer (HAL) defines the standard interface on which audio services depend. Hardware manufacturers or vendors must implement this interface for the audio hardware to function correctly. Android’s audio HAL connects the higher-level, audio-specific framework APIs in android.media to the underlying audio driver and hardware. The code for the audio HAL interface is located in hardware/libhardware/include/hardware.

There are two interfaces for the audio HAL:

- The interface that represents the main functions of an audio device:

hardware/libhardware/include/hardware/audio.h

- The interface that represents effects that can be applied to audio, such as echo cancellation, noise suppression, and downmixing:

hardware/libhardware/include/hardware/audio_effect.h
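The idea of a standard interface that each vendor implements can be illustrated with an abstract base class. This is a conceptual sketch in plain Python with hypothetical class names. The real HAL headers are C declarations, and a vendor supplies the functions behind them.

```python
from abc import ABC, abstractmethod


class AudioOutputStream(ABC):
    """The contract the framework relies on (hypothetical, simplified)."""

    @abstractmethod
    def write(self, pcm: bytes) -> int:
        """Send PCM data to the hardware and return the bytes accepted."""


class FakeSpeakerStream(AudioOutputStream):
    """A made-up vendor implementation that just records what it receives."""

    def __init__(self):
        self.received = bytearray()

    def write(self, pcm: bytes) -> int:
        self.received += pcm
        return len(pcm)


def play(stream: AudioOutputStream, pcm: bytes) -> int:
    # Framework-side code: depends only on the interface, not on the vendor.
    return stream.write(pcm)


speaker = FakeSpeakerStream()
print(play(speaker, b"\x00\x01\x02\x03"))  # prints 4
```

Since `play` depends only on `AudioOutputStream`, a different vendor implementation can be substituted without changing the framework-side code, which is the purpose of the abstraction layer.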

Linux Kernel

The Linux kernel is the core of the Android architecture. It manages all the drivers needed at runtime, such as Bluetooth, memory, display, audio, and camera. It also provides an abstraction layer between the device hardware and the other components of the Android architecture. This abstraction layer is responsible for power management, memory management, device management, etc. The Linux kernel acts as a bridge between the system and Android applications and helps manage security between them.

The Linux kernel contains the audio driver, which interacts with the audio hardware and the HAL implementation. Common audio drivers such as ALSA (Advanced Linux Sound Architecture) or OSS (Open Sound System), or a custom driver, can be used.

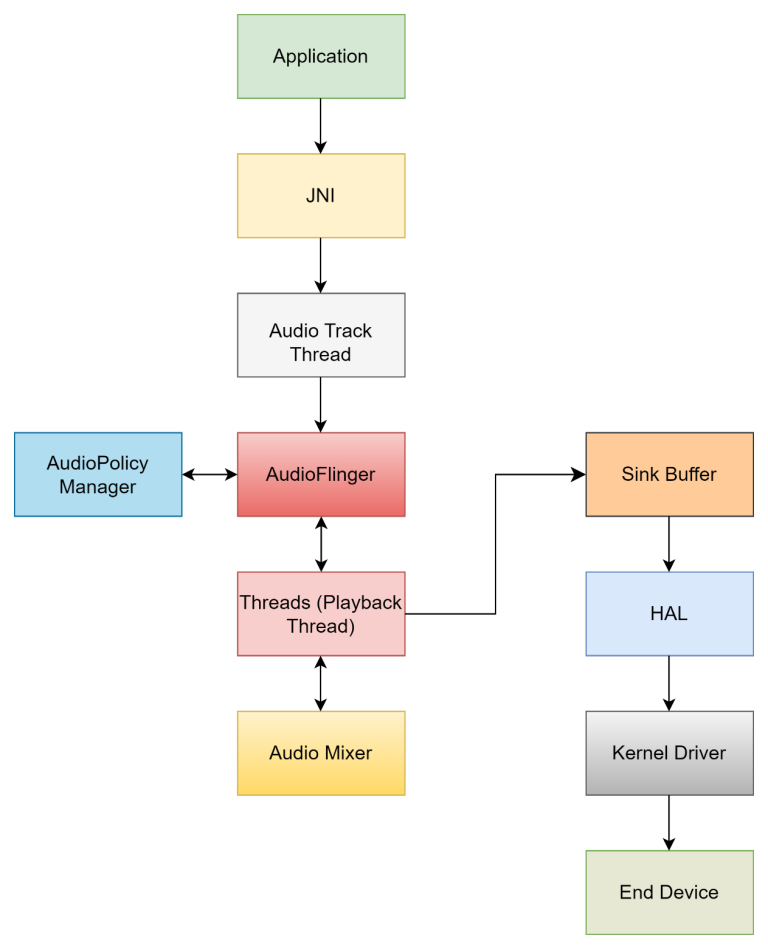

Android Audio Code Flow

The Android Audio Architecture section described the architecture of the Android audio framework in detail. Based on this, the above figure shows the actual audio code flow, from playing audio in a media player Android application to hearing that audio on the end device. The end device may be an internal speaker, a Bluetooth device, or wired headphones.

The role of the audio mixer is to align and mix data coming from multiple input sources before producing the output. The audio mixer can perform actions such as mixing multiple audio streams, trimming audio, and controlling the volume.
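As a rough illustration of what mixing means at the sample level, the sketch below sums several 16-bit PCM tracks with a per-track volume and clamps the result to the valid range. It is a simplified model in plain Python, and the `mix` function is hypothetical. A production mixer also handles resampling, channel layouts, and fixed-point arithmetic.

```python
INT16_MIN, INT16_MAX = -32768, 32767


def mix(tracks, volumes):
    """Mix 16-bit PCM tracks sample by sample, applying a gain per track."""
    if len(tracks) != len(volumes):
        raise ValueError("need exactly one volume per track")
    length = max((len(track) for track in tracks), default=0)
    mixed = []
    for i in range(length):
        total = 0.0
        for track, volume in zip(tracks, volumes):
            if i < len(track):  # shorter tracks contribute silence at the end
                total += track[i] * volume
        # Clamp so that loud passages saturate instead of wrapping around.
        mixed.append(max(INT16_MIN, min(INT16_MAX, round(total))))
    return mixed


print(mix([[1000, 2000], [3000]], [1.0, 0.5]))  # prints [2500, 2000]
print(mix([[30000], [30000]], [1.0, 1.0]))      # prints [32767]
```

The second call shows why clamping matters: the plain sum (60000) does not fit in 16 bits, so the output is limited to the maximum sample value.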

AudioFlinger processes the audio in the playback thread and stores the processed data in the sink buffer. The sink buffer data is then forwarded to the HAL and on to the end device via the Linux kernel driver (i.e. the end device driver).
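The hand-off from the playback thread to the HAL through a sink buffer is a producer/consumer arrangement, which can be sketched as follows. This is a conceptual model in plain Python using a bounded queue as the "sink buffer". The function names are hypothetical, and the buffering used inside AudioFlinger is not a Python queue.

```python
import queue
import threading


def playback_thread(track, sink, chunk_size):
    """Producer: push processed audio into the sink buffer in chunks."""
    for start in range(0, len(track), chunk_size):
        sink.put(track[start:start + chunk_size])  # blocks while sink is full
    sink.put(None)  # end-of-stream marker


def hal_writer(sink, device):
    """Consumer: drain the sink buffer and hand the data to the 'device'."""
    while True:
        chunk = sink.get()
        if chunk is None:
            break
        device.extend(chunk)


def run_pipeline(track, chunk_size=4, sink_capacity=2):
    sink = queue.Queue(maxsize=sink_capacity)  # bounded sink buffer
    device = []                                # stands in for the end device
    producer = threading.Thread(target=playback_thread,
                                args=(track, sink, chunk_size))
    consumer = threading.Thread(target=hal_writer, args=(sink, device))
    producer.start()
    consumer.start()
    producer.join()
    consumer.join()
    return device


print(run_pipeline(list(range(10))))
# prints [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
```

The bounded buffer keeps the producer from running arbitrarily far ahead of the consumer, and the samples arrive at the device in the order they were produced.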

Conclusion

The Android audio architecture describes how audio processing is actually performed on an Android device. The Android internal framework handles the audio itself as well as audio-related operations such as playing, pausing, and restarting audio, changing the volume, etc. Every layer in the Android audio architecture plays an important role in audio processing.

eInfochips has a highly capable engineering team that specializes in providing top-notch software services for Android media, Android audio, and Android auto. With a focus on excellence and innovation, our skilled professionals leverage their expertise to deliver cutting-edge solutions in the dynamic realm of Android technology. From crafting robust Android media applications to ensuring seamless audio integration and optimizing the Android auto experience, our team is dedicated to meeting and exceeding client expectations. With a commitment to quality and a deep understanding of the Android ecosystem, eInfochips stands as a trusted partner for those seeking tailored and reliable software services in the ever-evolving landscape of Android development.